AWS Solutions Architect Associate Exam Cheatsheets [SAA-C03]

This guide was prepared by me during my studies for the AWS Solutions Architect exam. Below is a comprehensive collection of key topics and a cheat sheet for quick reference.

Analytics

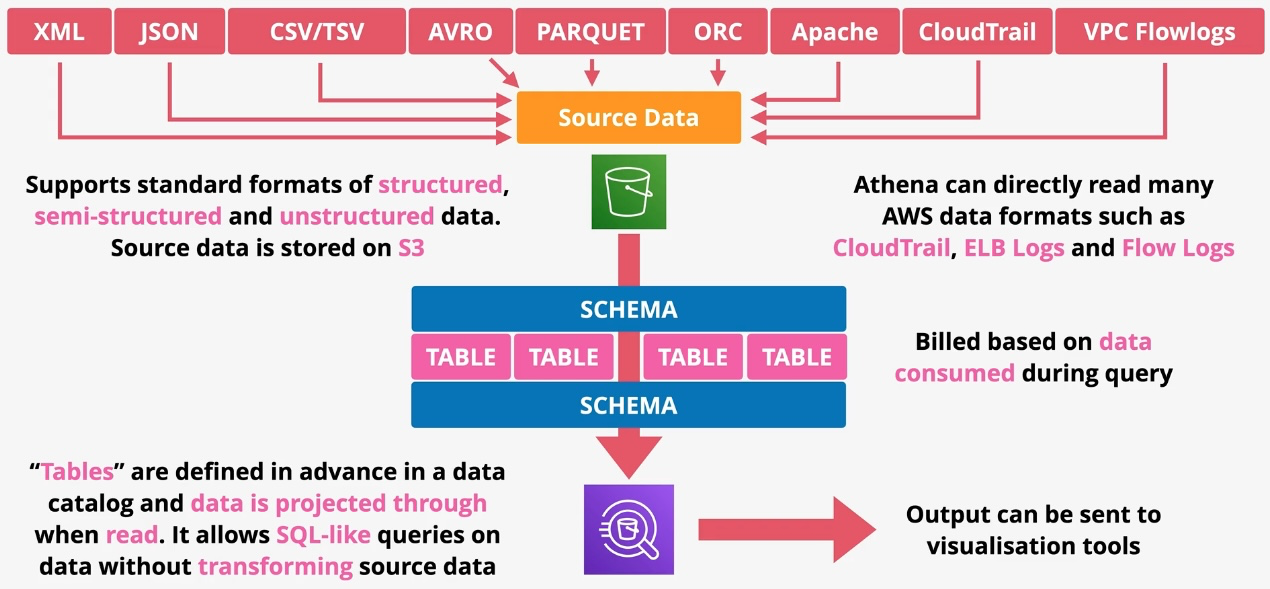

Amazon Athena

Type: Serverless Interactive Query Service Description: Amazon Athena is a serverless query service that allows you to analyze data stored in Amazon S3 using standard SQL. With Athena, there is no need to manage infrastructure or perform complex ETL processes. It is ideal for running ad-hoc queries and gaining insights from large datasets quickly and cost-effectively.

Key Features

- Serverless Architecture:

- No infrastructure to manage; simply define the data and execute SQL queries.

- SQL Query Support:

- Use standard ANSI SQL for querying structured, semi-structured, and unstructured data.

- Supports Various Data Formats:

- Compatible with CSV, JSON, Parquet, ORC, Avro, and other popular data formats.

- Data Partitioning:

- Query specific partitions of data to improve query performance and reduce costs.

- Schema on Read:

- Define schemas when querying the data rather than during data ingestion, making it flexible for analyzing raw data.

- Integration with AWS Glue:

- Uses AWS Glue Data Catalog for metadata management and schema definitions.

- Scalable Query Processing:

- Automatically scales to handle complex and large queries.

- Pay-Per-Query Pricing:

- Billed based on the amount of data scanned by queries, encouraging optimization.

Subtypes and Components

- Query Execution Engine:

- Powered by Presto, an open-source distributed SQL query engine optimized for large-scale data analysis.

- Data Catalog Integration:

- Leverages AWS Glue Data Catalog to store metadata about tables and databases.

- Federated Query Support:

- Query data across various sources, including RDS, Redshift, or on-premises databases, without moving data.

- Partitioning and Compression:

- Supports partitioned and compressed datasets for enhanced performance.

Use Cases

- Ad-Hoc Data Analysis:

- Quickly analyze data in Amazon S3 without complex data pipelines.

- Log and Event Analysis:

- Query server logs, clickstream data, or application logs stored in S3.

- Data Exploration:

- Explore raw or semi-structured data for insights before loading into a data warehouse.

- Business Intelligence (BI):

- Integrate with tools like Amazon QuickSight for interactive reporting and dashboards.

- ETL Replacement:

- Simplify ETL workflows by directly querying data in its native format.

- Machine Learning Data Preparation:

- Prepare datasets for machine learning workflows by filtering and transforming data using SQL. Integration with AWS Services Integrated With:

- Amazon S3: Primary data storage for querying with Athena.

- AWS Glue: Provides the Data Catalog to manage metadata and table definitions.

- Amazon QuickSight: Enables visualization of Athena query results in interactive dashboards.

- Amazon CloudWatch Logs: Analyze logs stored in S3.

- AWS Lake Formation: Simplifies managing and securing data lakes queried by Athena. Not Directly Integrated With:

- RDS or Aurora: While Athena cannot natively query these databases, you can export their data to S3 for analysis.

- DynamoDB: Data must be exported to S3 or queried via federated queries using AWS Glue connectors.

Governance and Security

- Access Control:

- Manages access through IAM policies, ensuring users have the appropriate permissions for querying and managing resources.

- Encryption:

- Supports data encryption at rest (using S3 encryption) and in transit (using SSL/TLS).

- Query results can also be encrypted and stored in S3.

- Auditing and Logging:

- Integrated with AWS CloudTrail for logging query and access events.

- Data Privacy:

- Access control integrated with AWS Lake Formation to enforce granular security policies. Benefits

- No Infrastructure Management:

- Focus on querying data without worrying about provisioning or maintaining servers.

- Cost Efficiency:

- Pay only for the data scanned, and optimize costs by compressing, partitioning, and filtering data.

- Fast Query Execution:

- Presto-powered execution ensures low-latency querying for large datasets.

- Flexibility:

- Analyze various data formats, including structured and semi-structured, without transformation.

- Scalable:

- Automatically scales resources to match query demands, enabling high availability.

AWS Data Exchange

- Type: Data Sharing Service

- Description: Facilitates secure and efficient exchange of third-party data sets, enabling customers to subscribe to and use data products in the cloud.

- Use Cases:

- Accessing financial market data

- Integrating healthcare datasets

- Utilizing demographic and consumer data for analytics Additional Features:

- Subscriptions: Automated updates to subscribed datasets.

- Data API Access: Seamlessly retrieve data directly into analytics tools. Governance and Security:

- Control data access with IAM roles.

- Ensure compliance by subscribing only to verified providers. Examples:

- Leverage third-party weather data for logistics optimization.

AWS Data Pipeline

- Type: Data Workflow Orchestration

- Description: Automates the movement and transformation of data between different AWS services and on-premises data sources at specified intervals.

- Use Cases:

- Periodic ETL processes

- Data replication across services

- Data processing workflows

Amazon EMR (Elastic MapReduce)

- Type: Managed Big Data Framework

- Description: Provides a managed Hadoop framework to process vast amounts of data across resizable clusters of Amazon EC2 instances.

- Subtypes:

- Hadoop: Batch processing and distributed storage

- Spark: Real-time data analytics and machine learning

- Presto: Interactive SQL queries on large datasets

- Hive: Data warehousing and SQL-like querying

- HBase: NoSQL database for big data applications

- Use Cases:

- Large-scale data processing

- Log analysis

- Machine learning workloads Additional Features:

- Auto Scaling Clusters: Dynamically add or remove nodes based on workload.

- Custom AMIs: Use pre-configured images for specific analytics needs. Governance and Security:

- Encrypt data in transit with SSL and at rest using S3 encryption options.

- Monitor job execution via CloudWatch logs. Examples:

- Process petabytes of clickstream data for behavioral analysis.

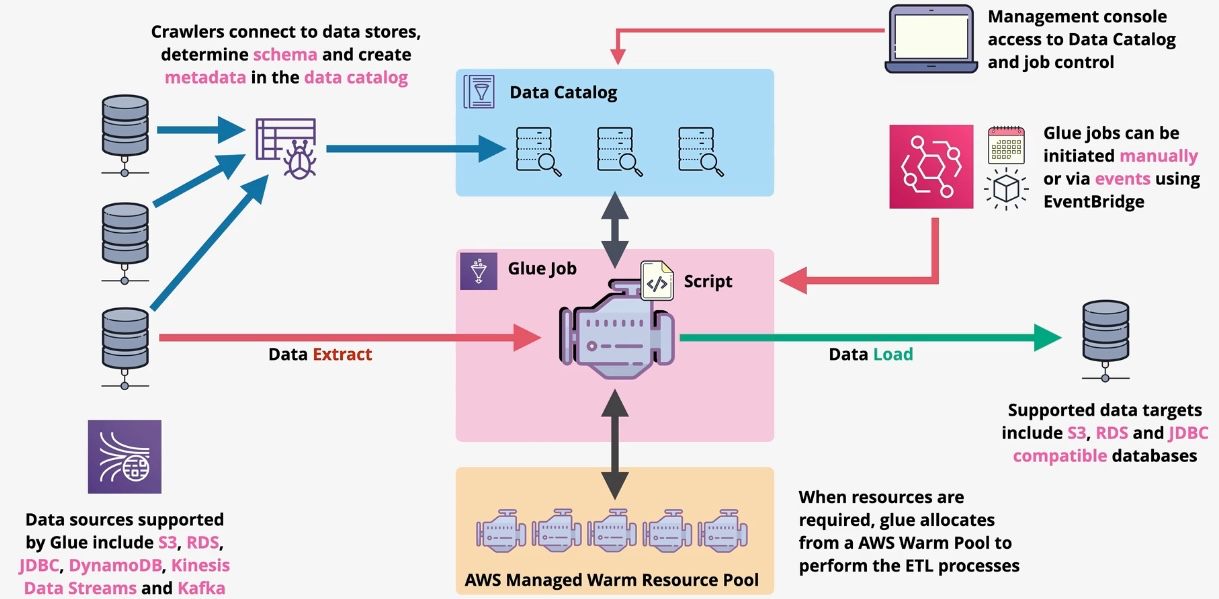

AWS Glue

Type: Serverless ETL (Extract, Transform, Load) Service Description: AWS Glue is a fully managed ETL service that simplifies the process of extracting, transforming, and loading data for analytics. It automatically discovers and catalogs data, generates ETL code, and provides tools to clean and transform datasets for use in data lakes, warehouses, and machine learning workflows.

Key Features

- Serverless Architecture:

- No infrastructure management; AWS Glue scales automatically to meet workload demands.

- Data Catalog:

- Centralized metadata repository to store table definitions, schemas, and job runtime metrics.

- Compatible with AWS Lake Formation for enhanced governance and security.

- Built-In ETL Engine:

- Generates Python or Scala ETL scripts, which can be customized using an integrated development environment (IDE).

- Schema Discovery:

- Automatically detects and catalogs schema and format of data in various sources (e.g., S3, RDS, DynamoDB).

- Developer-Friendly:

- Supports PySpark for building ETL workflows and integrates with Jupyter Notebooks for interactive development.

- Job Scheduling:

- Allows scheduling and orchestrating ETL workflows, with dependency tracking and retries for failed jobs.

- Streaming ETL:

- Supports real-time data transformation for streaming data sources like Amazon Kinesis and Kafka.

- Integration with AWS Ecosystem:

- Works seamlessly with services like Amazon S3, Redshift, Athena, and more for end-to-end data processing.

- Data Preparation:

- AWS Glue DataBrew provides a visual interface for preparing and transforming data without writing code.

- Data Quality and Lineage:

- Track and audit the lineage of data transformations for compliance and debugging.

Subtypes and Components

- AWS Glue Data Catalog:

- Central repository for metadata that integrates with Amazon Athena, Redshift, and Lake Formation.

- ETL Jobs:

- Fully managed Spark-based jobs for transforming and moving data between sources and destinations.

- Glue Crawlers:

- Automatically crawl data sources to detect schema and store metadata in the Glue Data Catalog.

- AWS Glue DataBrew:

- Visual data preparation tool for cleaning and enriching data without code.

- AWS Glue Studio:

- Low-code interface to visually design and manage ETL workflows.

- Triggers and Workflows:

- Orchestrate complex ETL pipelines with event-based triggers and multi-step workflows.

- Glue Connectors:

- Pre-built connectors to integrate with on-premises and cloud-based data sources, including SaaS applications.

Use Cases

- Data Lakes:

- Create and manage data lakes by ingesting, cataloging, and transforming raw data for analysis.

- Data Warehousing:

- Load transformed data into Amazon Redshift for BI and reporting use cases.

- Real-Time Analytics:

- Stream and transform data from sources like Kinesis or Kafka for immediate insights.

- Machine Learning Preparation:

- Prepare and clean datasets for machine learning models in Amazon SageMaker or other platforms.

- Data Discovery:

- Automatically identify and catalog data from diverse sources for easy querying and exploration.

- Regulatory Compliance:

- Ensure data quality, lineage, and transformations for regulatory reporting and audits.

- Application Data Integration:

- Transform and integrate application data from databases, APIs, and other sources into a unified format.

Integration with AWS Services Integrated With:

- Amazon S3: Primary storage for raw and transformed data.

- Amazon Redshift: Load data into Redshift for data warehousing and analytics.

- Amazon Athena: Query cataloged data directly from the Glue Data Catalog.

- AWS Lake Formation: Enhanced security and governance for data lakes.

- Amazon DynamoDB: Transform and process DynamoDB tables.

- Amazon RDS and Aurora: Extract and transform data from relational databases.

- Amazon SageMaker: Prepare data for machine learning workflows.

- Amazon Kinesis and Kafka: Stream real-time data for transformation and storage. Not Integrated With:

- Standalone EC2 instances unless used with custom configurations to pull data into Glue-supported formats.

- On-premises systems without using Glue connectors or custom scripts.

Governance and Security

- IAM Policies:

- Use IAM roles to manage access to Glue resources and associated services like S3, DynamoDB, and Redshift.

- Encryption:

- Data can be encrypted at rest using AWS KMS and in transit using SSL/TLS.

- Auditing and Logging:

- Monitor Glue activities through AWS CloudTrail and detailed logs in CloudWatch.

- Data Governance:

- Integrated with AWS Lake Formation for managing fine-grained access control.

- Data Lineage:

- Trace the flow of data and transformations for compliance and debugging.

Benefits

- Ease of Use:

- Automates the tedious aspects of data preparation, freeing up developer time.

- Scalable:

- Handles large datasets with automatic scaling of resources.

- Cost-Effective:

- Pay-as-you-go pricing ensures costs scale with usage, making it affordable for varying workloads.

- Flexibility:

- Supports diverse data formats and integrates with a wide range of AWS services.

- Developer Productivity:

- Provides tools like Glue Studio and DataBrew to simplify ETL and data preparation.

- Real-Time Processing:

- Supports streaming data transformations for immediate use cases.

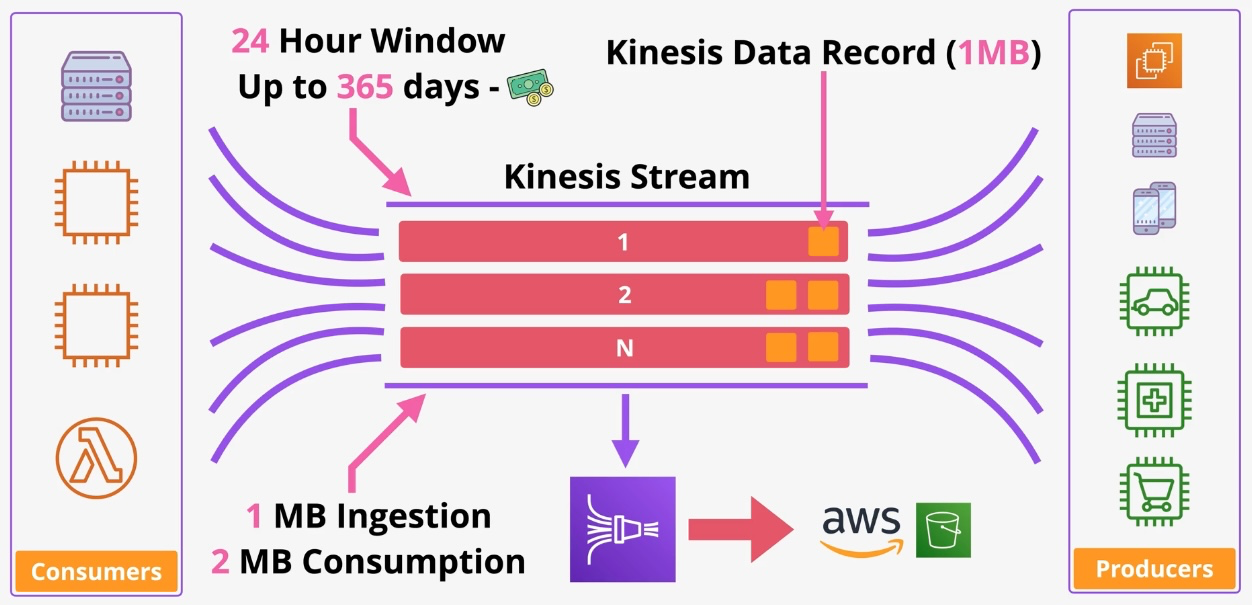

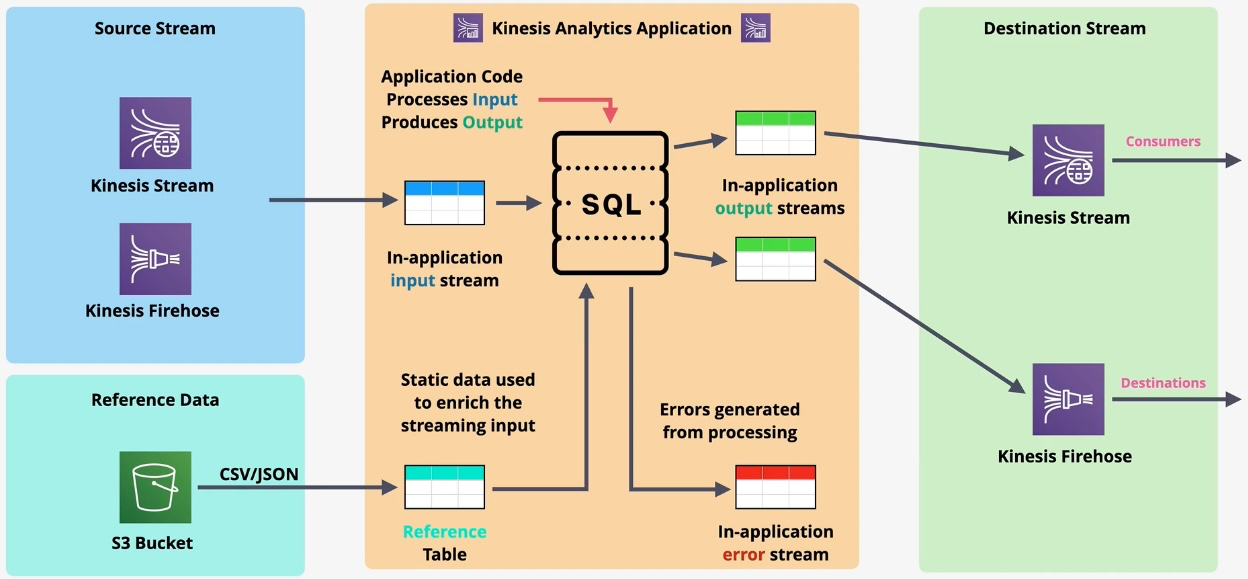

Amazon Kinesis

- Type: Real-Time Data Streaming

- Description: Enables real-time processing of streaming data at scale.

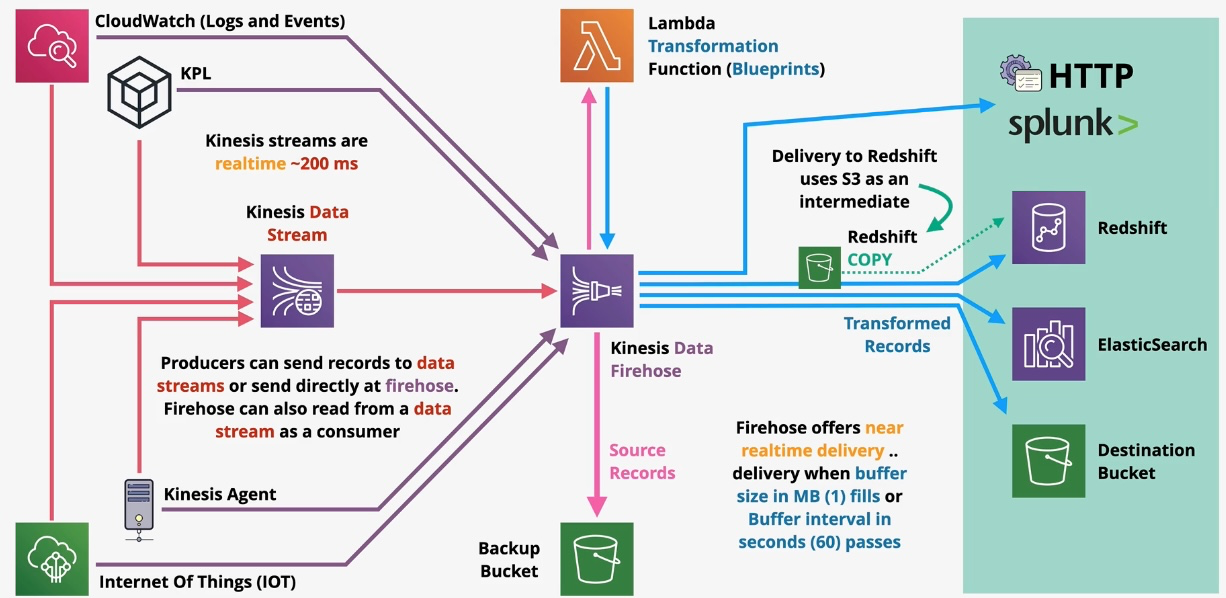

- Subtypes:

- Kinesis Data Streams: Real-time data ingestion

- Kinesis Data Firehose: Data delivery to destinations like S3, Redshift, and Elasticsearch

- Kinesis Data Analytics: Real-time data processing using SQL

- Use Cases:

- Real-time analytics

- Log and event data collection

- Streaming data pipelines

Additional Features:

- Data Retention: Keep data for up to 7 days for replay.

- Integration: Works seamlessly with Lambda, S3, and Redshift. Governance and Security:

- Control access to streams using IAM roles and resource policies.

- Encrypt data streams with KMS-managed keys. Examples:

- Real-time monitoring of social media trends.

AWS Lake Formation

- Type: Data Lake Management

- Description: Simplifies the process of building, securing, and managing data lakes, allowing for centralized storage and analysis of diverse data.

- Use Cases:

- Centralized data repository

- Data governance and security

- Simplified data ingestion and cataloging

Amazon Managed Streaming for Apache Kafka (Amazon MSK)

- Type: Managed Kafka Service

- Description: Fully managed service that makes it easy to build and run applications that use Apache Kafka for streaming data.

- Use Cases:

- Real-time data streaming

- Event sourcing

- Log aggregation

Amazon OpenSearch Service (formerly Elasticsearch Service)

- Type: Managed Search and Analytics

- Description: Provides a managed service for deploying, operating, and scaling OpenSearch (and legacy Elasticsearch) clusters in the AWS Cloud.

- Use Cases:

- Log and event data analysis

- Full-text search

- Real-time application monitoring

Amazon QuickSight

- Type: Business Intelligence Tool

- Description: Cloud-powered business analytics service that makes it easy to deliver insights to everyone in your organization.

- Use Cases:

- Interactive dashboards

- Ad-hoc analysis

- Embedded analytics

Amazon Redshift

- Type: Data Warehousing

- Description: Fully managed, petabyte-scale data warehouse service in the cloud.

- Use Cases:

- Complex query processing

- Business intelligence

- Data warehousing Additional Features:

- Materialized Views: Speed up queries by precomputing results.

- Spectrum: Query S3 data without loading it into Redshift. Governance and Security:

- Use role-based access controls (RBAC) for granular permissions.

- Audit access with CloudTrail integration. Examples:

- Perform complex OLAP queries for e-commerce reporting.

Application Integration

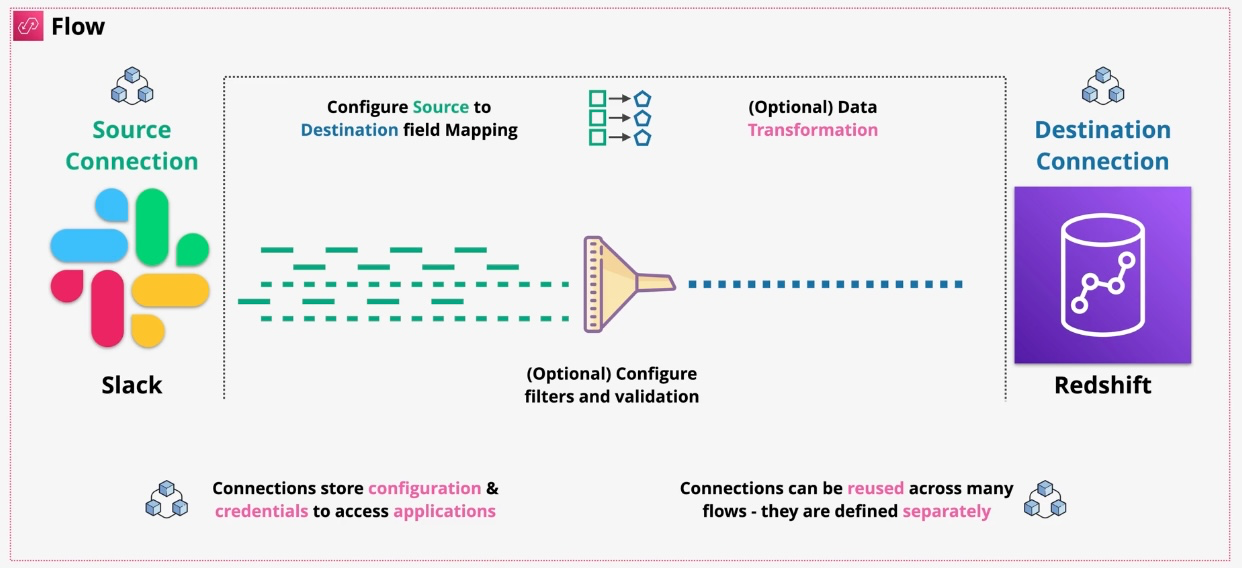

Amazon AppFlow

- Type: SaaS Integration

- Description: Enables secure data transfer between AWS services and SaaS applications like Salesforce, ServiceNow, and others without writing custom code.

- Use Cases:

- Data synchronization between SaaS and AWS

- Automated data workflows

- Integrating third-party applications with AWS services

Additional Features:

- Data Filtering: Transfer only necessary records using filters.

- Custom Mappings: Map source fields to destination fields. Governance and Security:

- Encrypt data in transit and at rest.

- Use private links to transfer data securely without internet exposure. Examples:

- Sync Salesforce contacts to S3 for advanced analytics.

AWS AppSync

- Type: Managed GraphQL Service

- Description: Simplifies application development by providing a flexible API to securely access, manipulate, and combine data from multiple sources.

- Use Cases:

- Real-time applications

- Offline data access

- Mobile and web application backends Additional Features:

- Express Workflows: Ideal for high-frequency, short-duration processes.

- Visual Workflow Designer: Drag-and-drop UI to simplify workflow creation. Governance and Security:

- Secure workflows with IAM role-based access.

- Monitor executions with CloudWatch Logs. Examples:

- Automate multi-step approval processes.

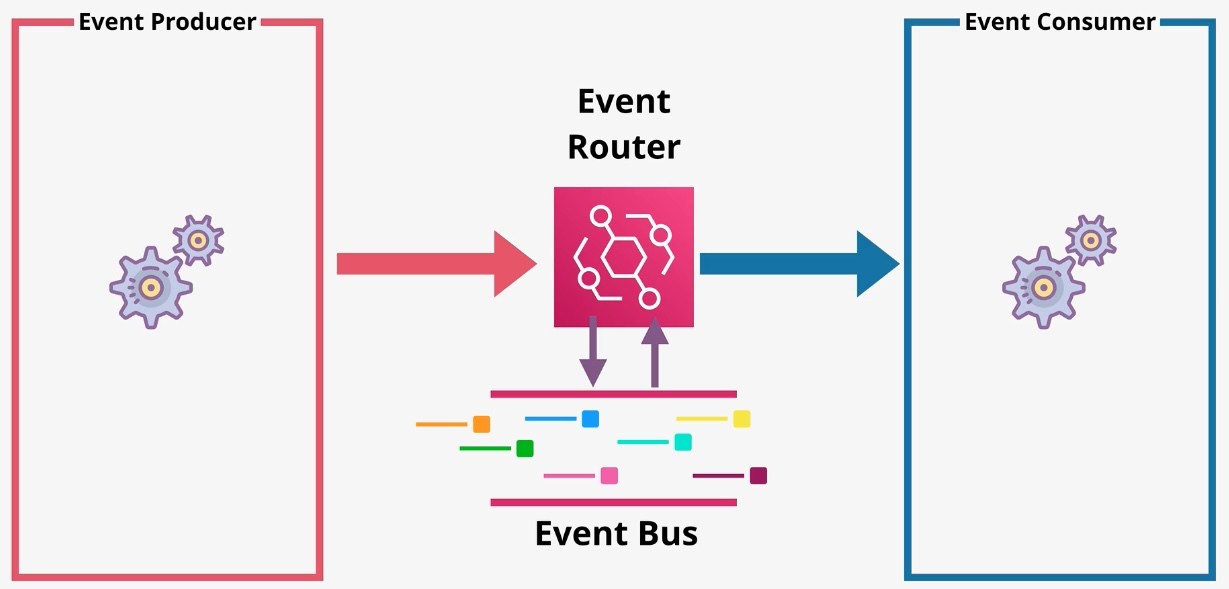

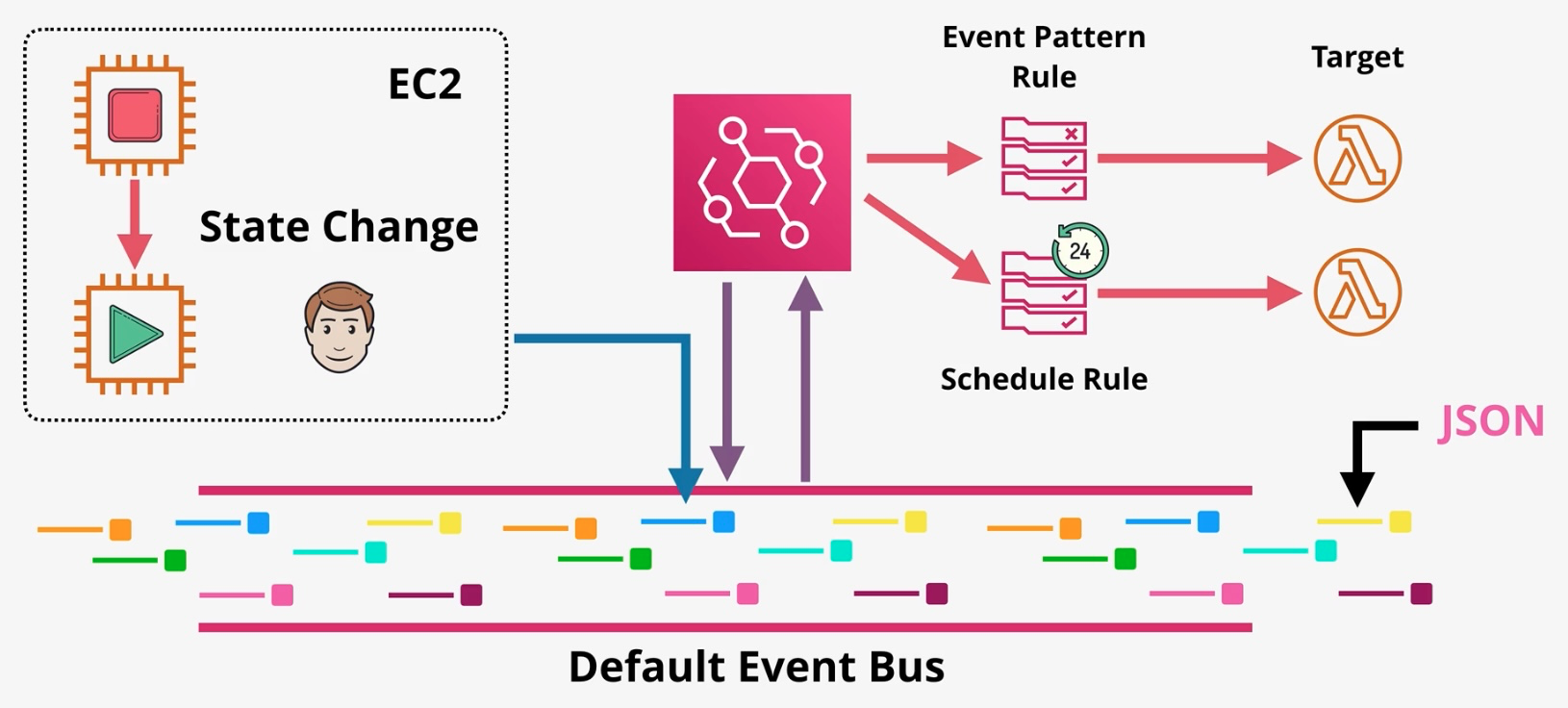

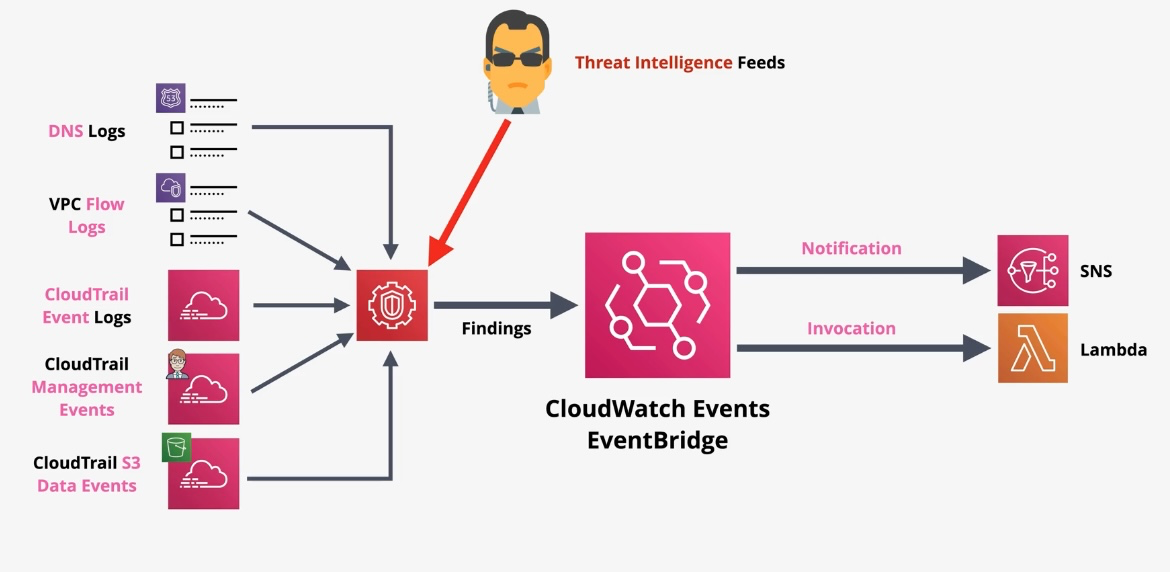

Amazon EventBridge

- Type: Serverless Event Bus

- Description: Amazon EventBridge is a serverless event bus service that allows you to connect your applications with event data from various AWS services, third-party SaaS applications, or custom sources. EventBridge enables event-driven architectures by facilitating real-time data transfer and triggering actions across systems without the need for manual integration or polling mechanisms. Key Features

- Event Bus:

- Centralized event routing system for handling events from AWS services, third-party SaaS applications, and custom event sources.

- Event Filtering:

- Apply rules to filter and process events based on defined patterns, enabling targeted actions.

- Event Archiving and Replay:

- Automatically archives events for later reprocessing or troubleshooting.

- Schema Registry:

- Central repository for managing and discovering event schemas, supporting schema versioning and validation.

- Cross-Account Event Sharing:

- Share events across multiple AWS accounts securely using resource policies.

- Third-Party Integration:

- Native integration with SaaS applications like Zendesk, Shopify, and Datadog.

- Custom Event Sources:

- Publish custom application events directly to EventBridge for further processing.

- Scalability:

- Automatically scales to handle millions of events per second.

- Low Latency:

- Processes and delivers events in near real-time, ensuring timely execution of workflows.

Components

-

Event Bus:

-

Default Bus: Captures events from AWS services by default.

-

Custom Bus: Allows for segregating event sources for different applications or services.

-

Partner Bus: Integrates with supported SaaS applications.

-

Rules:

-

Define how events are processed and routed to one or more targets.

-

Targets:

-

Services or resources that receive the events, such as Lambda, SQS, SNS, Step Functions, or Kinesis.

-

Schema Registry:

-

Stores event schemas and enables event schema discovery for producers and consumers.

-

Event Archive:

-

Retains events for replay or debugging with configurable retention policies.

-

Use Cases:

-

Application integration

-

Real-time data processing

-

Event-driven workflows Integration with AWS Services Integrated With:

-

AWS Lambda: Trigger serverless functions for event-driven processing.

-

Amazon SQS: Queue events for asynchronous processing.

-

Amazon SNS: Distribute notifications or trigger downstream actions.

-

AWS Step Functions: Orchestrate workflows triggered by events.

-

Amazon Kinesis: Process real-time data streams for analytics.

-

Amazon ECS/EKS: Launch tasks or pods in response to events.

-

AWS CloudWatch Logs: Generate events based on log patterns for monitoring and automation.

-

Amazon API Gateway: Route external events to EventBridge for processing.

-

AWS Glue: Trigger ETL workflows upon data updates. Not Directly Integrated With:

-

Standalone databases like RDS or DynamoDB unless events are routed through another trigger like Lambda or DynamoDB Streams.

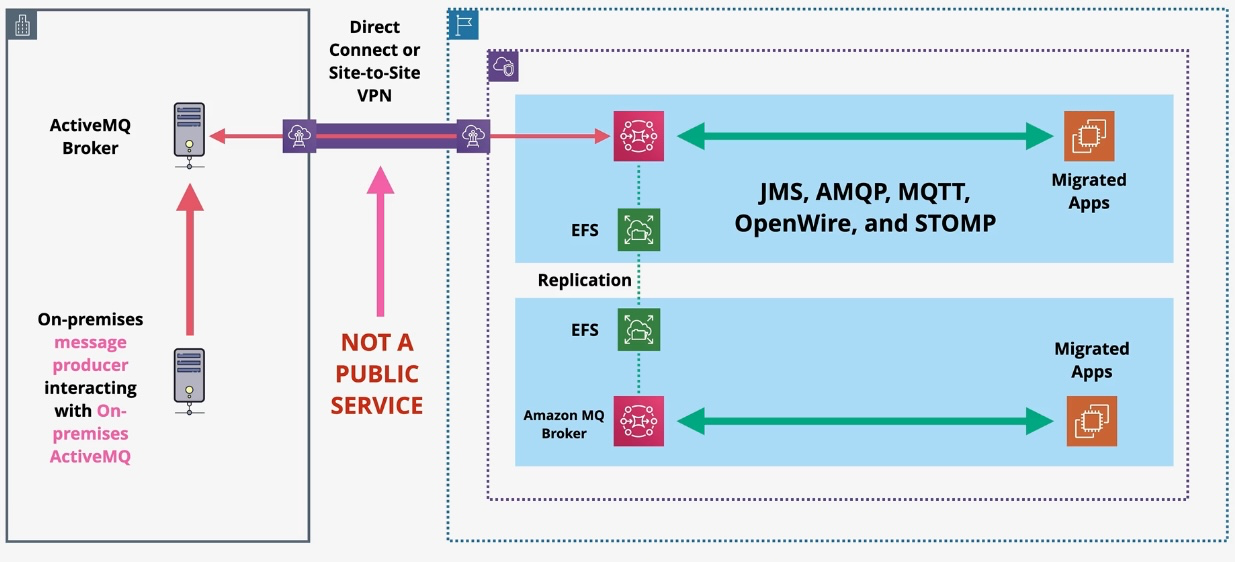

Amazon MQ

- Type: Managed Message Broker Service

- Description: Provides a managed message broker service for Apache ActiveMQ and RabbitMQ, facilitating the migration of messaging workloads to AWS.

- Use Cases:

- Messaging between distributed systems

- Application decoupling

- Legacy application integration

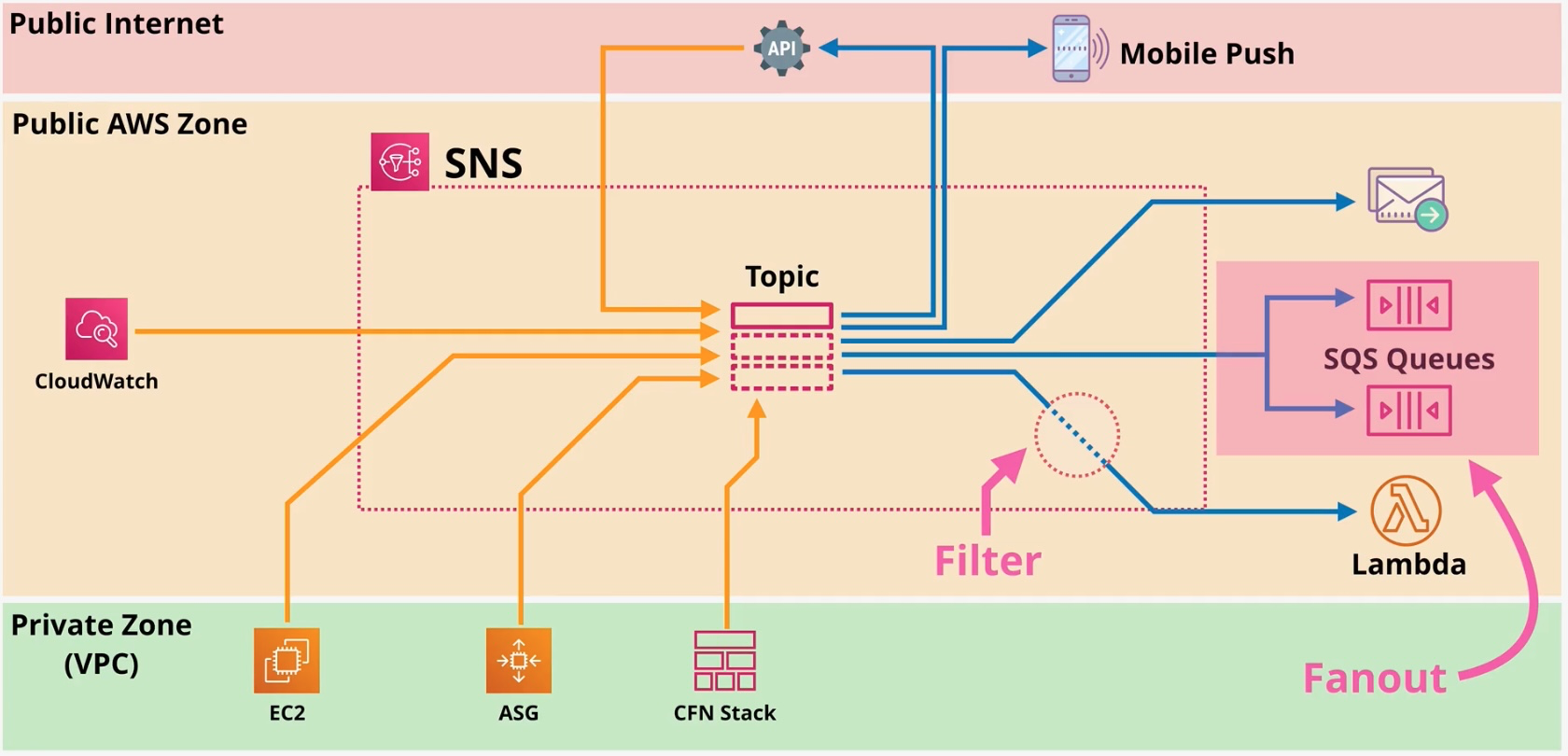

Amazon Simple Notification Service (SNS)

- Type: Pub/Sub Messaging Service

- Description: Fully managed messaging service for both application-to-application (A2A) and application-to-person (A2P) communication.

- Use Cases:

- Sending notifications

- Fan-out message delivery

- Event-driven computing

Amazon Simple Queue Service (SQS)

Type: Managed Message Queue Service Description: Amazon SQS is a fully managed message queuing service that enables decoupling and scaling of distributed systems, microservices, and serverless applications. It allows asynchronous communication between application components by securely transmitting messages via queues, ensuring reliable and scalable operations.

Key Features

- Message Queues:

- Store messages in a queue for processing by consumers, enabling asynchronous communication.

- Two Queue Types:

- Standard Queue: Provides at-least-once message delivery and best-effort ordering.

- FIFO Queue (First-In-First-Out): Ensures exactly-once processing and message order consistency.

- Scalability:

- Scales automatically to handle high-throughput workloads.

- Serverless Architecture:

- Fully managed with no infrastructure to provision or manage.

- Message Retention:

- Messages can be retained for up to 14 days.

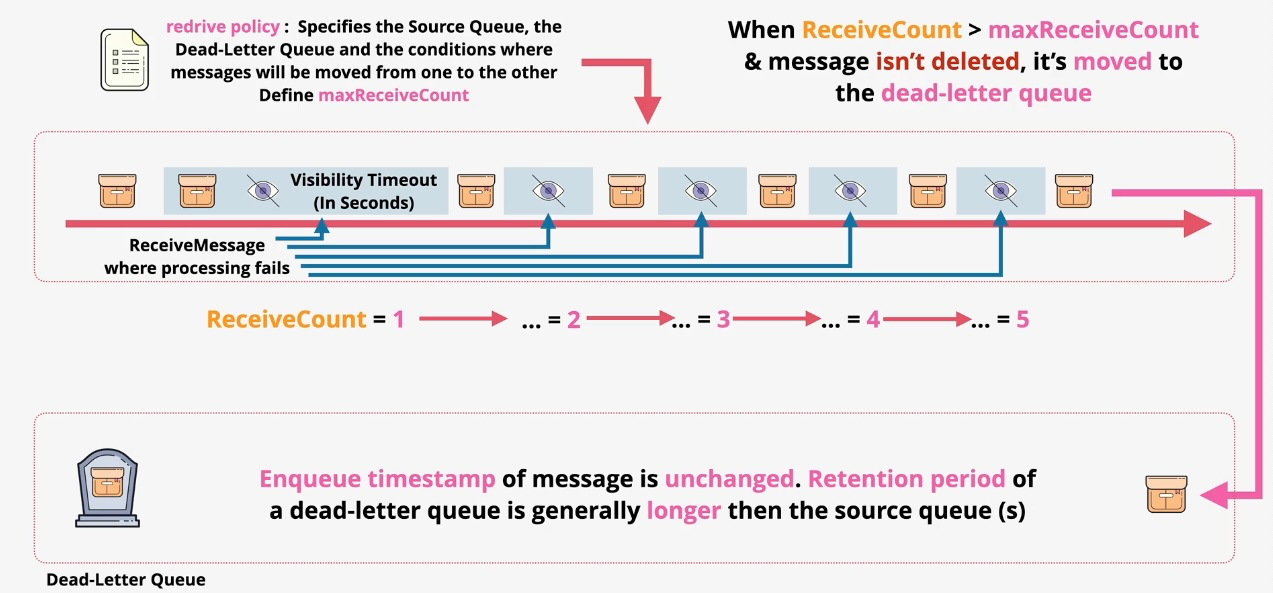

- Dead-Letter Queues (DLQs):

- Store undeliverable messages for troubleshooting.

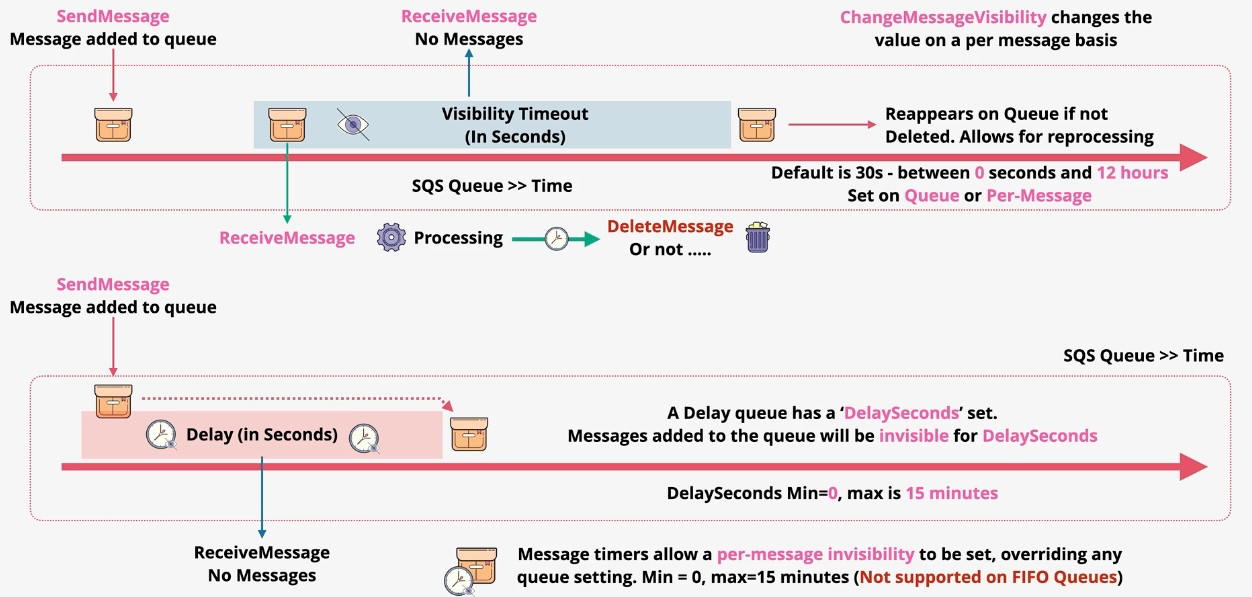

- Message Visibility Timeout:

- Prevents messages from being processed multiple times by hiding them from other consumers during processing.

- Delay Queues:

- Delay delivery of messages by a specified duration.

- Long Polling:

- Reduce empty responses by waiting for messages to be available in the queue.

- Encryption:

- Supports encryption at rest using AWS Key Management Service (AWS KMS).

Components

- Producers:

- Applications or services that send messages to SQS queues.

- Queue:

- Stores messages temporarily until consumed.

- Consumers:

- Applications or services that retrieve and process messages from the queue.

- Dead-Letter Queue (DLQ):

- Configurable queue for storing failed messages.

- Attributes:

- Metadata associated with each queue or message, such as retention period, visibility timeout, or delay duration.

Queue Types

- Standard Queue:

- High throughput and low latency.

- At-least-once delivery with potential duplication of messages.

- Best-effort ordering (not guaranteed).

- FIFO Queue:

- Exactly-once delivery.

- Preserves the order of message processing.

- Limited throughput compared to standard queues (300 transactions per second with batching, or 3000 with high-throughput mode).

Use Cases

- Decoupling Microservices:

- Ensure independent scalability and failure isolation between application components.

- Load Leveling:

- Buffer requests during high traffic to ensure backend systems process them at a steady pace.

- Serverless Applications:

- Trigger AWS Lambda functions to process messages in SQS.

- Job Queues:

- Manage long-running tasks or background jobs asynchronously.

- Message Offloading:

- Offload temporary or bulk messages for later processing.

- Batch Processing:

- Combine multiple messages for efficient consumption and processing.

- Event-Driven Architectures:

- Trigger downstream workflows based on event-driven messages.

- Dead-Letter Handling:

- Troubleshoot and manage failed message deliveries using DLQs.

Integration with AWS Services Integrated With:

- AWS Lambda: Trigger Lambda functions for event-driven processing.

- Amazon SNS: Fan-out messages to multiple SQS queues for parallel processing.

- Amazon EC2: Process messages in distributed systems running on EC2 instances.

- AWS Step Functions: Orchestrate workflows that include SQS as a task.

- AWS KMS: Encrypt messages at rest for enhanced security.

- Amazon CloudWatch: Monitor queue metrics and set alarms for visibility and debugging.

- AWS IAM: Control access and permissions for producers and consumers. Not Directly Integrated With:

- Direct database triggers without intermediary services like Lambda or custom scripts.

Governance and Security

- IAM Policies:

- Manage fine-grained access to queues for producers and consumers.

- Encryption:

- Use AWS KMS for encryption at rest.

- Messages in transit are encrypted using SSL/TLS.

- Auditing:

- Log message activity and access through AWS CloudTrail.

- Visibility Timeout:

- Prevent multiple consumers from processing the same message.

- Dead-Letter Queues (DLQs):

- Isolate and debug failed messages for improved reliability.

Benefits

- Fully Managed:

- Offload operational overhead of managing message queues.

- Scalability:

- Automatically scales to accommodate any workload size.

- Reliability:

- High availability and durability with at-least-once message delivery.

- Cost-Effective:

- Pay-per-use pricing ensures costs scale with usage.

- Flexibility:

- Supports both standard and FIFO queues for diverse use cases.

- Ease of Integration:

- Seamless integration with AWS services and APIs for custom workflows.

- Operational Resilience:

- Buffering mechanisms help absorb traffic spikes without service disruption.

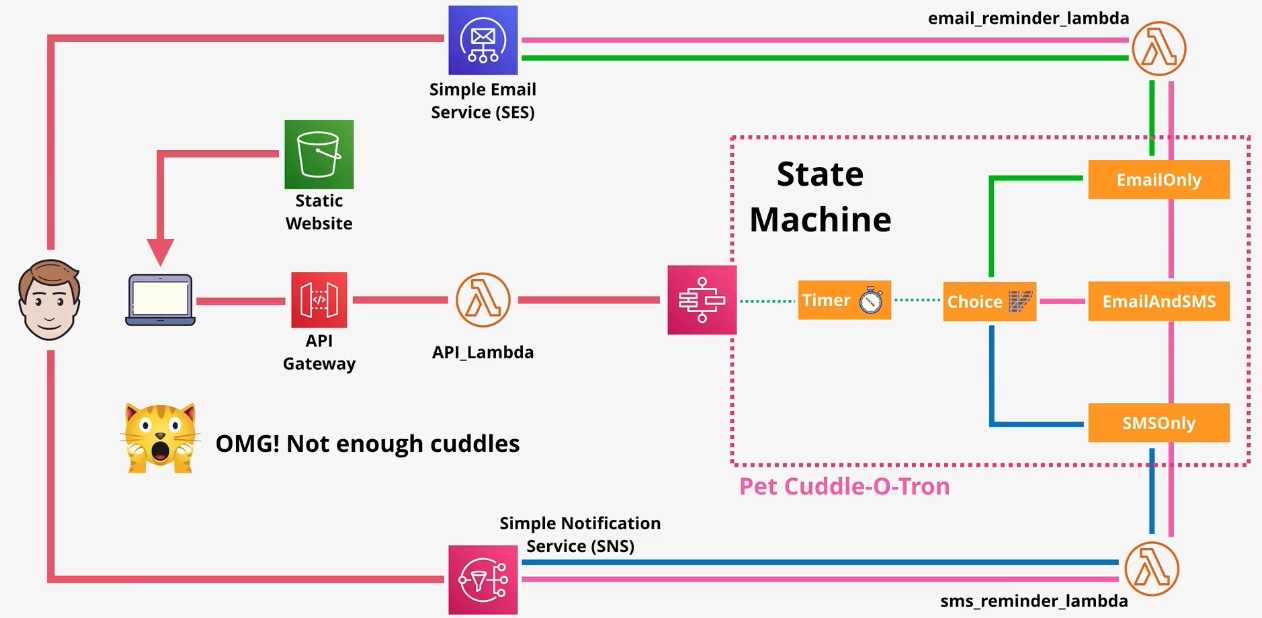

AWS Step Functions

- Type: Serverless Orchestration Service

- Description: Enables the coordination of multiple AWS services into serverless workflows, simplifying the development and execution of multi-step applications.

- Use Cases:

- Building data processing pipelines

- Orchestrating microservices

- Automating IT and business processes

AWS Cost Management

AWS Cost and Usage Report

- Type: Billing and Usage Analytics

- Description: Provides the most detailed information about AWS usage and costs, delivered to an S3 bucket.

- Use Cases:

- Deep cost analysis

- Custom billing reports

- Integration with analytics tools like Amazon Athena or Amazon Redshift

AWS Cost Explorer

- Type: Cost Visualization Tool

- Description: Allows users to visualize, understand, and manage AWS costs and usage over time.

- Use Cases:

- Cost trend analysis

- Budget forecasting

- Cost optimization insights Additional Features:

- Savings Plans Recommendations: Optimize compute costs with custom recommendations.

- Tag-Based Filtering: Analyze costs by department or project. Governance and Security:

- Restrict access to billing data with IAM policies. Examples:

- Forecast costs for the next billing cycle to ensure budget adherence.

Savings Plans

- Type: Flexible Pricing Model

- Description: Offers significant savings over On-Demand pricing in exchange for a commitment to use a specific amount of compute power over one or three years.

- Use Cases:

- Cost savings for predictable workloads

- Optimizing compute costs

- Running EC2, Fargate, and Lambda workloads

Compute

AWS Batch

- Type: Batch Processing Service

- Description: Efficiently runs hundreds to thousands of batch computing jobs by dynamically provisioning the optimal quantity and type of compute resources.

- Use Cases:

- High-throughput data processing

- Scientific simulations

- Media transcoding

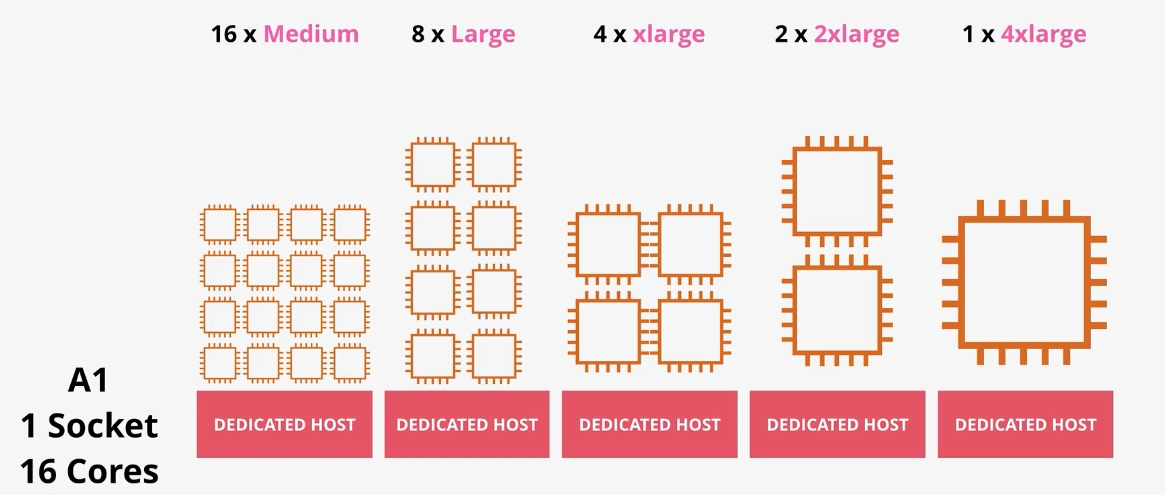

Amazon EC2 (Elastic Compute Cloud)

- Type: Scalable Virtual Servers

- Subtypes:

- On-Demand Instances: Pay-as-you-go pricing for short-term needs.

- Spot Instances: Cost-effective for non-critical workloads.

- Reserved Instances: Lower pricing for committed usage.

- Savings Plan Instances: Flexible commitment-based pricing.

- Use Cases:

- Hosting applications and websites

- Running large-scale distributed systems

- Machine learning model training

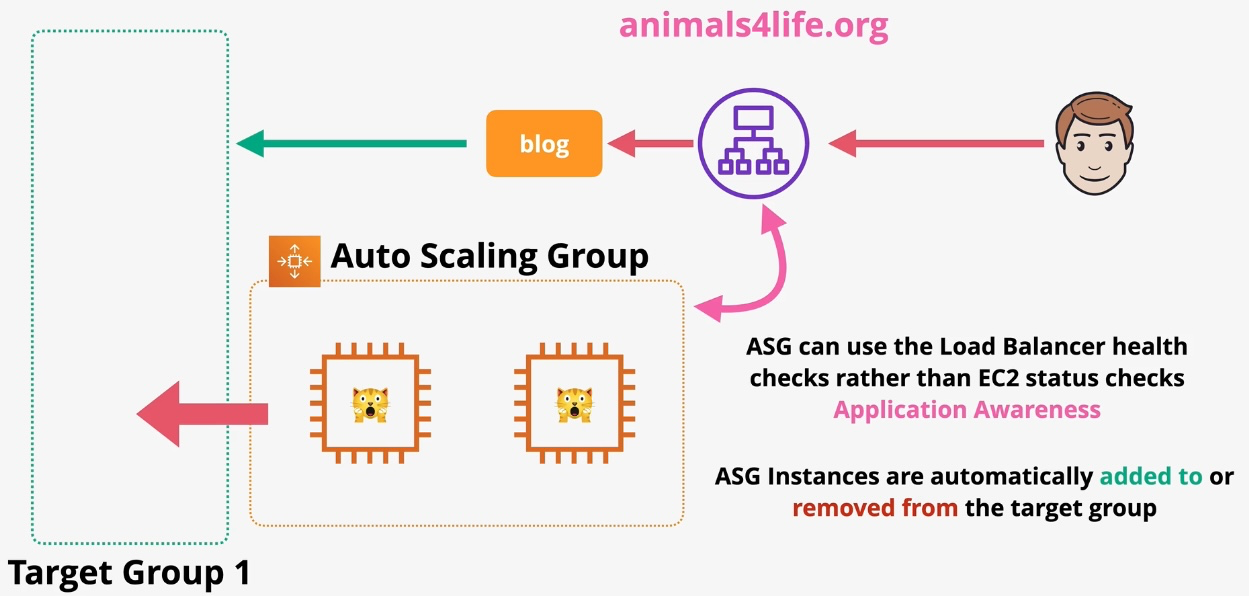

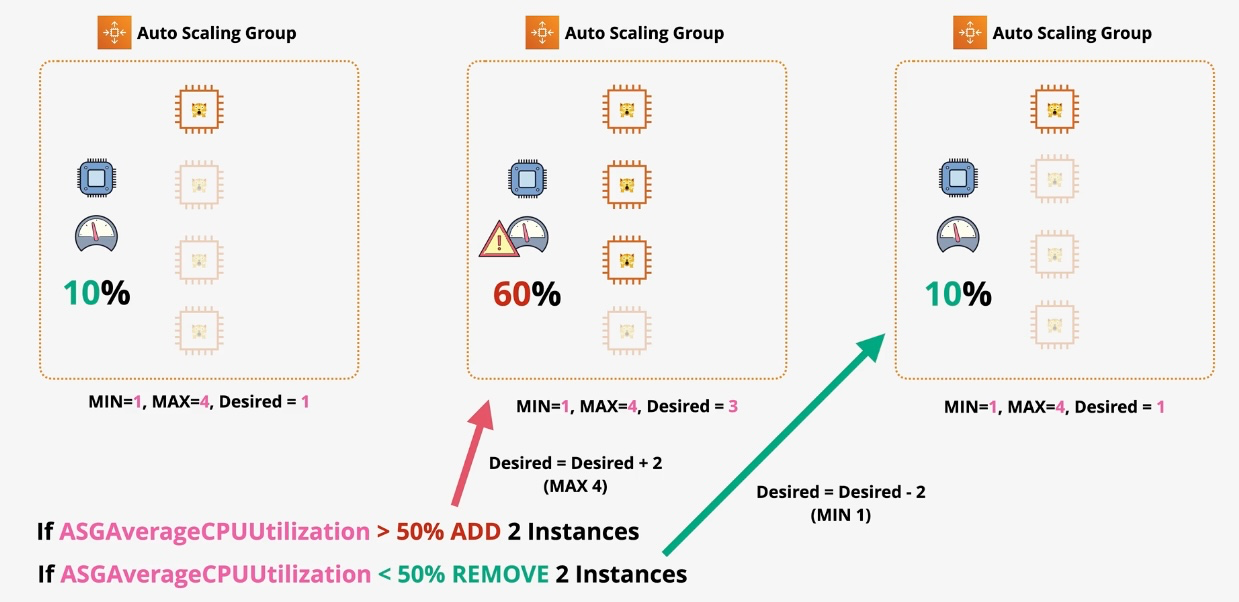

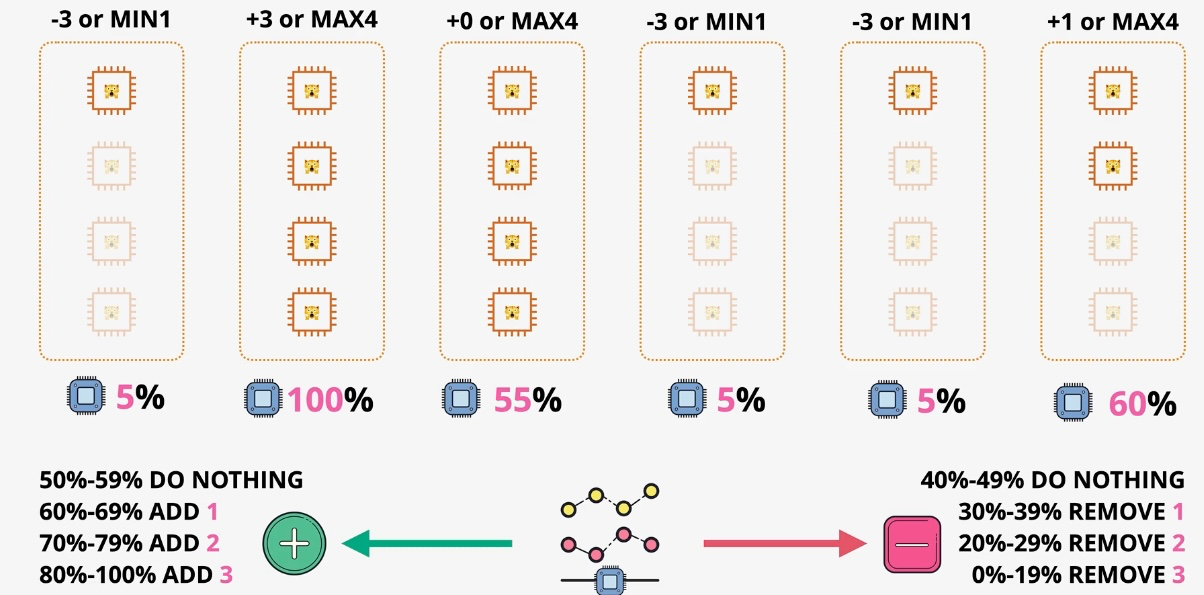

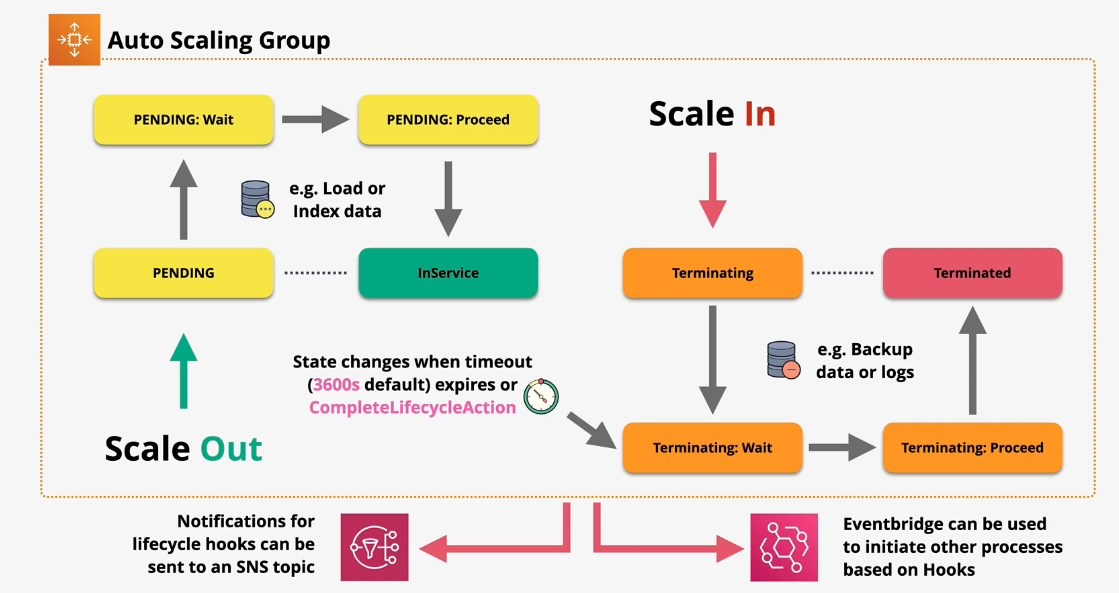

Amazon EC2 Auto Scaling

- Type: Dynamic Scaling

- Description: Automatically adjusts the number of EC2 instances based on demand.

- Use Cases:

- Maintaining application availability

- Cost optimization by scaling down during low usage

AWS Elastic Beanstalk

- Type: PaaS (Platform as a Service)

- Description: Simplifies deployment and management of applications by automatically handling capacity provisioning, load balancing, and scaling.

- Use Cases:

- Deploying web applications

- Auto-scaling applications

- Rapid environment setup for developers

AWS Outposts

- Type: Hybrid Cloud Service

- Description: Extends AWS services to on-premises data centers.

- Use Cases:

- Low-latency applications

- Hybrid cloud architectures

- On-premises data processing

AWS Serverless Application Repository

- Type: Repository for Serverless Applications

- Description: Enables users to discover, deploy, and share serverless applications.

- Use Cases:

- Quickly deploying prebuilt serverless applications

- Sharing serverless solutions across teams

- Accelerating development

VMware Cloud on AWS

- Type: Hybrid Cloud Integration

- Description: Enables migration and extension of on-premises VMware environments to AWS.

- Use Cases:

- Seamless migration to the cloud

- Hybrid cloud deployments

- Disaster recovery for VMware workloads

AWS Wavelength

- Type: Edge Computing Service

- Description: Enables developers to build applications that deliver ultra-low latency to 5G devices and edge computing workloads.

- Use Cases:

- Real-time gaming

- IoT applications

- AR/VR experiences

Containers

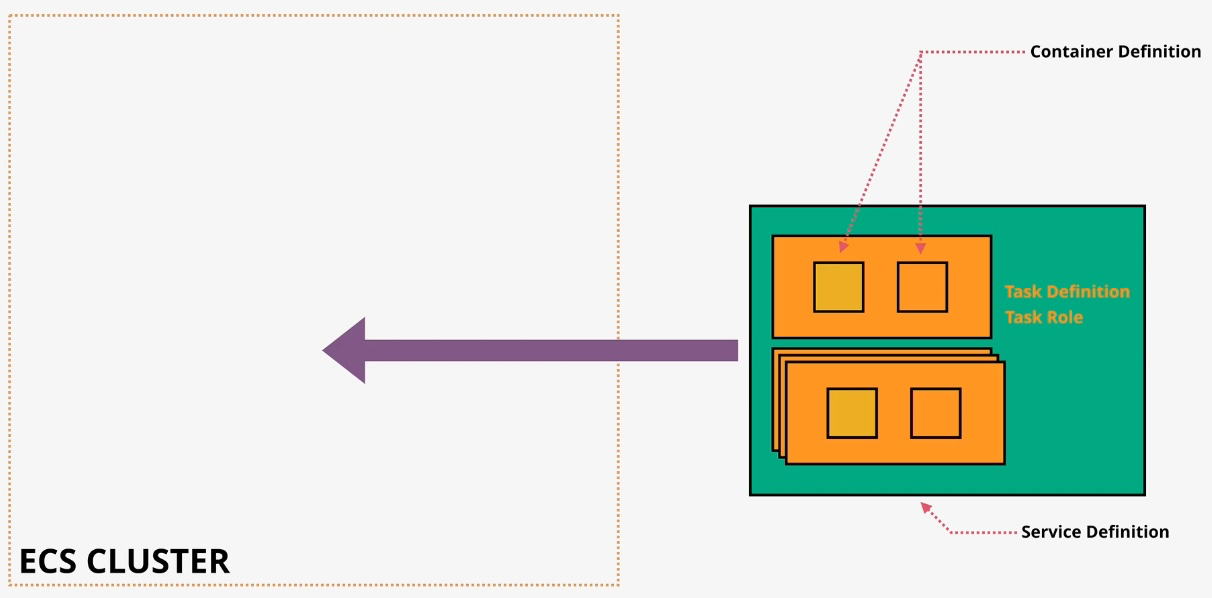

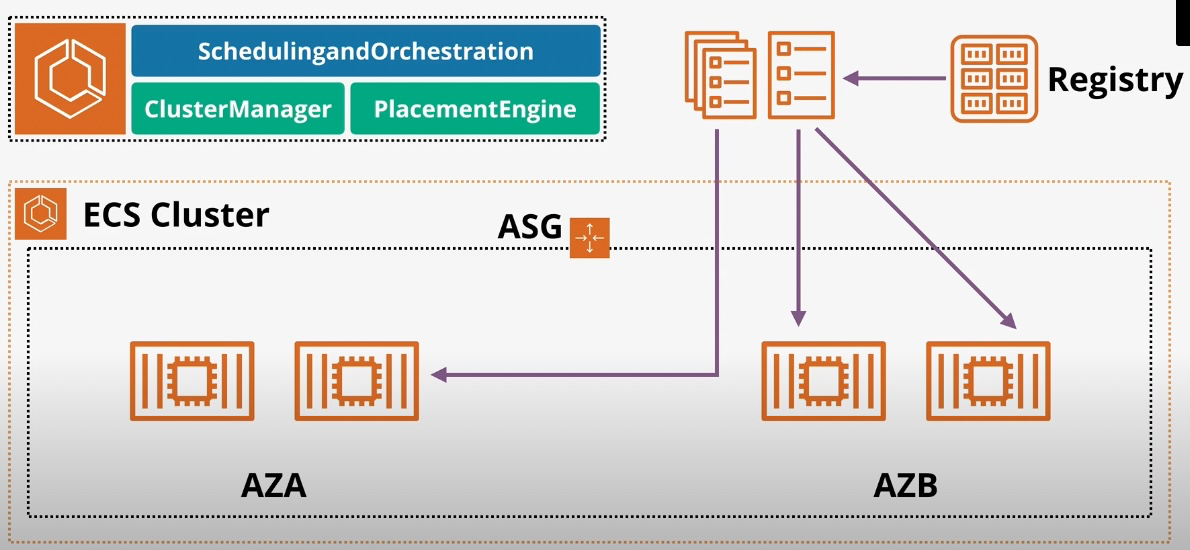

Amazon Elastic Container Service (ECS)

- Type: Managed Container Orchestration

- Subtypes:

- ECS Anywhere: Extends ECS to on-premises environments.

- Use Cases:

- Running containerized microservices

- Deploying applications across hybrid environments

- Managing containers without Kubernetes

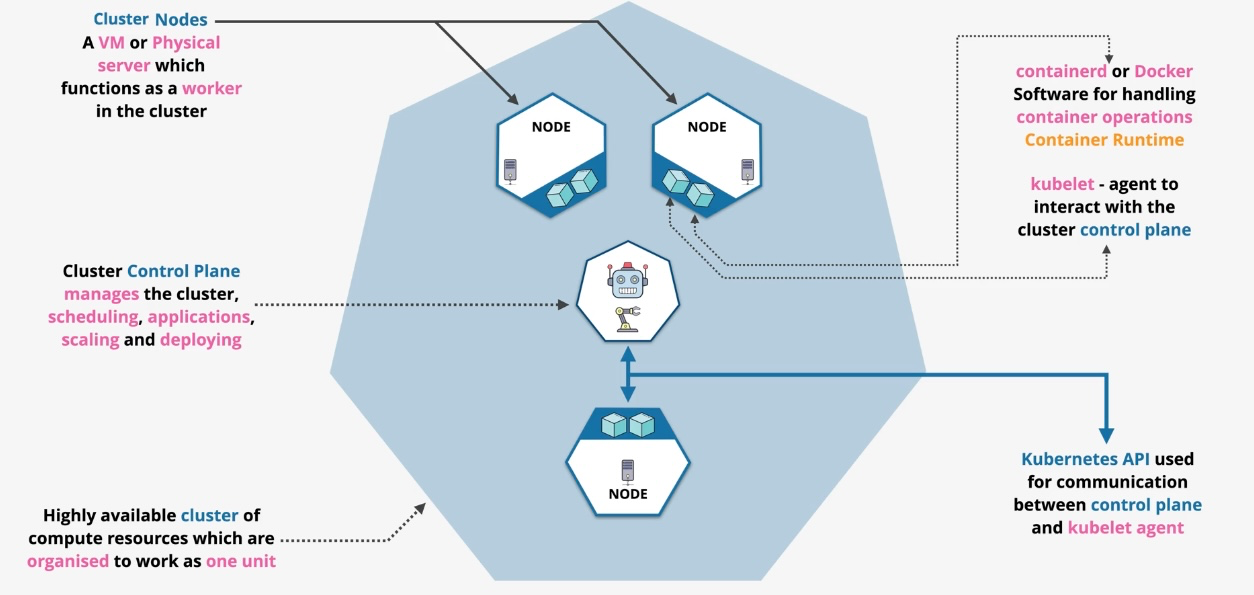

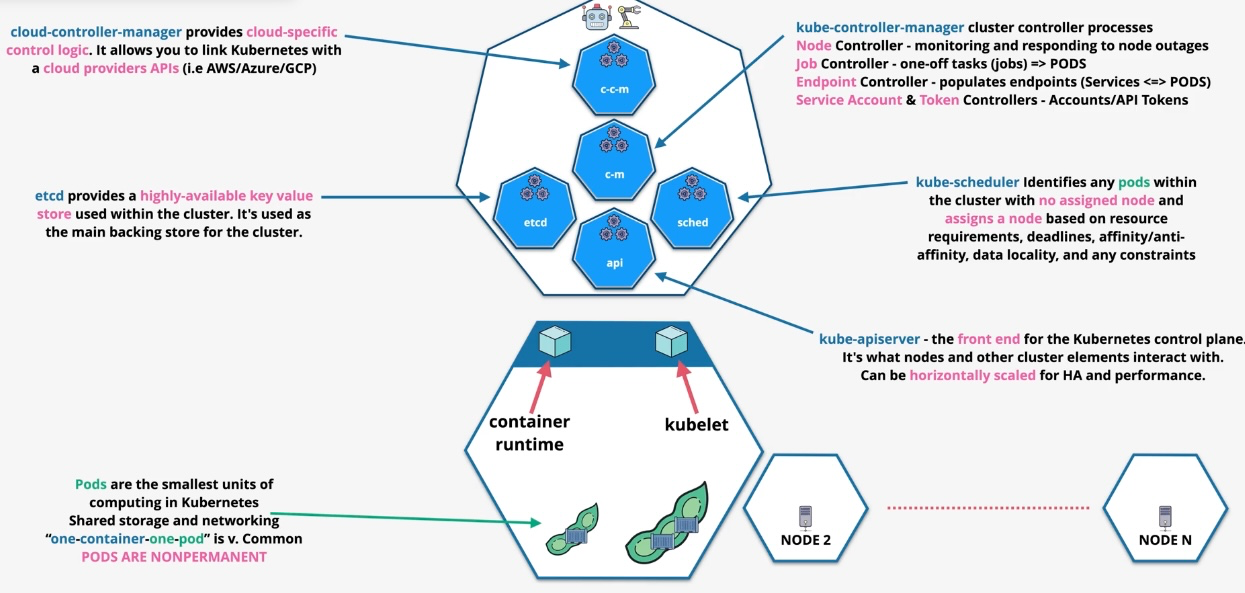

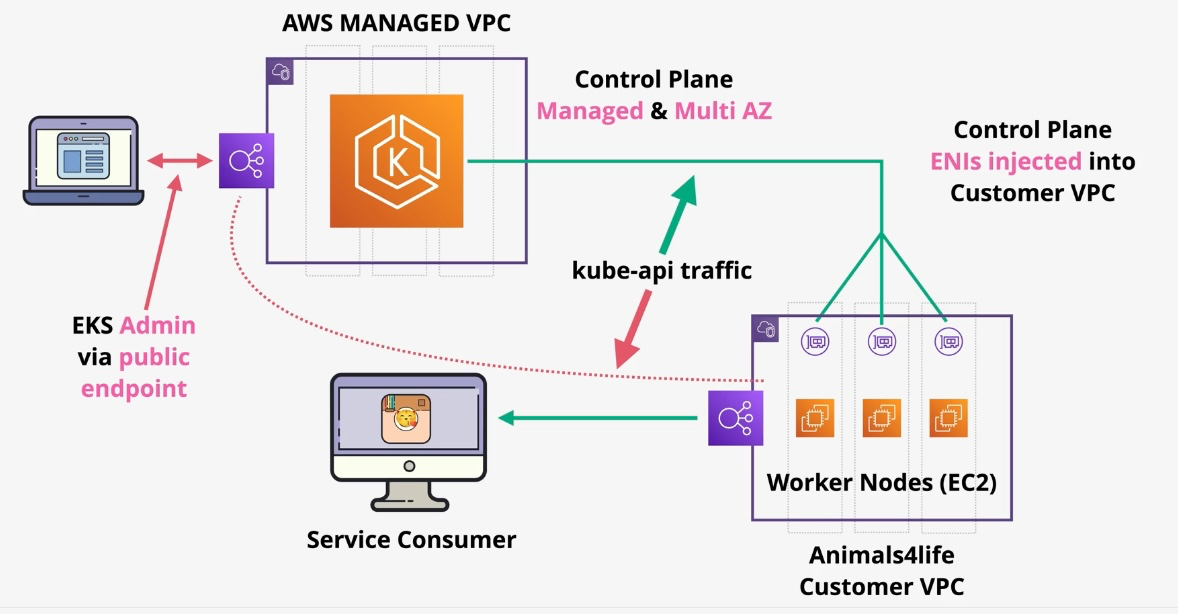

Amazon Elastic Kubernetes Service (EKS)

- Type: Managed Kubernetes Service

- Subtypes:

- EKS Anywhere: Kubernetes on-premises with AWS management.

- EKS Distro: Open-source distribution of Kubernetes.

- Use Cases:

- Deploying and managing Kubernetes clusters

- Running highly scalable containerized workloads

- Hybrid Kubernetes deployments

Amazon Elastic Container Registry (ECR)

- Type: Container Registry

- Description: Provides a secure, scalable, and reliable registry for storing Docker images.

- Use Cases:

- Storing and managing container images

- Integrating with ECS and EKS

- Simplified deployment of containerized applications

Database Services Cheat Sheet

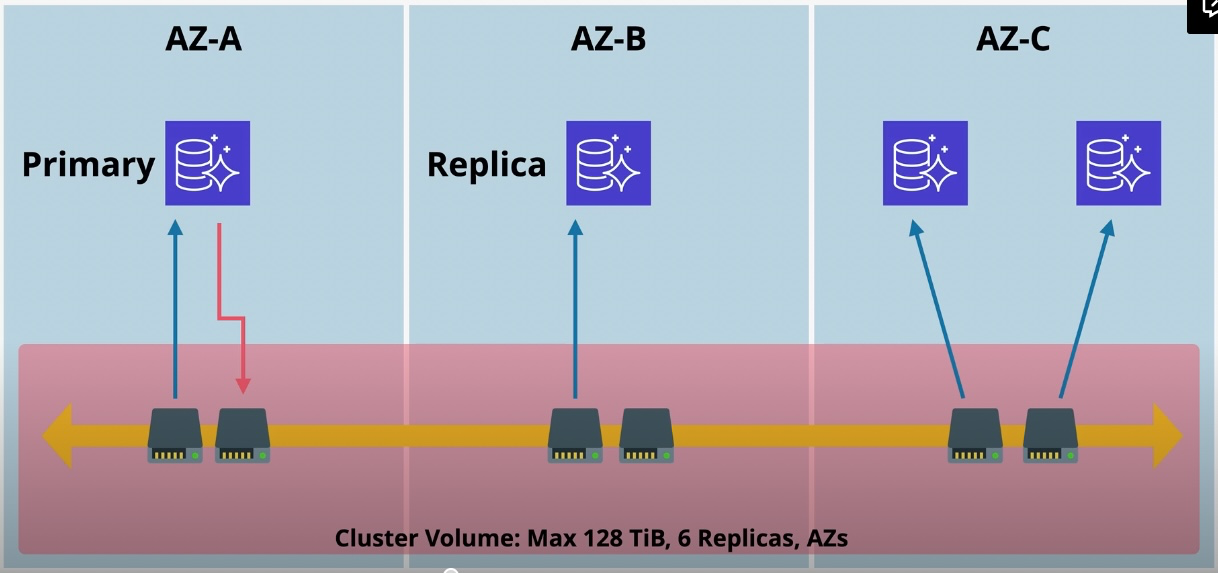

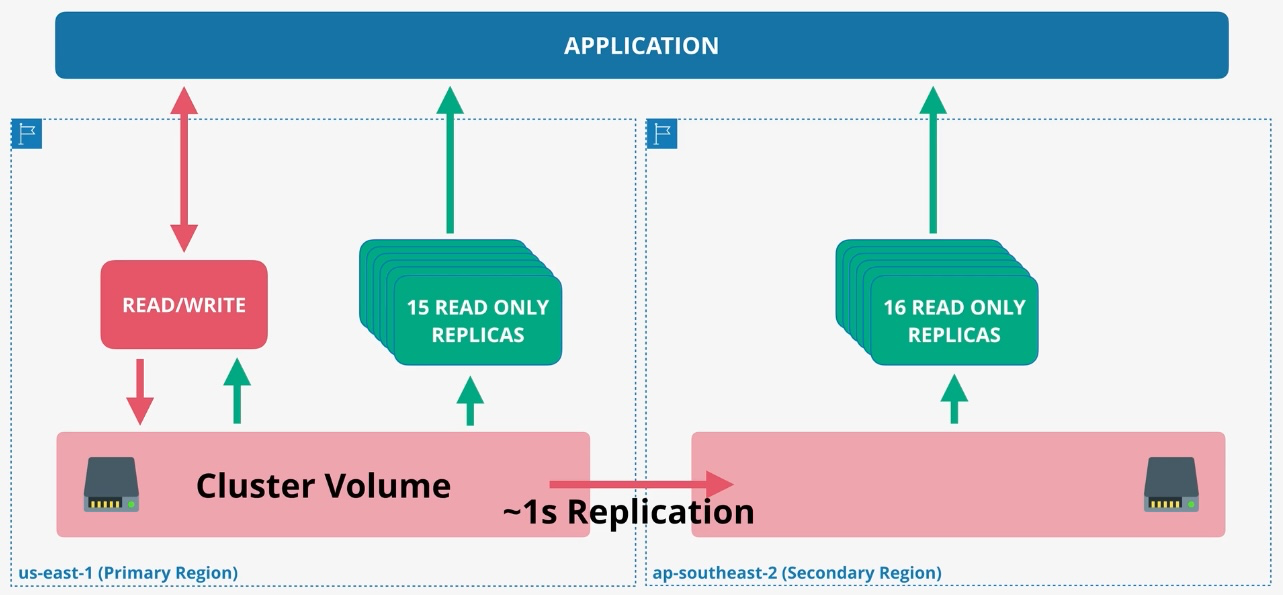

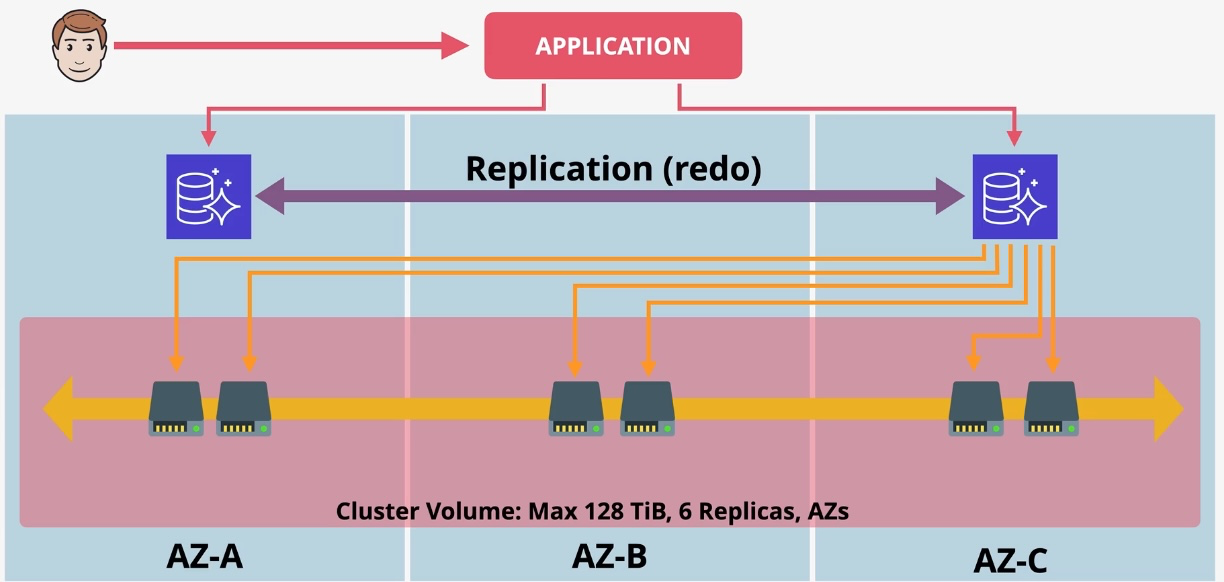

Amazon Aurora

- Type: Managed Relational Database

- Description: High-performance, fully managed relational database compatible with MySQL and PostgreSQL.

- Features:

- Distributed, fault-tolerant storage.

- Automatic backups and failover.

- Global Database for low-latency reads across regions.

- Use Cases:

- High-throughput online transaction processing (OLTP).

- Enterprise applications requiring high availability.

- E-commerce platforms and SaaS applications.

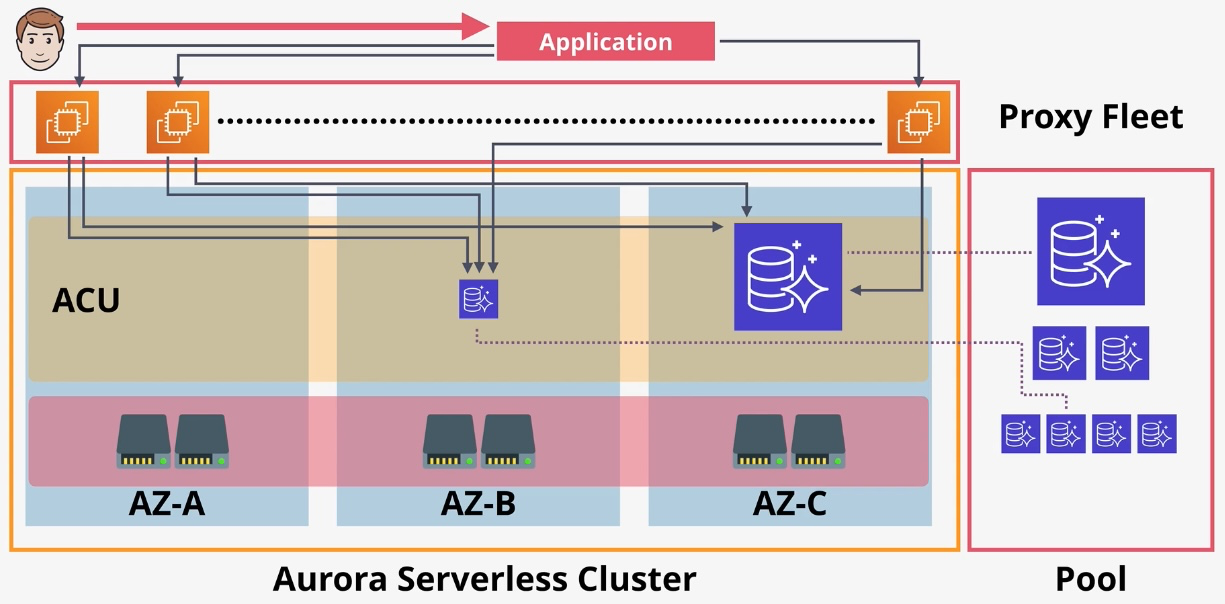

Amazon Aurora Serverless

- Type: Auto-Scaling Relational Database

- Description: Serverless version of Aurora that scales automatically based on demand.

- Features:

- Pay-per-use pricing.

- Automatic scaling to handle workload spikes.

- Compatible with MySQL and PostgreSQL.

- Use Cases:

- Applications with unpredictable traffic patterns.

- Development and testing environments.

- Cost-optimized, low-maintenance workloads.

Amazon DocumentDB (with MongoDB compatibility)

- Type: NoSQL Document Database

- Description: Managed database service designed to run MongoDB workloads.

- Features:

- Scalability with replicas and sharding.

- Fully managed backups and patching.

- High availability with multi-AZ deployments.

- Use Cases:

- Content management systems.

- Cataloging and inventory applications.

- Storing hierarchical or semi-structured data.

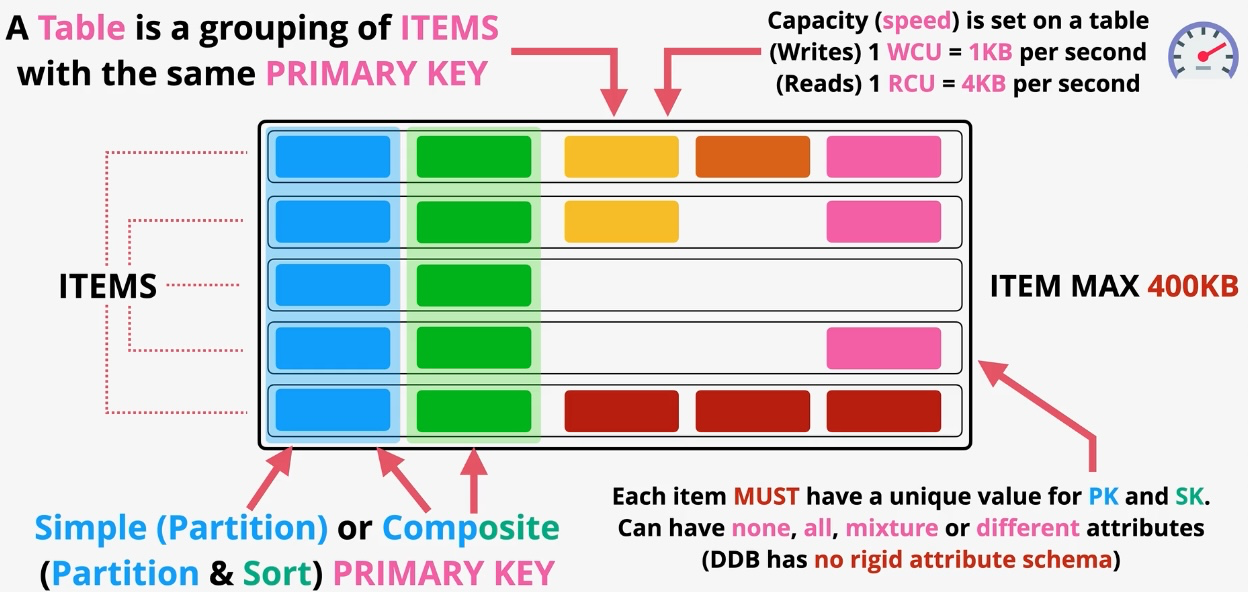

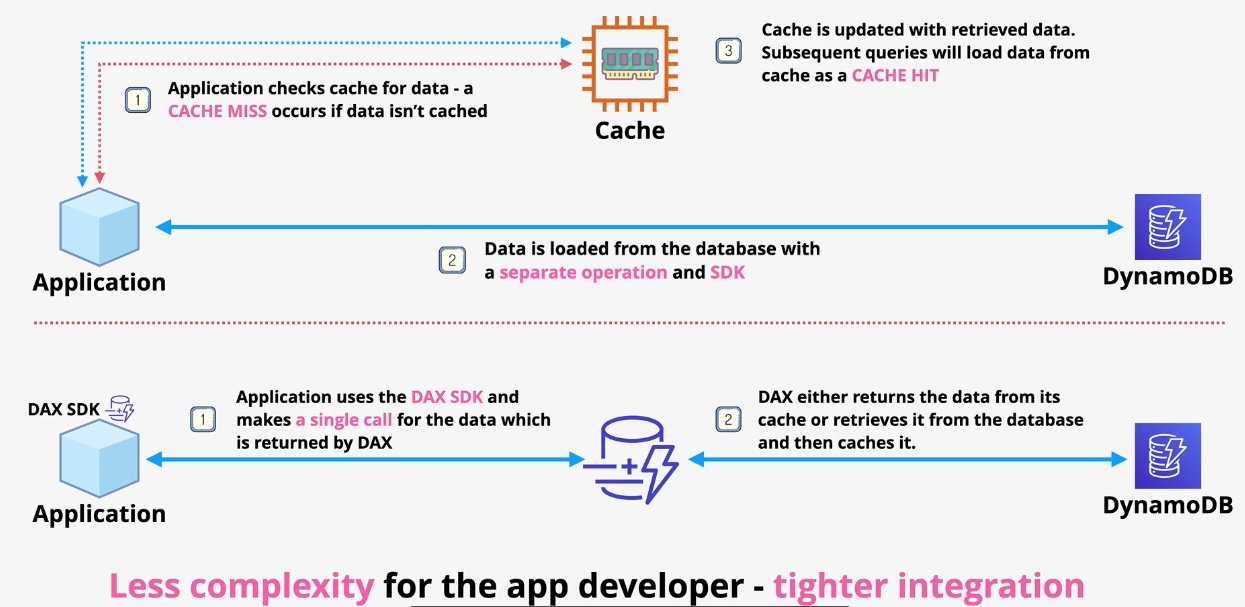

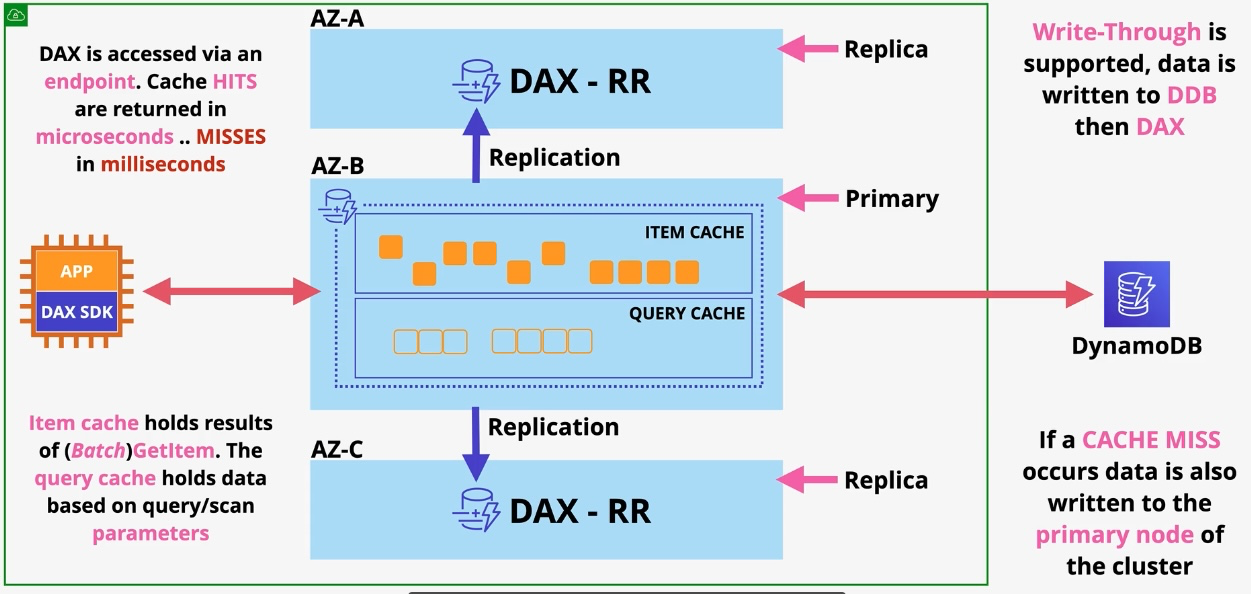

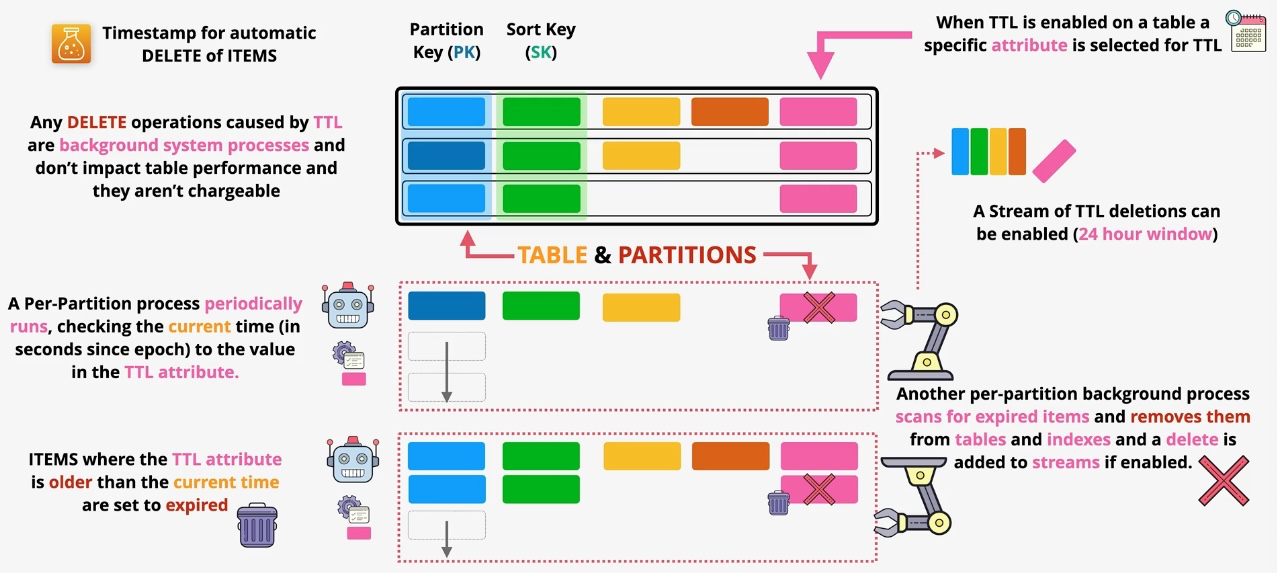

Amazon DynamoDB

- Type: NoSQL Key-Value and Document Database

- Description: Fully managed, serverless NoSQL database for low-latency applications.

- Features:

- Single-digit millisecond response times.

- On-demand or provisioned capacity modes.

- Global Tables for multi-region replication.

- Use Cases:

- Real-time applications (e.g., gaming leaderboards).

- IoT data storage.

- Shopping cart and session management.

Additional Features:

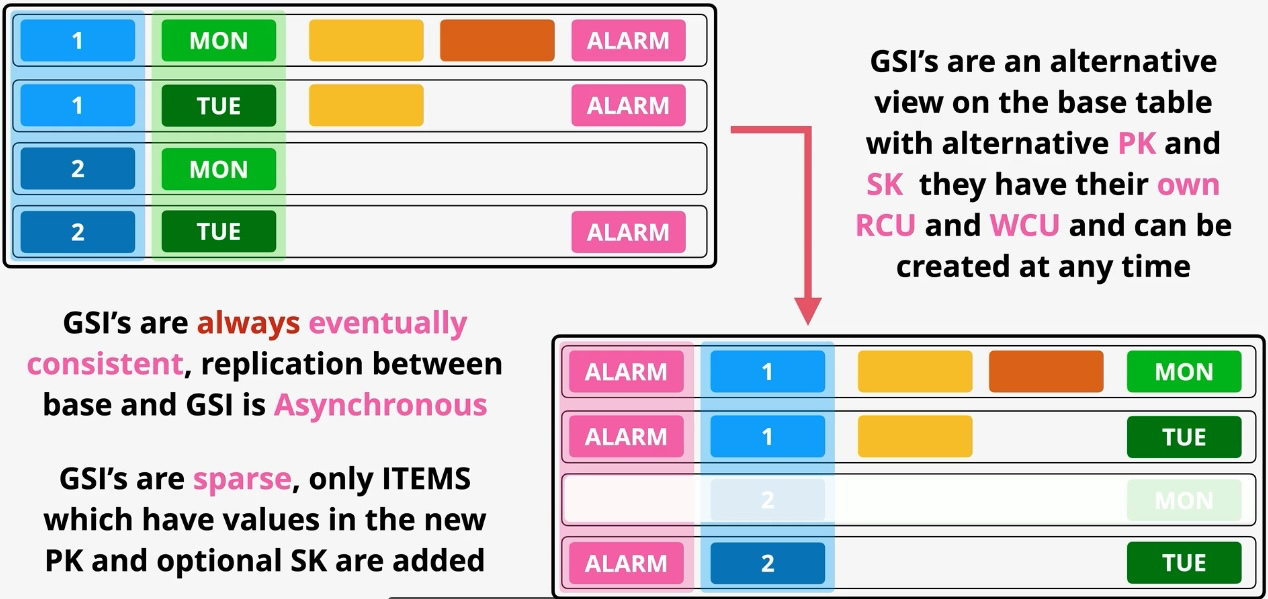

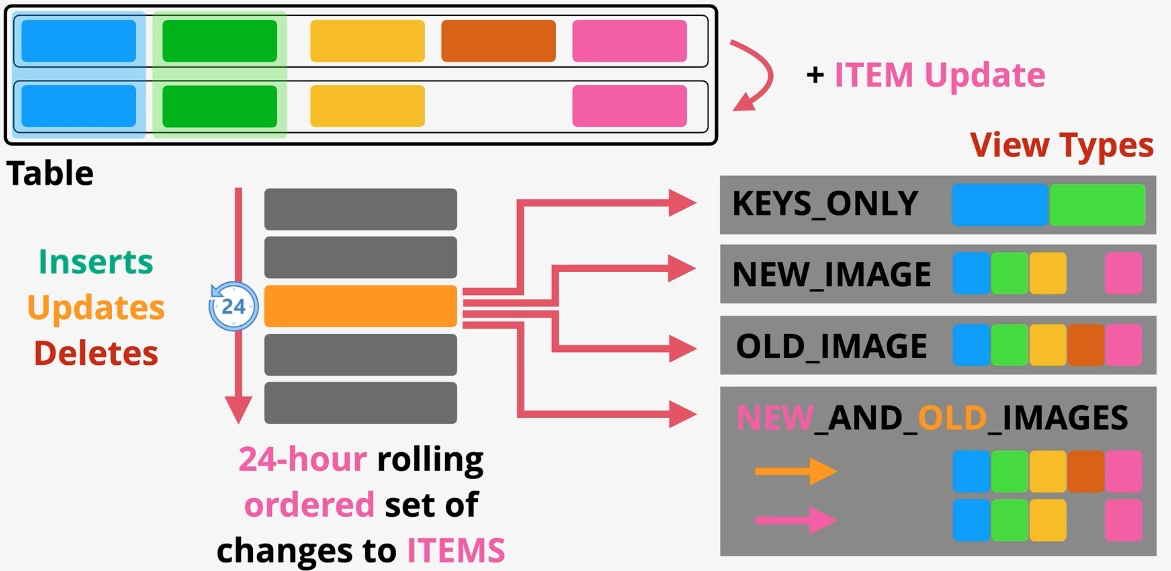

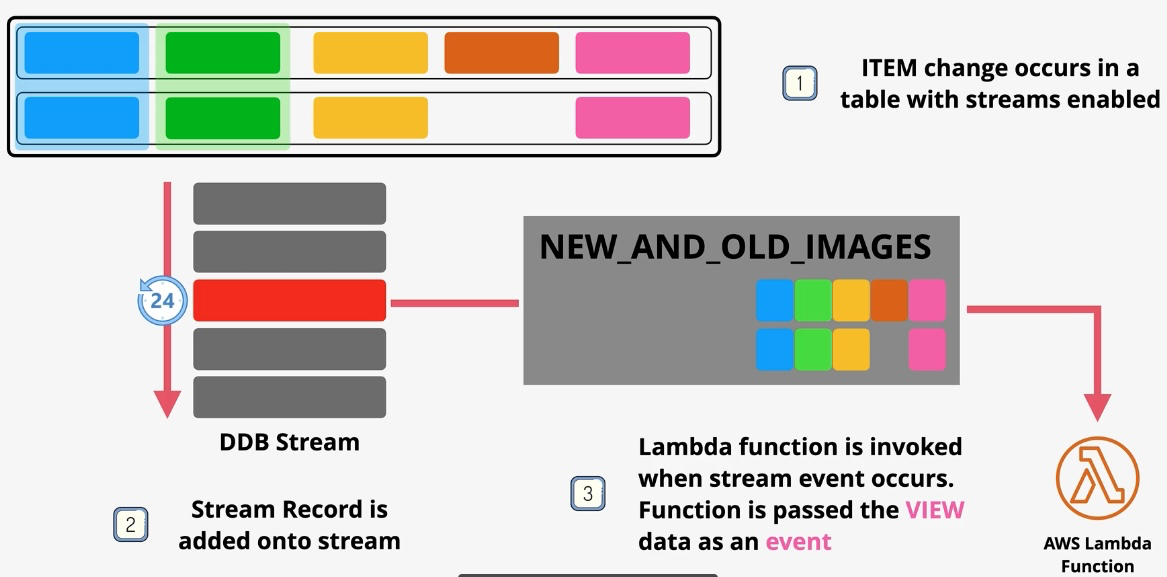

- Streams: Capture item-level changes for event-driven applications.

- Global Tables: Multi-region replication for low-latency global access. Governance and Security:

- Define fine-grained access controls with IAM policies.

- Monitor table activity using CloudWatch Metrics. Examples:

- Power real-time leaderboards for online games.

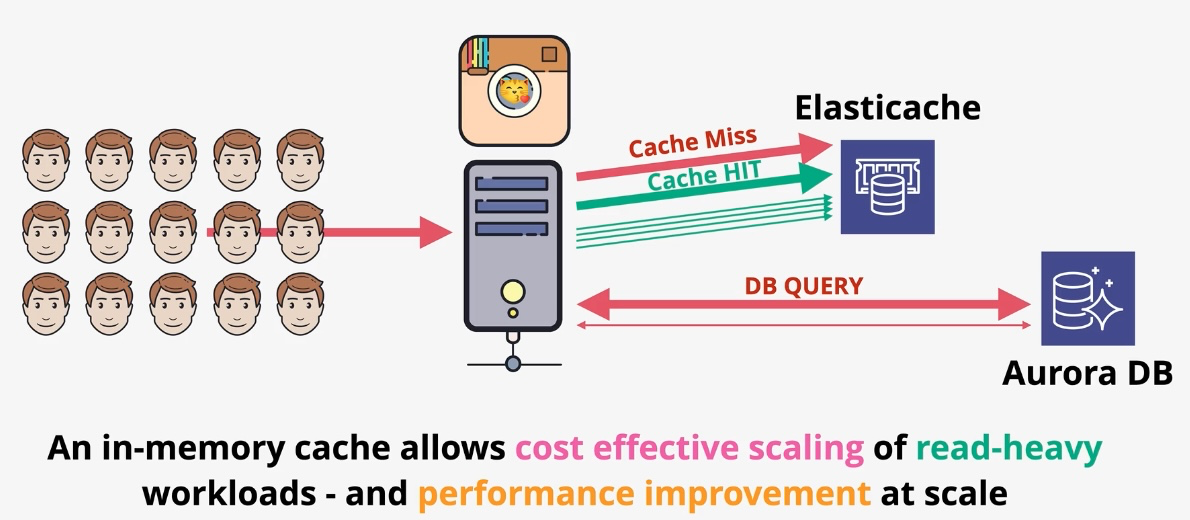

Amazon ElastiCache

- Type: In-Memory Data Store

- Description: Fully managed in-memory data store compatible with Redis and Memcached.

- Subtypes:

- Redis: Advanced in-memory data structure store with support for replication and persistence.

- Memcached: Simple key-value store for caching.

- Use Cases:

- Real-time caching for high-throughput applications.

- Session storage for web applications.

- Gaming leaderboards and real-time analytics.

Amazon Keyspaces (for Apache Cassandra)

- Type: Managed NoSQL Database

- Description: Fully managed database service compatible with Apache Cassandra.

- Features:

- Scalable and highly available.

- Serverless and zero-maintenance.

- Compatible with Cassandra Query Language (CQL).

- Use Cases:

- IoT applications requiring high throughput.

- Time-series data storage.

- Decentralized data models.

Amazon Neptune

- Type: Graph Database

- Description: Fully managed graph database for storing and navigating relationships.

- Features:

- Supports both property graph and RDF graph models.

- Optimized for high-performance graph queries.

- High availability with automated failover.

- Use Cases:

- Social networking applications.

- Fraud detection through relationship analysis.

- Knowledge graphs and recommendation engines.

Amazon Quantum Ledger Database (QLDB)

- Type: Immutable Ledger Database

- Description: Fully managed ledger database providing a transparent, immutable, and cryptographically verifiable transaction log.

- Features:

- Append-only, cryptographically chained data structure.

- Managed service with no server provisioning required.

- ACID-compliant transactions.

- Use Cases:

- Financial transaction tracking.

- Supply chain management.

- Identity verification and compliance systems.

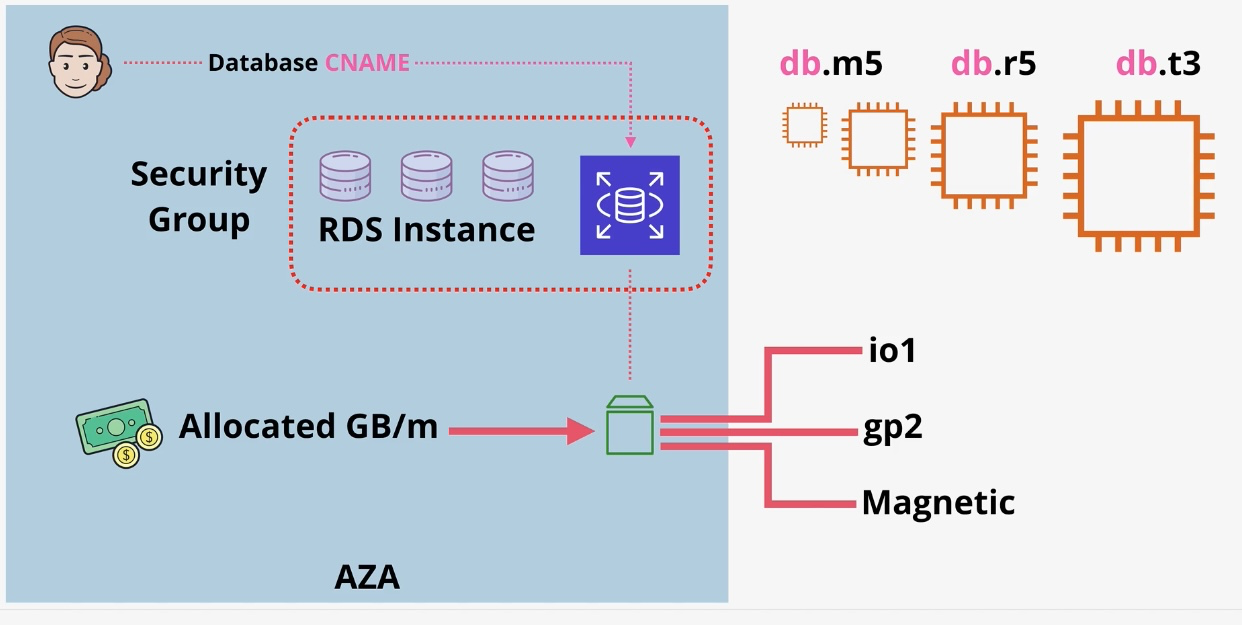

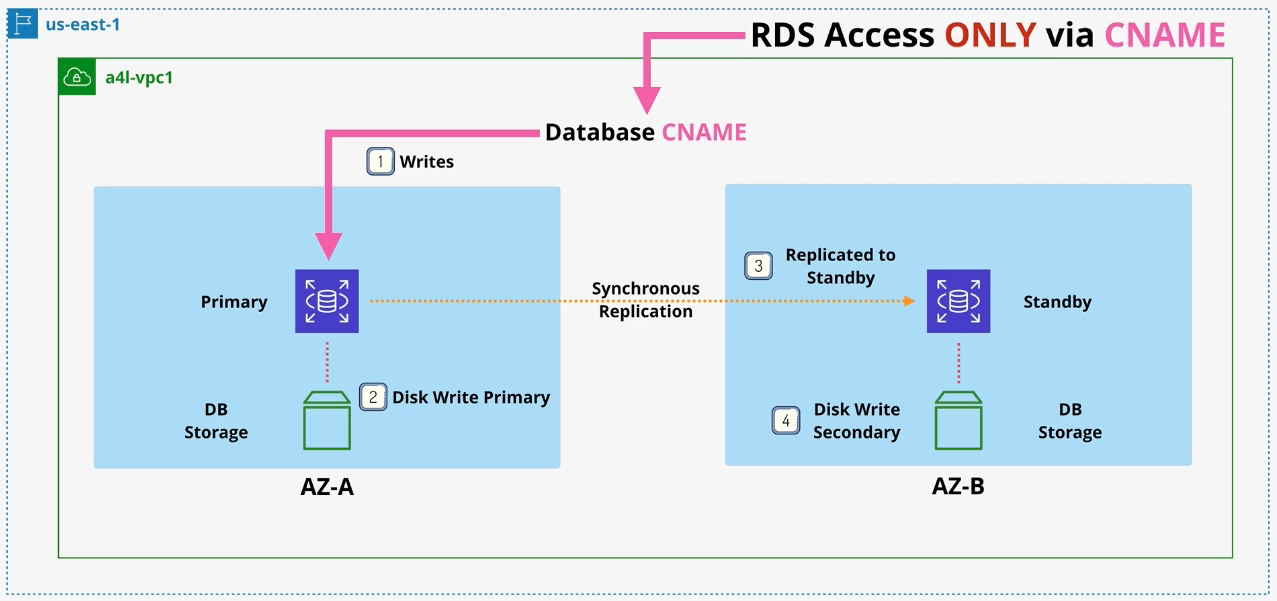

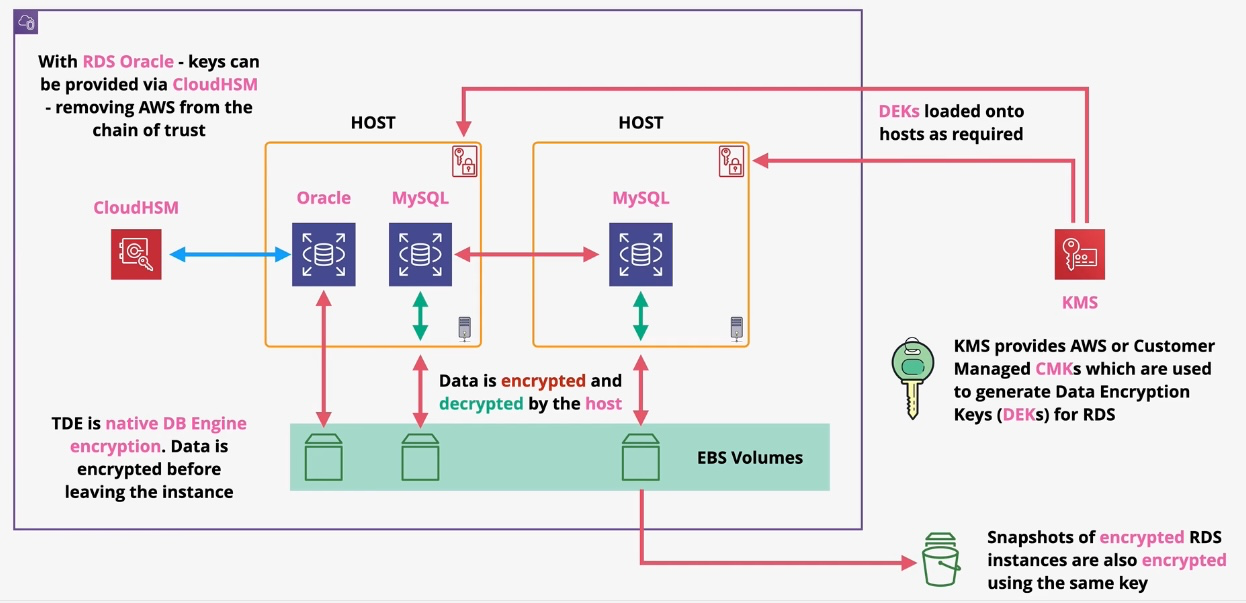

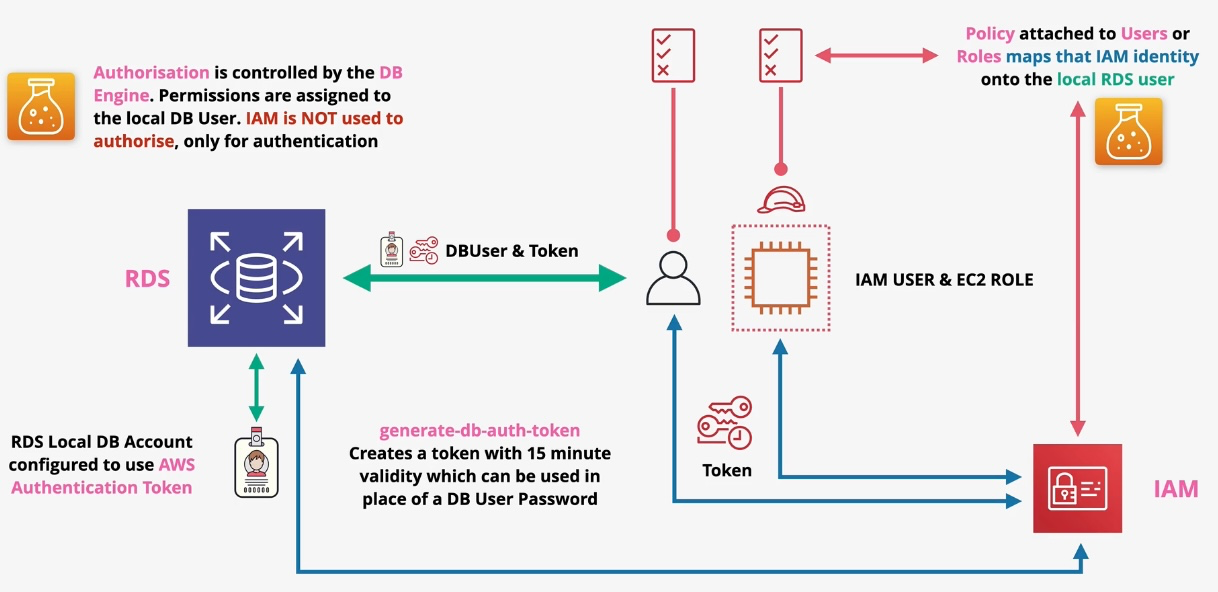

Amazon RDS (Relational Database Service)

- Type: Managed Relational Database

- Description: Simplifies the setup, operation, and scaling of relational databases.

- Supported Engines:

- MySQL

- PostgreSQL

- MariaDB

- Oracle

- SQL Server

- Features:

- Automated backups and software patching.

- Multi-AZ deployments for high availability.

- Read replicas for improved performance.

- Use Cases:

- Hosting transactional databases.

- Enterprise applications requiring relational data storage.

- E-commerce platforms and CMS applications.

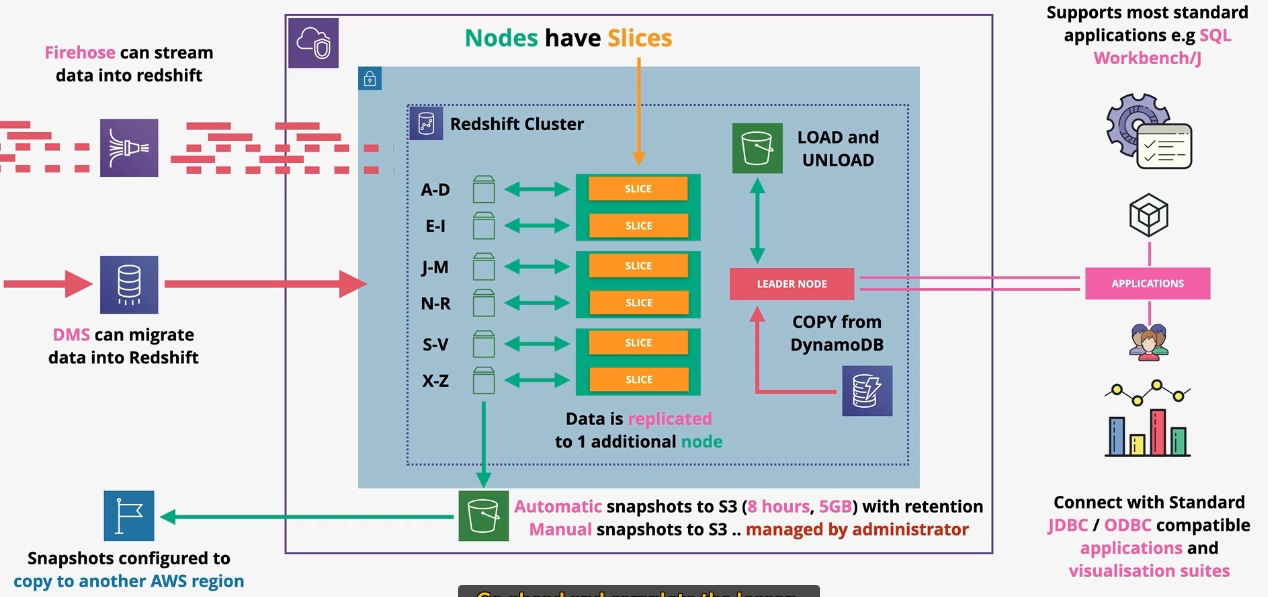

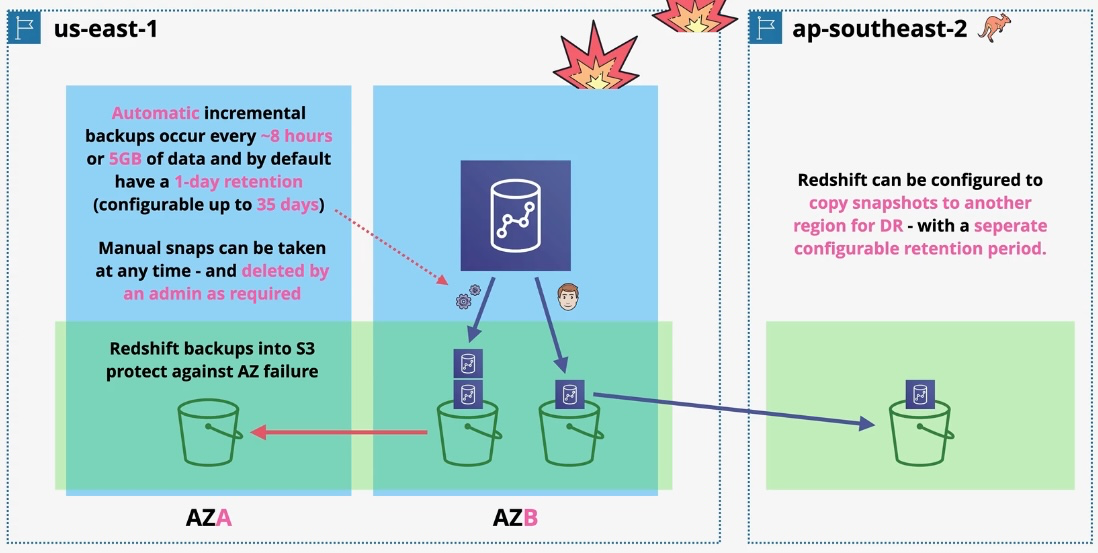

Amazon Redshift

- Type: Data Warehousing Service

- Description: Fully managed, petabyte-scale data warehouse for analyzing structured and semi-structured data.

- Features:

- Columnar storage for high-performance analytics.

- Integration with BI tools like QuickSight.

- Redshift Spectrum for querying S3 data without ETL.

- Use Cases:

- Business intelligence and reporting.

- Analyzing large datasets from IoT or transactional systems.

- Preparing data for machine learning.

Comparison of Database Services Service Best For Amazon Aurora High-performance relational workloads. Aurora Serverless Unpredictable or intermittent traffic patterns. Amazon DocumentDB MongoDB-compatible document database. Amazon DynamoDB Low-latency key-value and document storage. Amazon ElastiCache Real-time caching and in-memory data stores. Amazon Keyspaces Cassandra-compatible workloads for time-series or IoT data. Amazon Neptune Graph-based applications for relationship analysis. Amazon QLDB Immutable ledger use cases like transaction tracking. Amazon RDS Relational databases with multiple engine options. Amazon Redshift Analytical queries on large datasets for business intelligence.

Developer Tools

AWS X-Ray

- Type: Distributed Tracing Service

- Description: Helps developers analyze and debug distributed applications, providing insights into performance and identifying bottlenecks.

- Use Cases:

- Debugging serverless and microservices applications.

- Identifying latency issues in distributed systems.

- Monitoring end-to-end application performance.

Front-End Web and Mobile

AWS Amplify

- Type: Front-End Development Framework

- Description: Provides tools and services to build scalable, secure mobile and web applications.

- Use Cases:

- Hosting single-page applications.

- Simplifying backend integrations.

- Rapid prototyping and deployment.

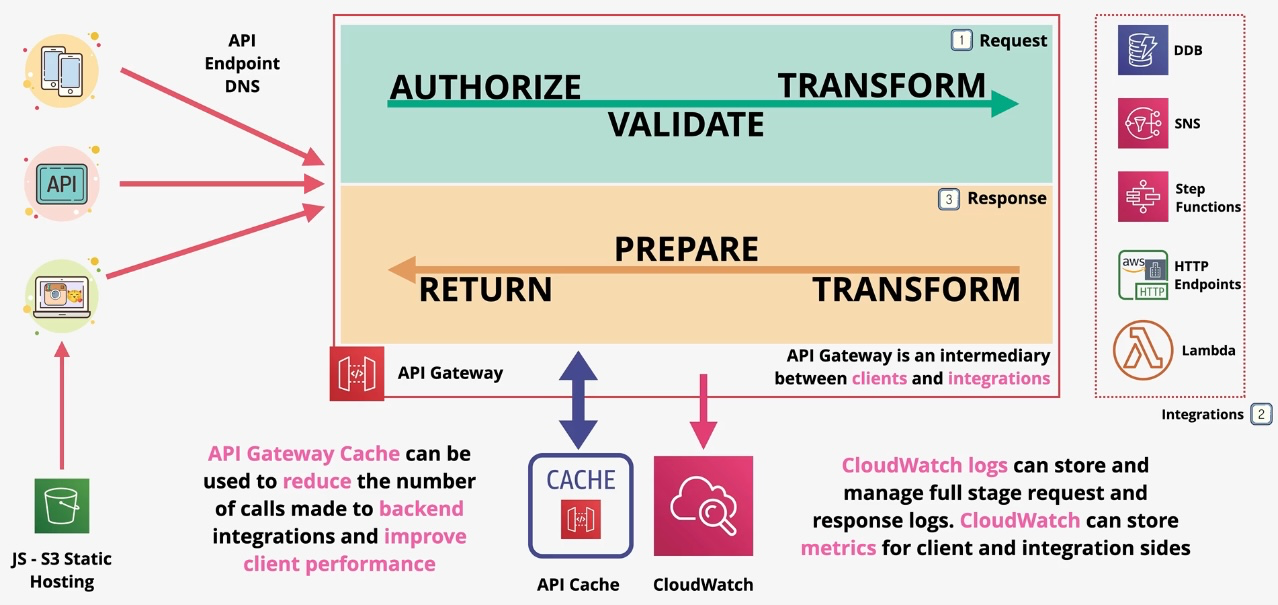

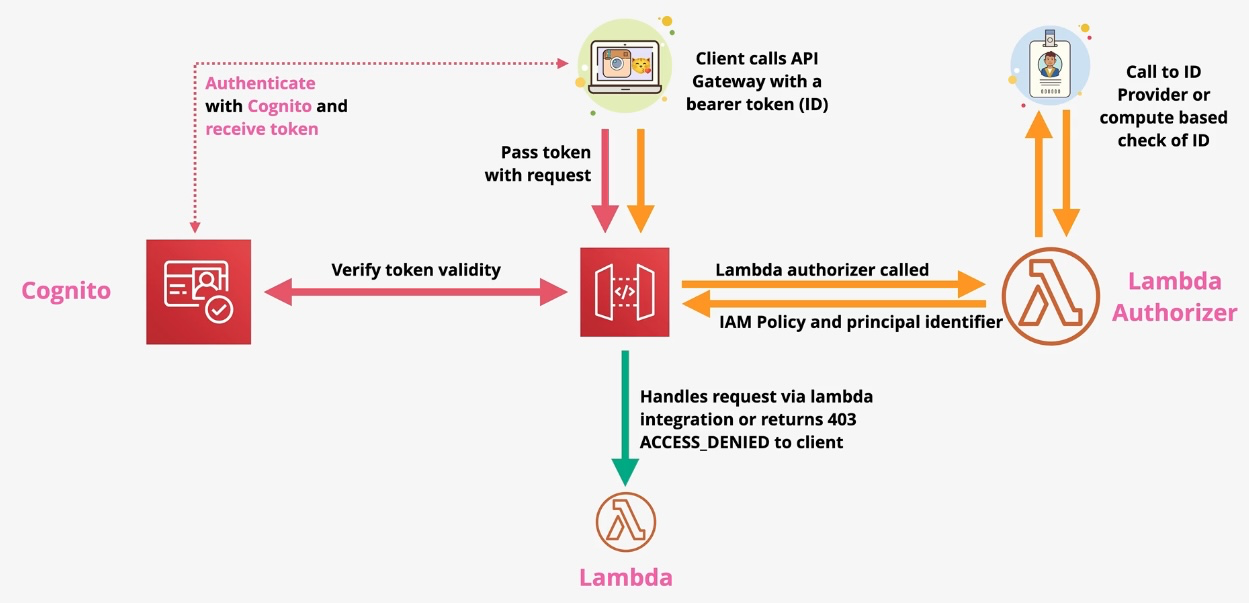

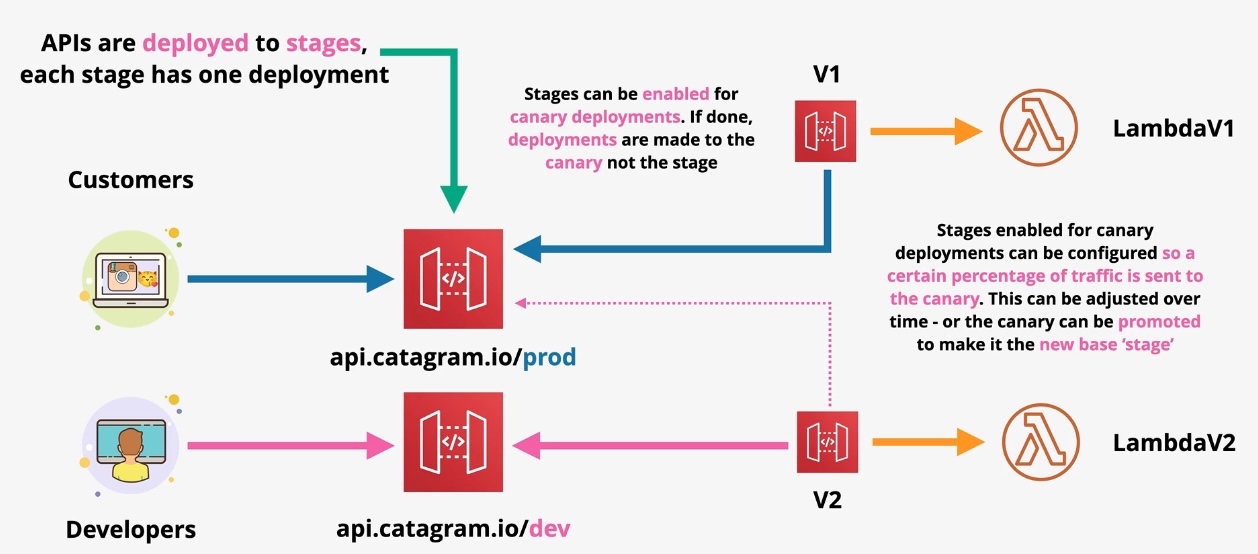

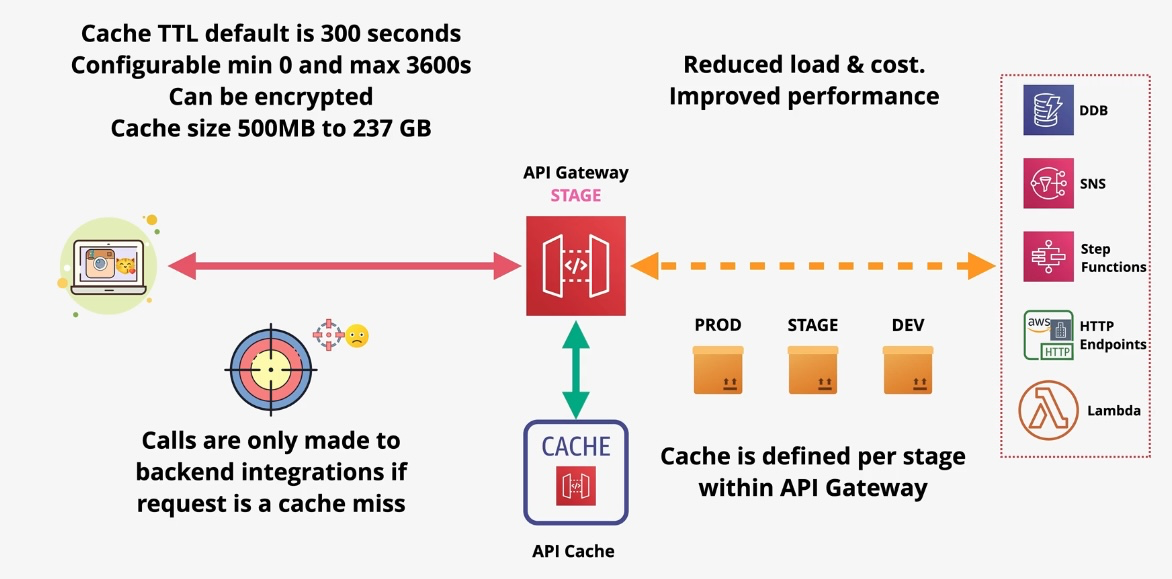

Amazon API Gateway

- Type: Managed API Gateway

- Description: Enables developers to create, publish, maintain, and secure APIs.

- Use Cases:

- Creating RESTful APIs.

- Managing WebSocket APIs.

- Enabling serverless architectures with AWS Lambda. Additional Features:

- Usage Plans: Control API access with rate limiting and quotas.

- WebSocket Support: Enable real-time, two-way communication. Governance and Security:

- Implement authorization with IAM, Lambda authorizers, or Cognito.

- Use WAF for additional protection against threats. Examples:

- Build serverless APIs for mobile applications.

AWS Device Farm

- Type: Mobile App Testing Service

- Description: Tests mobile and web apps on real devices hosted in the AWS cloud.

- Use Cases:

- Cross-platform application testing.

- Identifying bugs in different OS environments.

- Automated testing for mobile applications.

Amazon Pinpoint

- Type: Customer Engagement Service

- Description: Helps businesses engage with customers via targeted messaging campaigns.

- Use Cases:

- Marketing automation.

- User retention campaigns.

- Analyzing customer behavior.

Machine Learning

Amazon Comprehend

- Type: Natural Language Processing (NLP)

- Description: Extracts insights from text, such as sentiment, key phrases, entities, and more.

- Use Cases:

- Analyzing customer feedback.

- Text classification for document processing.

- Social media sentiment analysis.

Amazon Forecast

- Type: Time-Series Forecasting Service

- Description: Uses machine learning to generate accurate forecasts for business metrics.

- Use Cases:

- Inventory planning.

- Financial forecasting.

- Resource demand predictions.

Amazon Fraud Detector

- Type: Fraud Detection Service

- Description: Identifies potentially fraudulent online activities using machine learning.

- Use Cases:

- Preventing payment fraud.

- Reducing fake account registrations.

- Monitoring transaction anomalies.

Amazon Kendra

- Type: Enterprise Search Service

- Description: Provides intelligent search capabilities for internal documents and datasets.

- Use Cases:

- Knowledge management.

- Internal document search.

- Enhancing customer support tools.

Amazon Lex

- Type: Conversational AI Service

- Description: Builds conversational interfaces using automatic speech recognition (ASR) and natural language understanding (NLU).

- Use Cases:

- Building chatbots.

- IVR systems for customer support.

- Real-time conversational applications.

Amazon Polly

- Type: Text-to-Speech Service

- Description: Converts text into lifelike speech using deep learning.

- Use Cases:

- Content accessibility for visually impaired users.

- Automating audio for news and articles.

- Building voice-enabled applications.

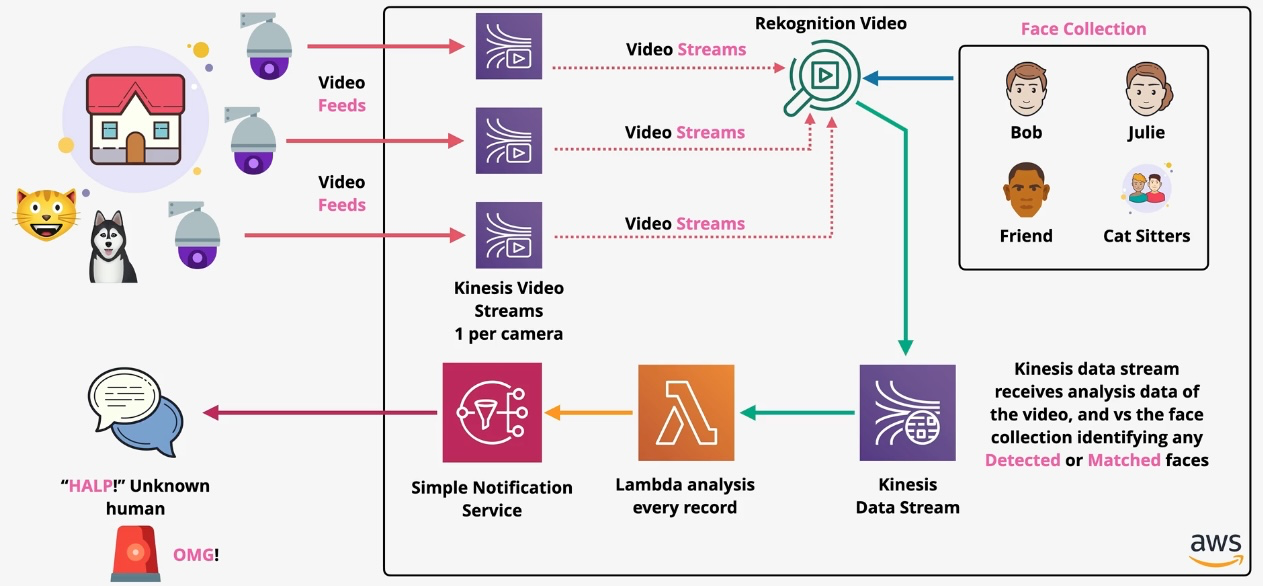

Amazon Rekognition

- Type: Image and Video Analysis

- Description: Provides image and video recognition capabilities, including facial recognition and object detection.

- Use Cases:

- Content moderation for images/videos.

- Real-time facial recognition in security systems.

- Image metadata extraction.

Amazon SageMaker

- Type: Machine Learning Development Platform

- Description: Helps build, train, and deploy machine learning models at scale.

- Use Cases:

- Custom machine learning model development.

- Real-time model inference.

- Automated ML pipeline creation. Additional Features:

- Autopilot: Automatically build, train, and tune ML models.

- Studio: Fully integrated development environment for ML workflows. Governance and Security:

- Encrypt ML models and datasets.

- Secure endpoints with VPC configurations. Examples:

- Predict customer churn using structured customer data.

Amazon Textract

- Type: Document Text Extraction

- Description: Automatically extracts text, forms, and tables from scanned documents.

- Use Cases:

- Invoice and receipt processing.

- Document digitization.

- Automating data entry workflows.

Amazon Transcribe

- Type: Speech-to-Text Service

- Description: Converts speech into text using machine learning.

- Use Cases:

- Transcribing customer calls.

- Real-time speech analytics.

- Captioning for videos.

Amazon Translate

- Type: Language Translation Service

- Description: Provides neural machine translation for 75+ languages.

- Use Cases:

- Localizing content for global audiences.

- Real-time translation in communication apps.

- Automating multilingual customer support.

Management and Governance Cheat Sheet

AWS Auto Scaling

- Type: Resource Scaling Service

- Description: Automatically adjusts resource capacity to maintain performance and minimize costs.

- Use Cases:

- Scaling EC2 instances based on demand.

- Scaling DynamoDB read/write capacity.

- Scaling ECS tasks for containerized applications.

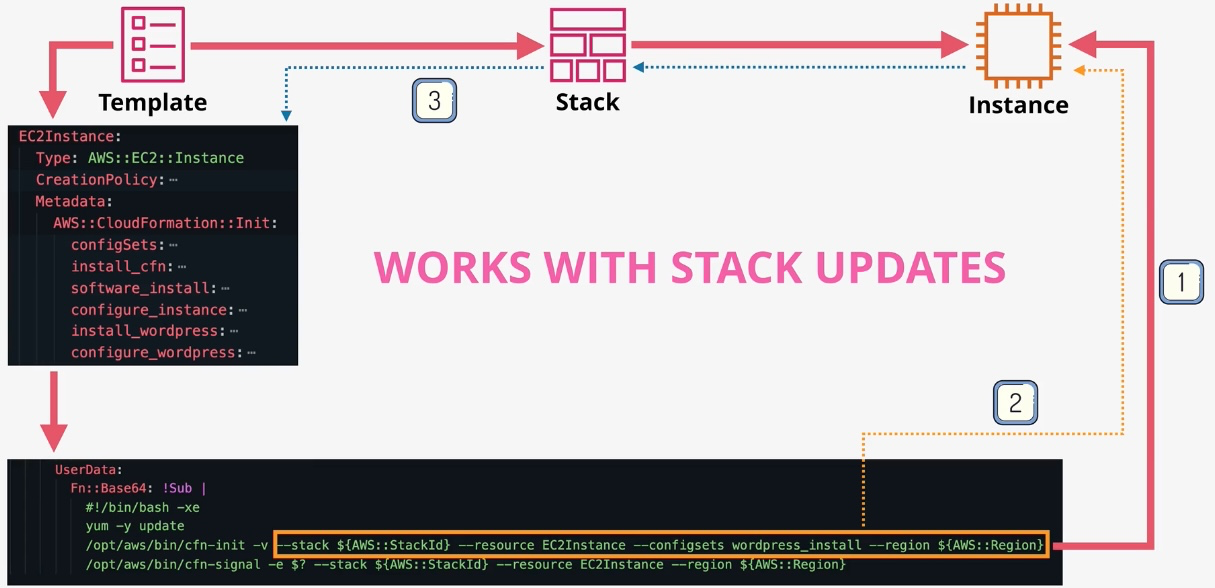

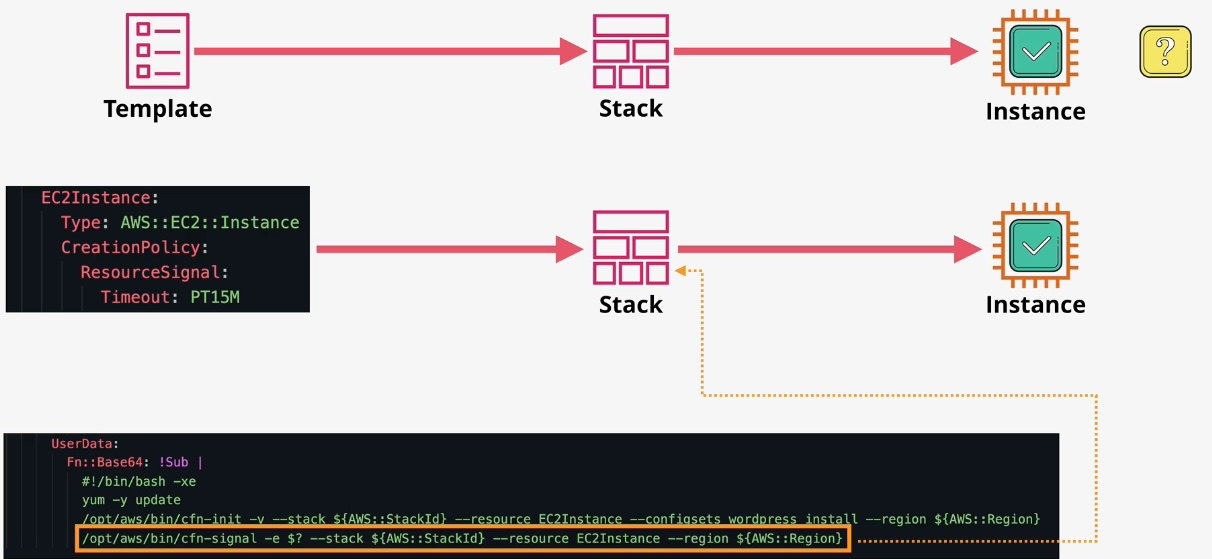

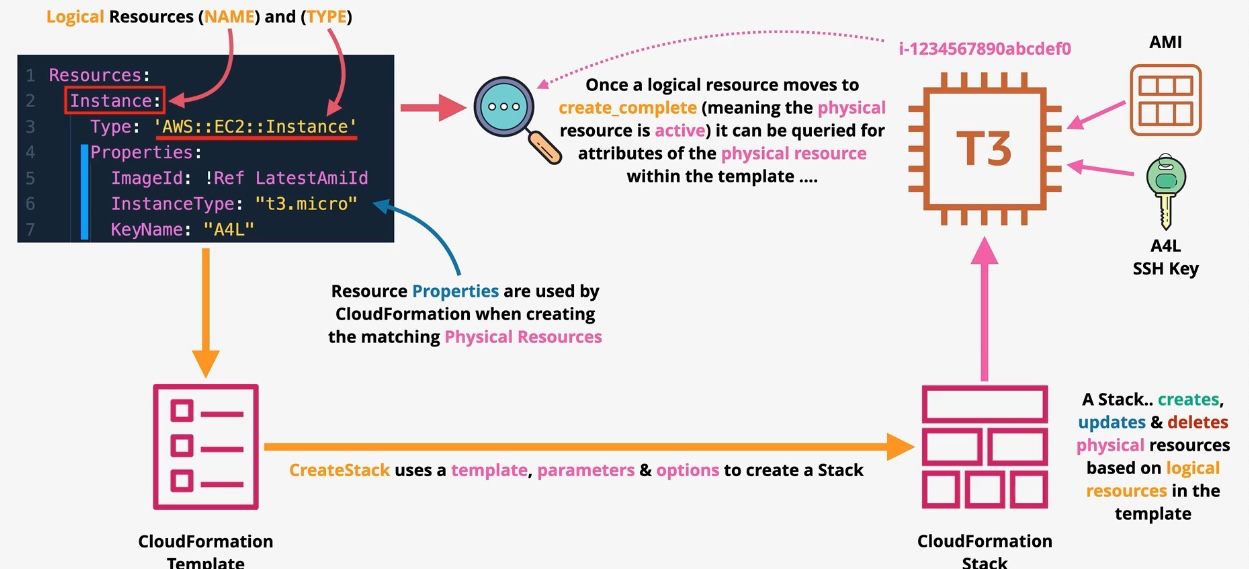

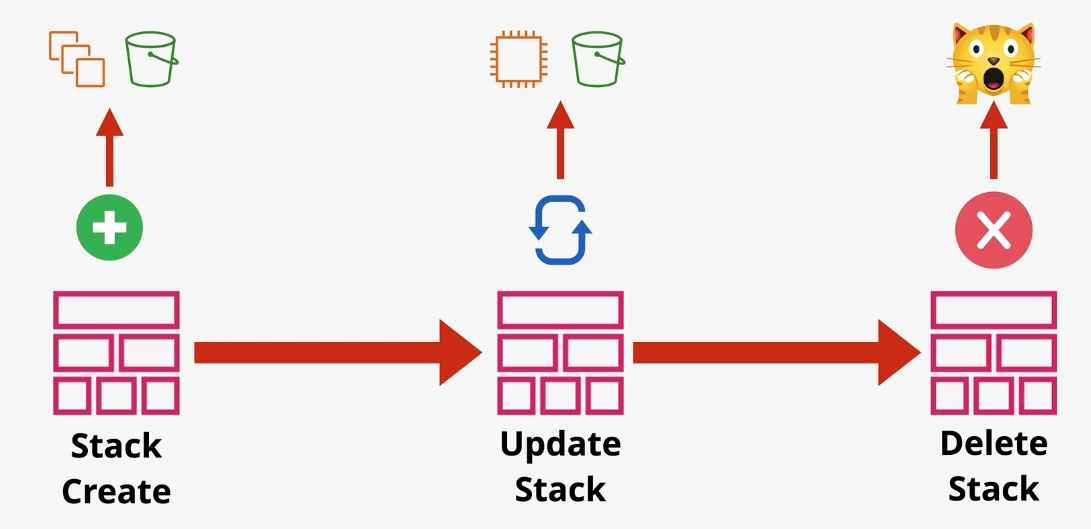

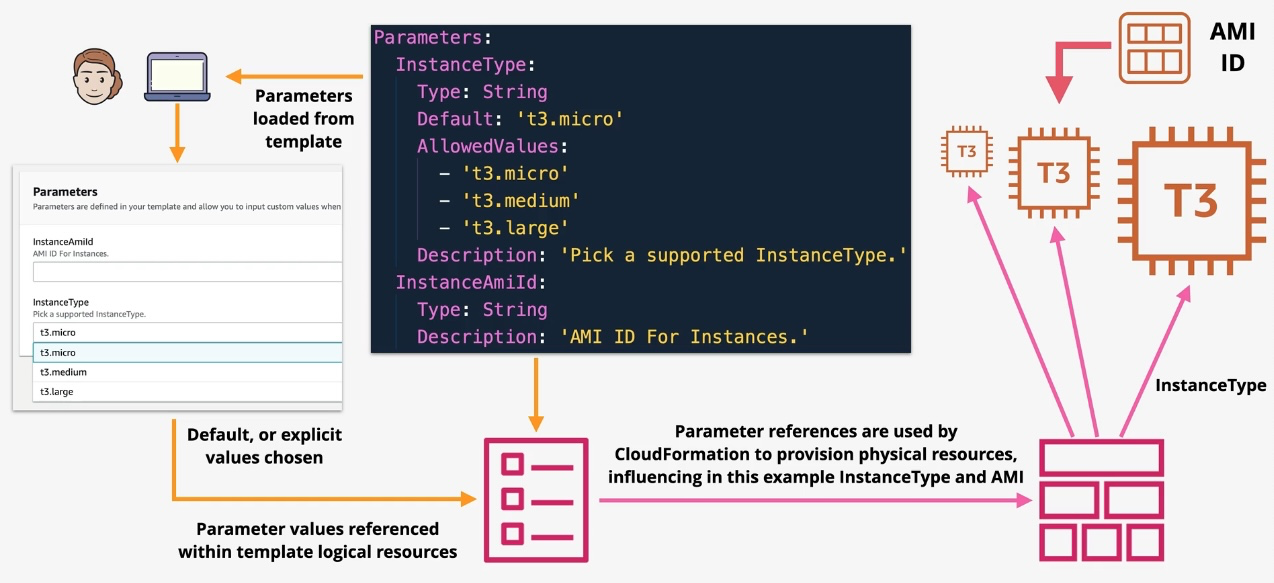

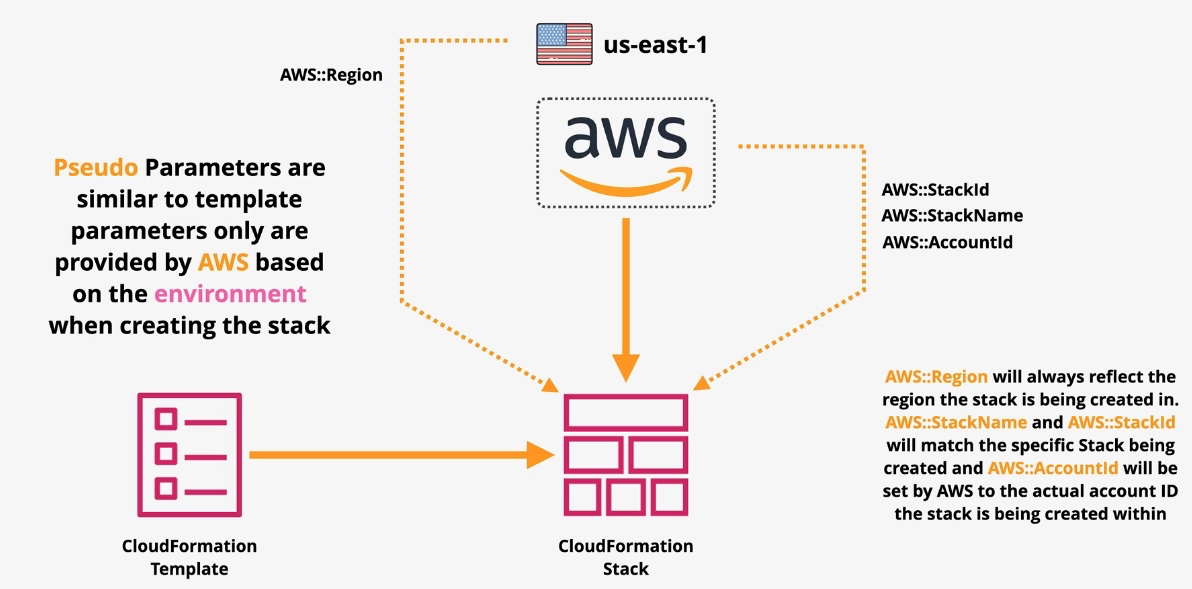

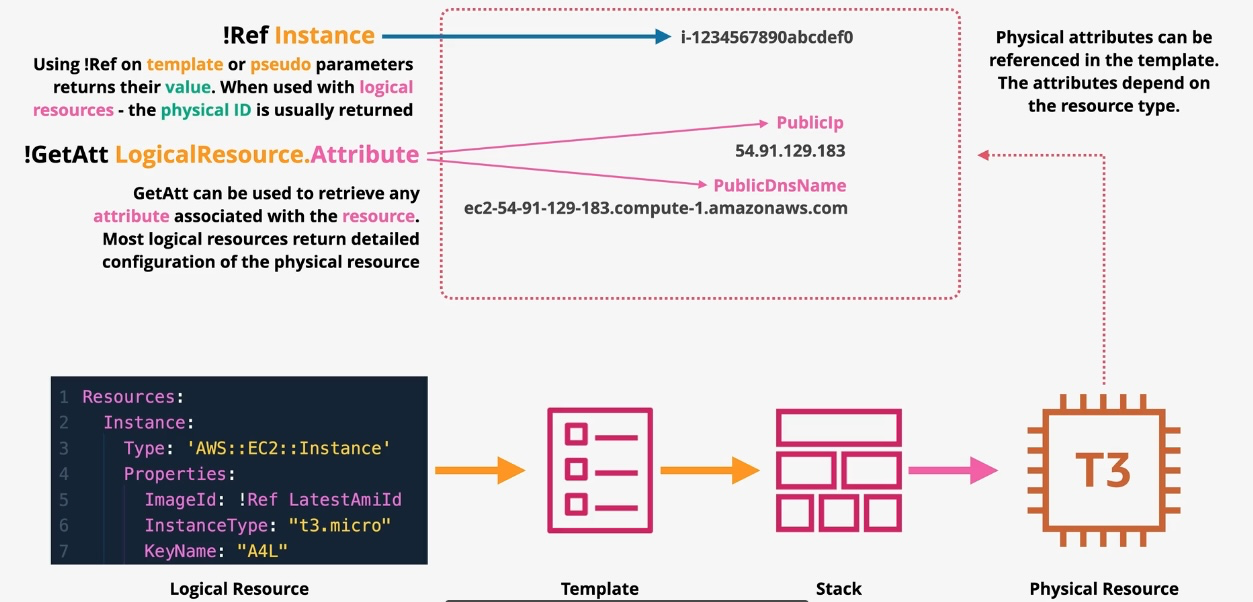

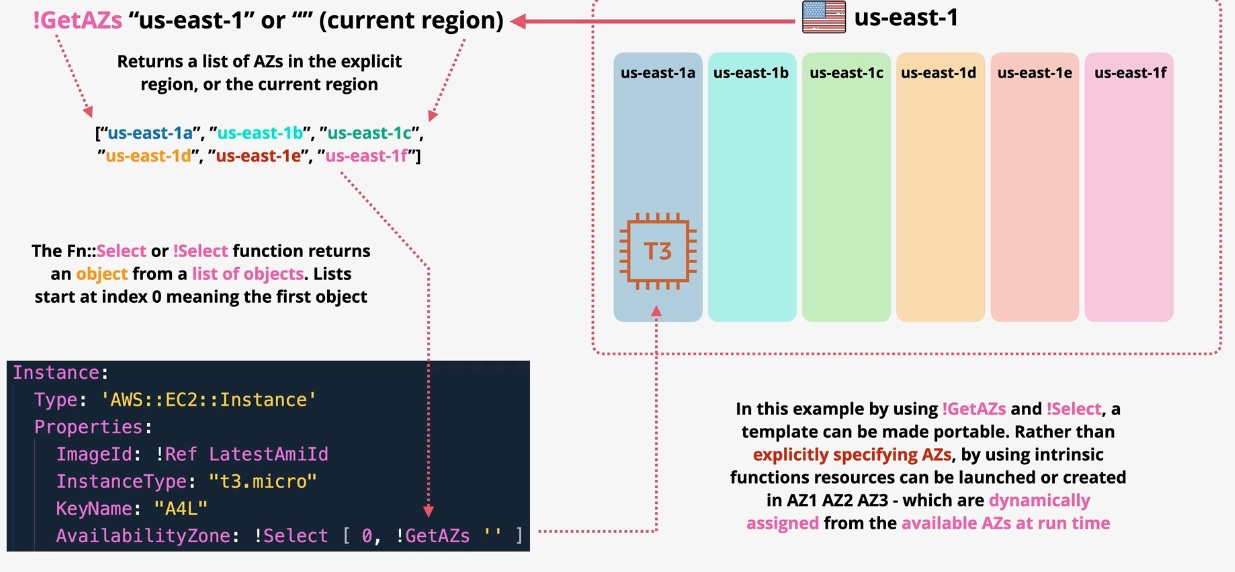

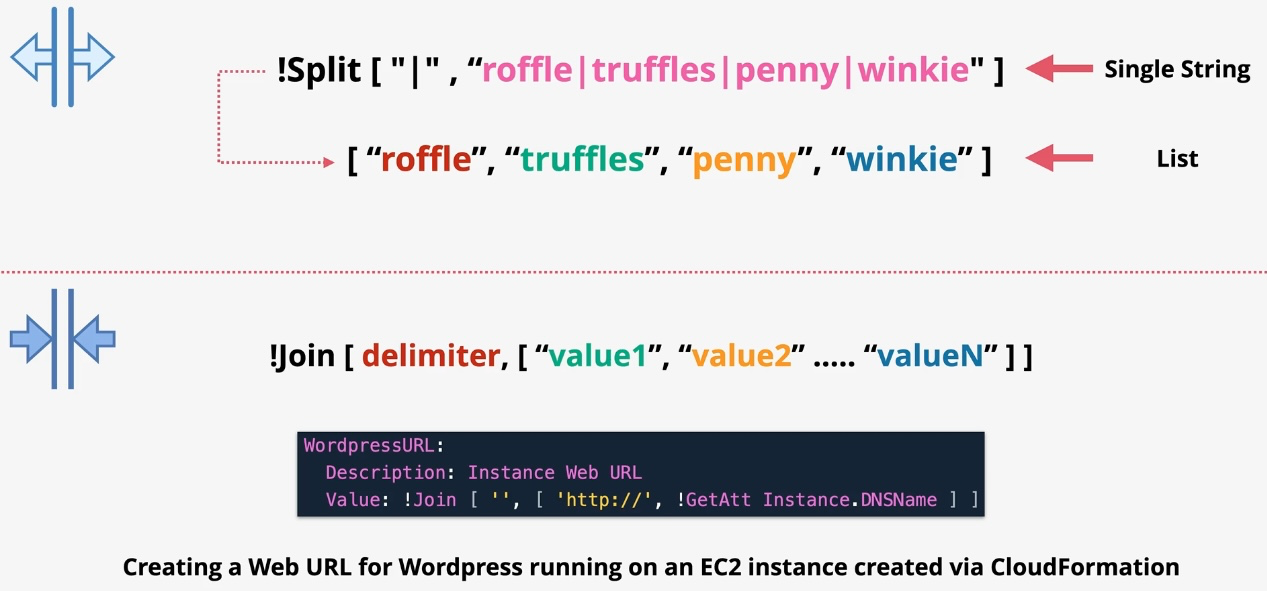

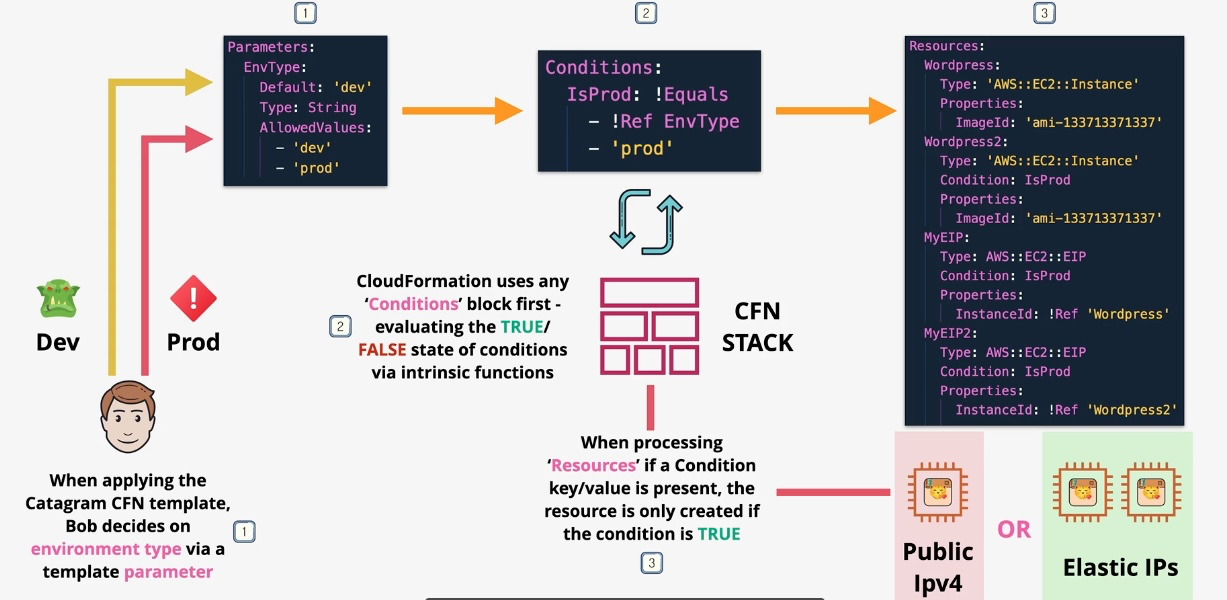

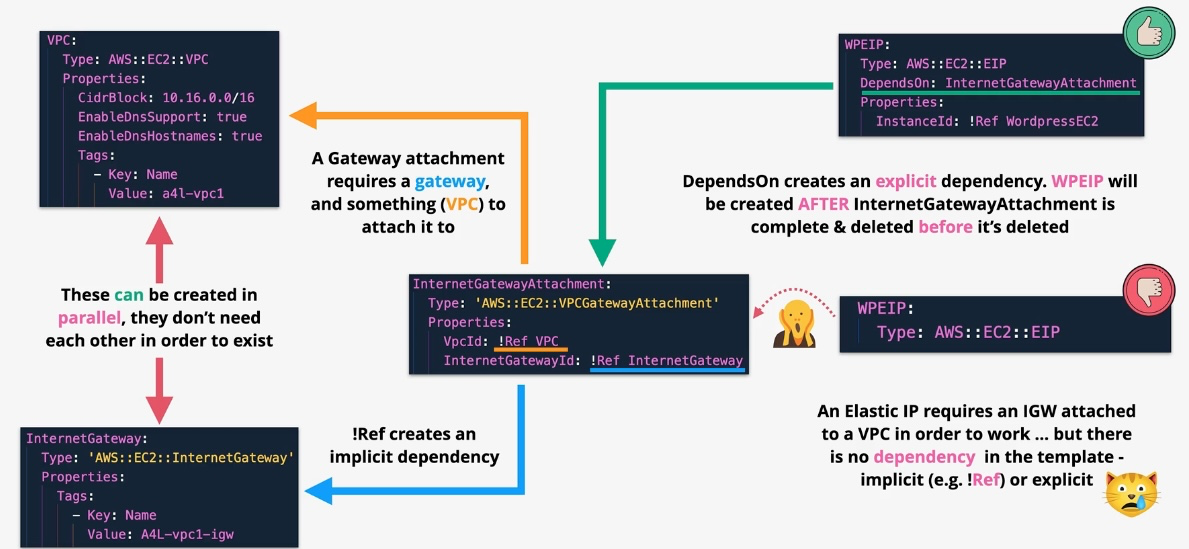

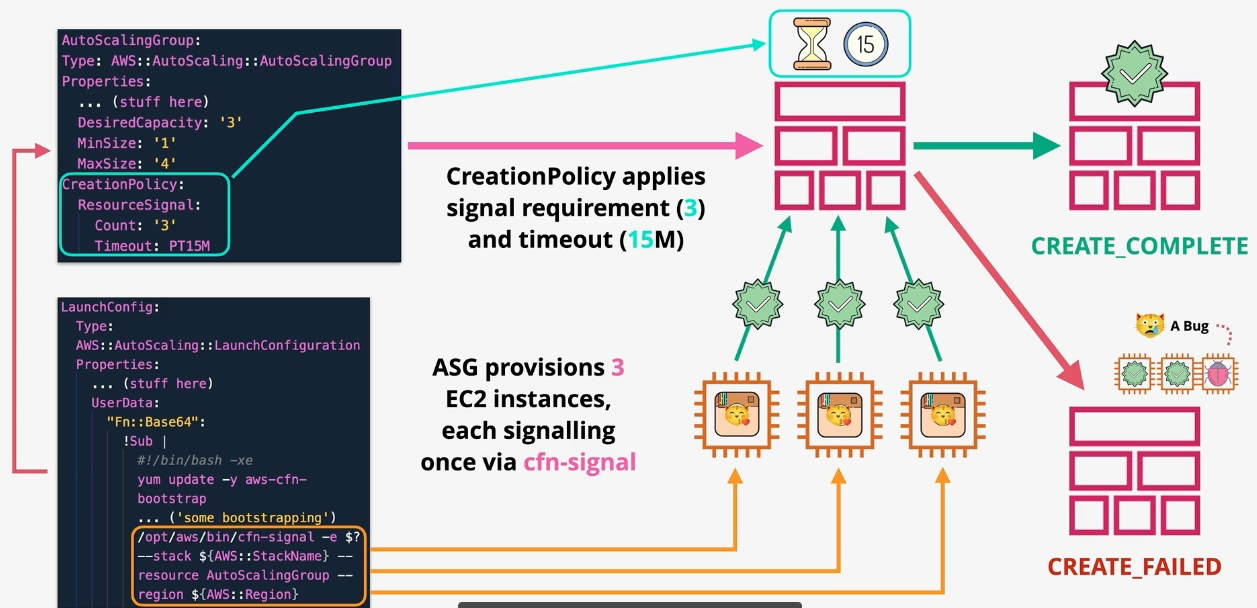

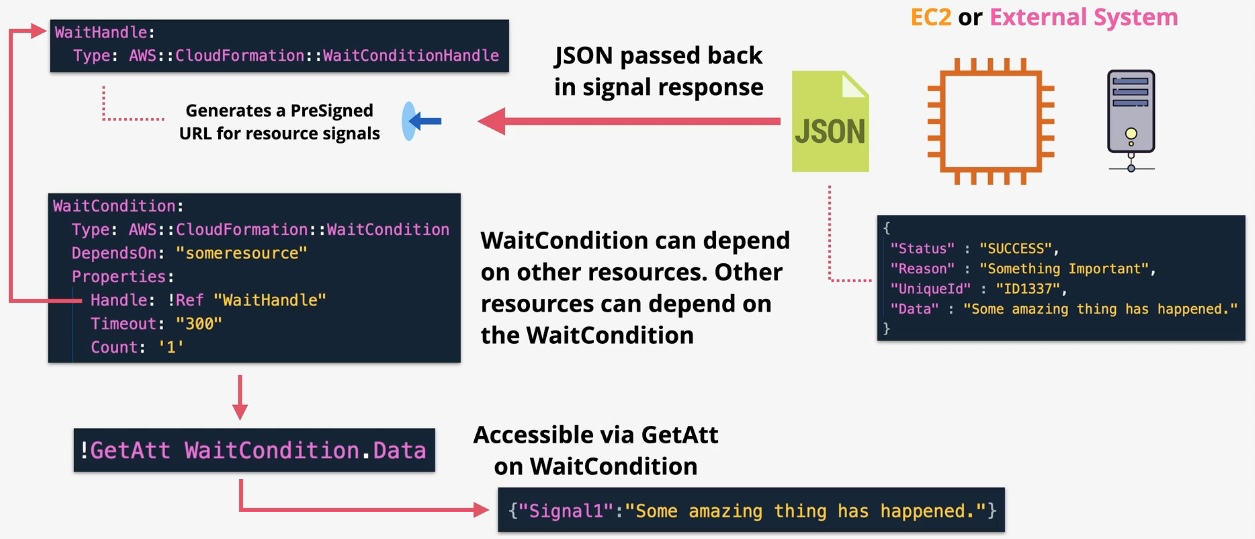

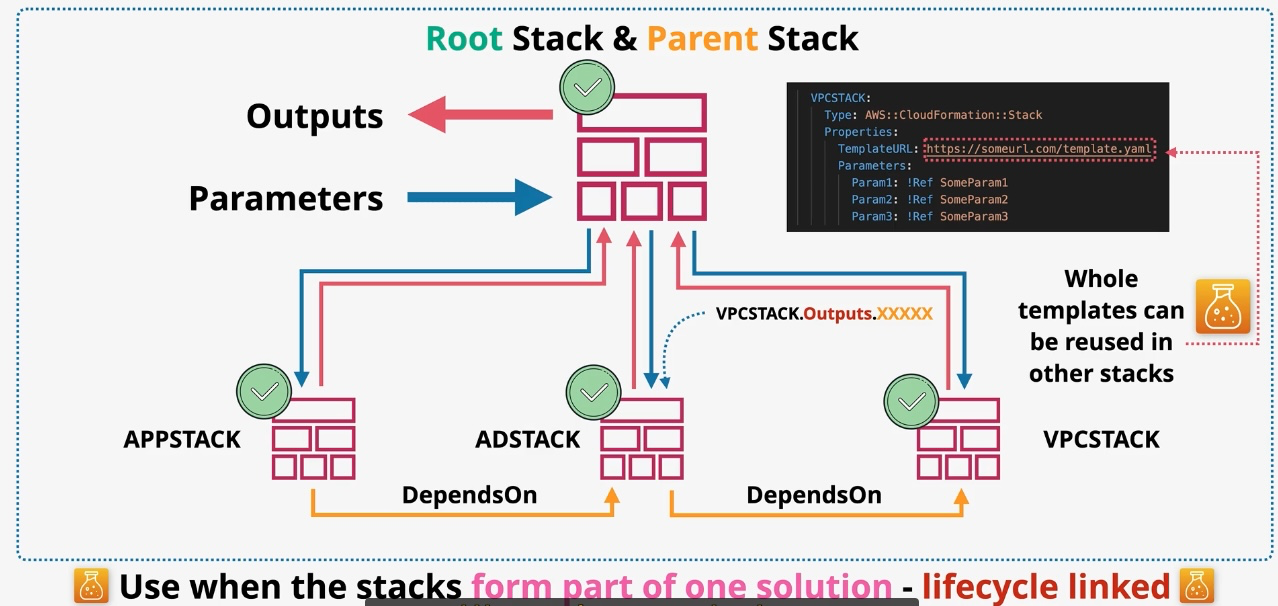

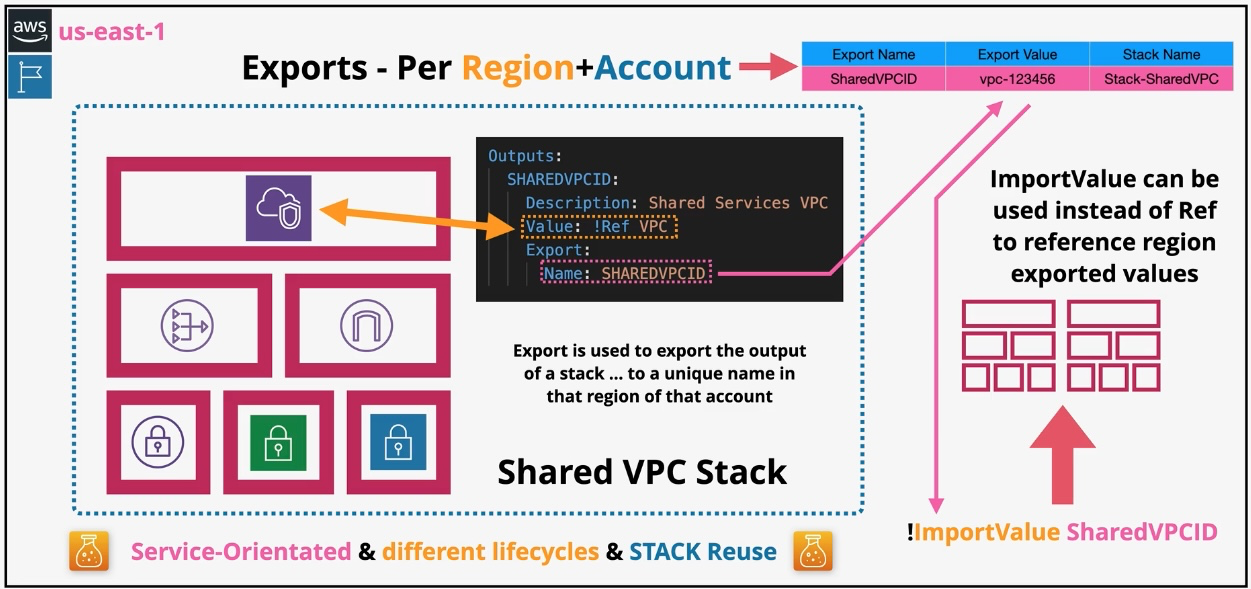

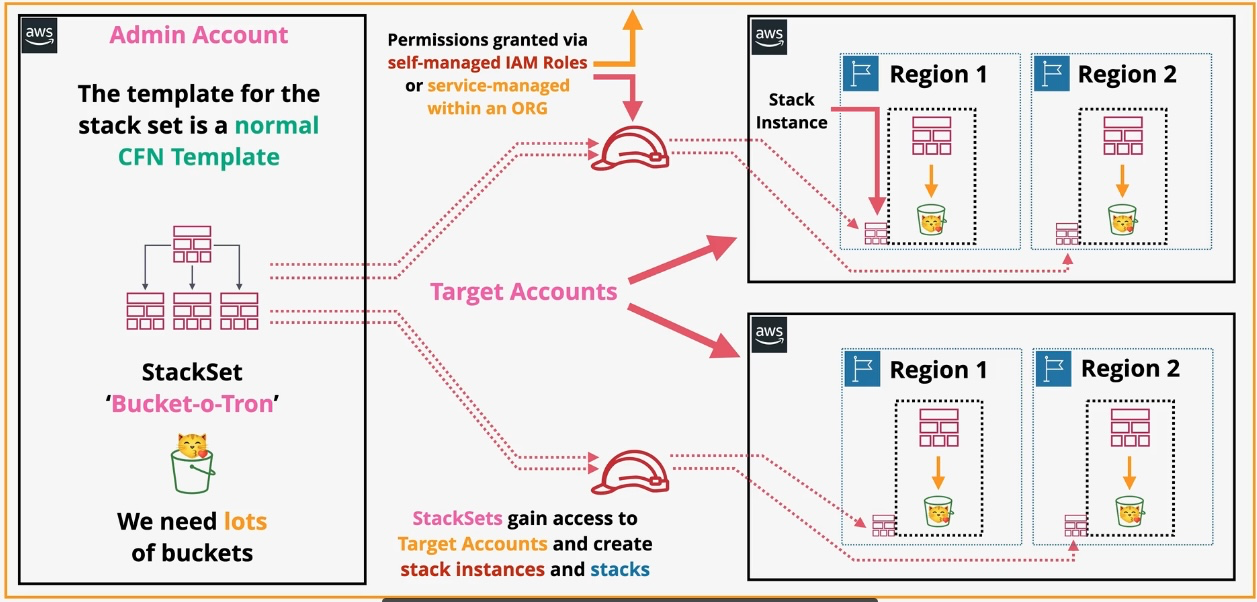

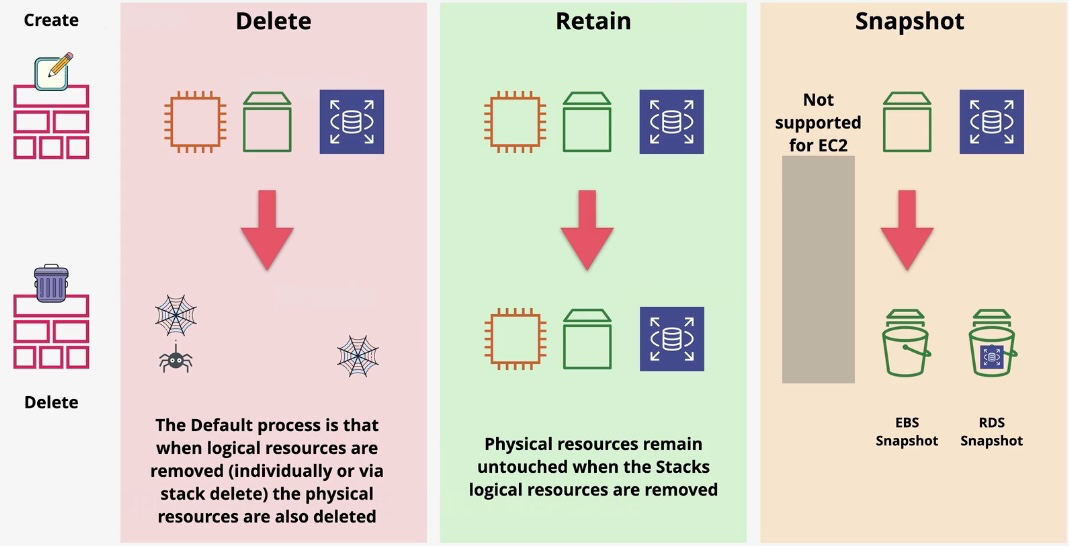

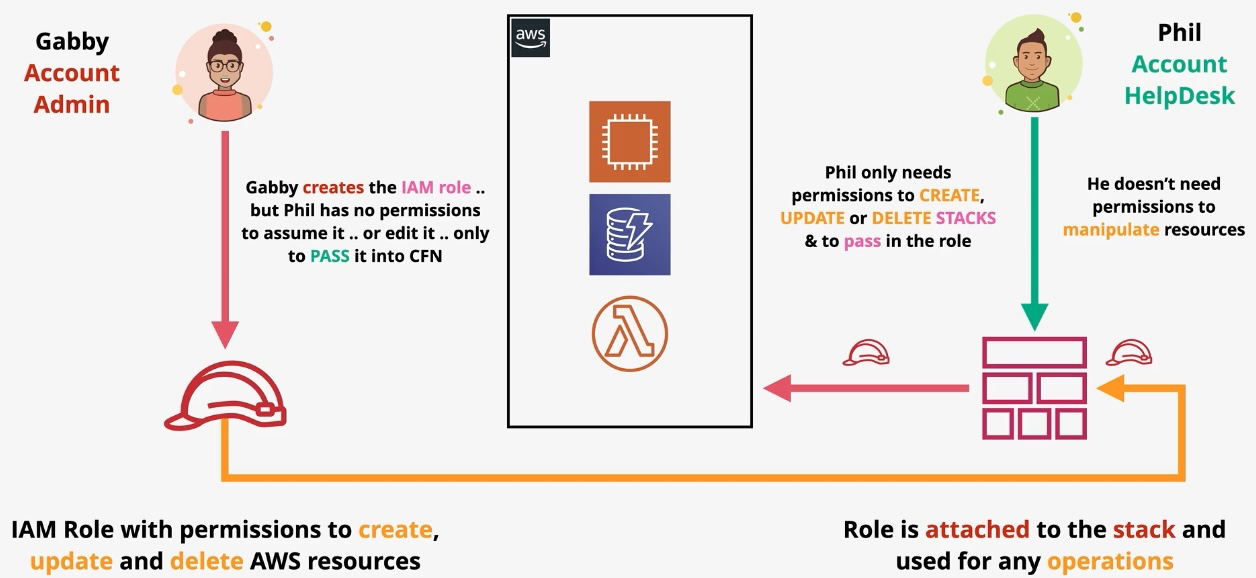

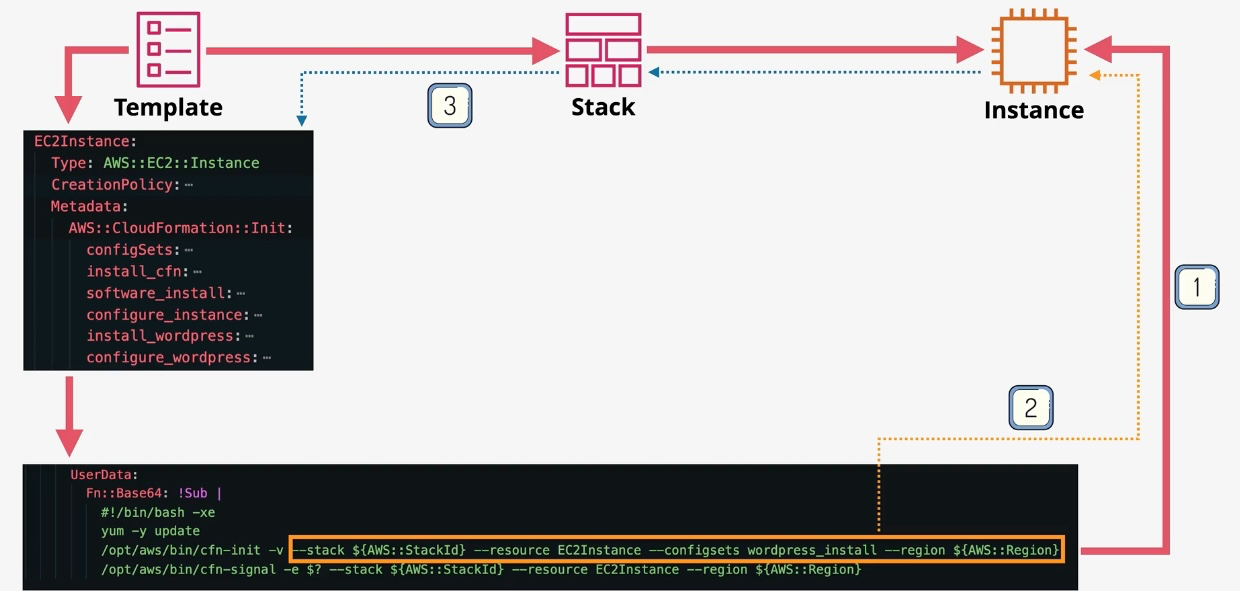

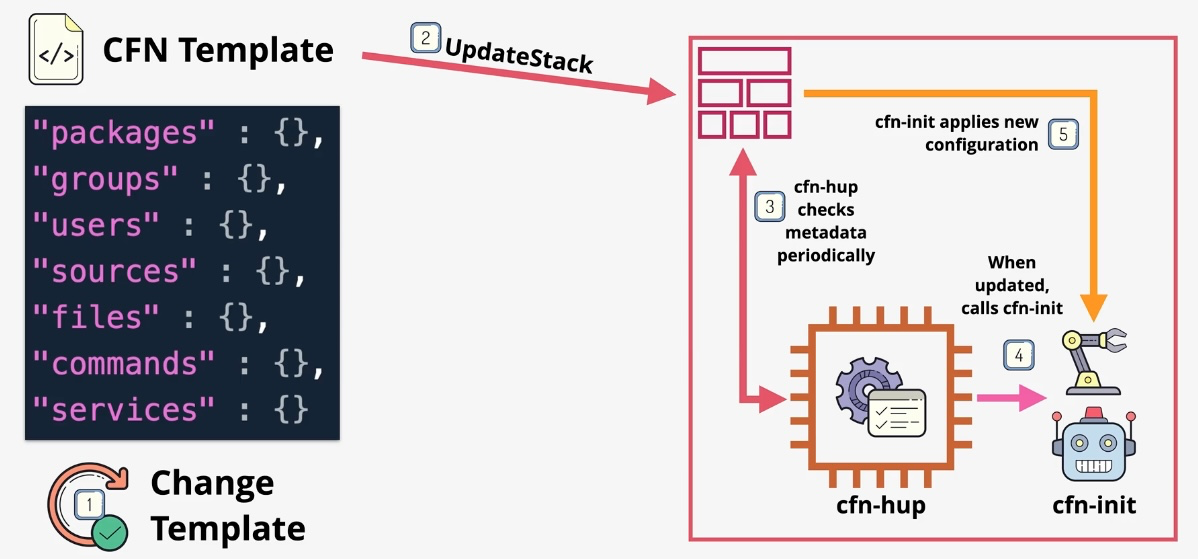

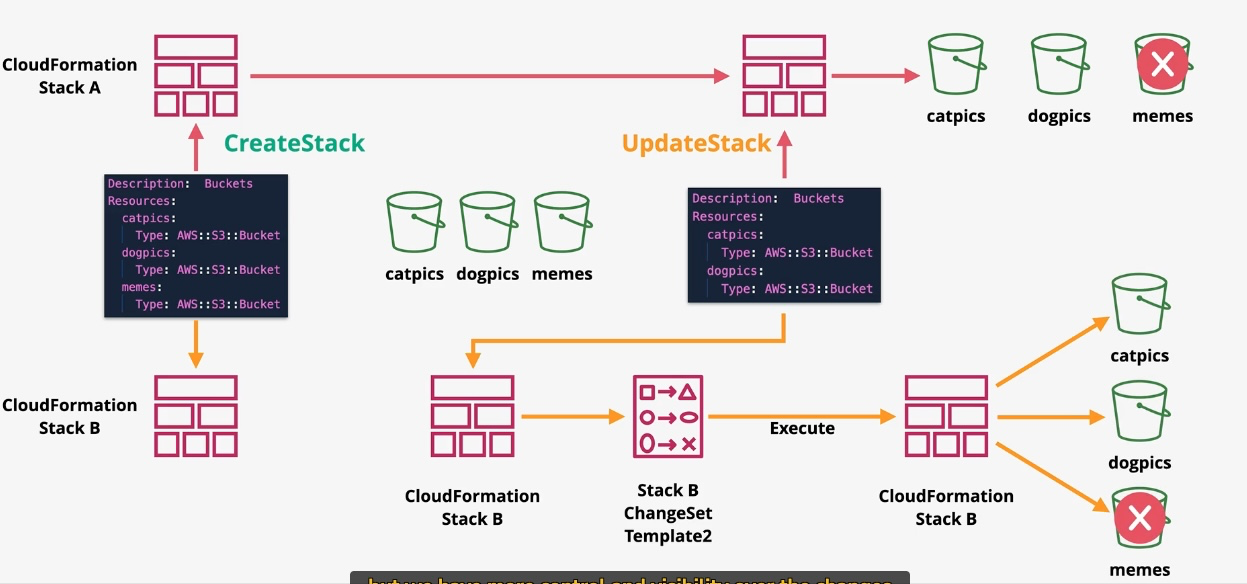

AWS CloudFormation

- Type: Infrastructure as Code

- Description: Automates resource provisioning and management using JSON or YAML templates.

- Use Cases:

- Deploying consistent environments across accounts and regions.

- Version-controlling infrastructure changes.

- Managing complex multi-service deployments.

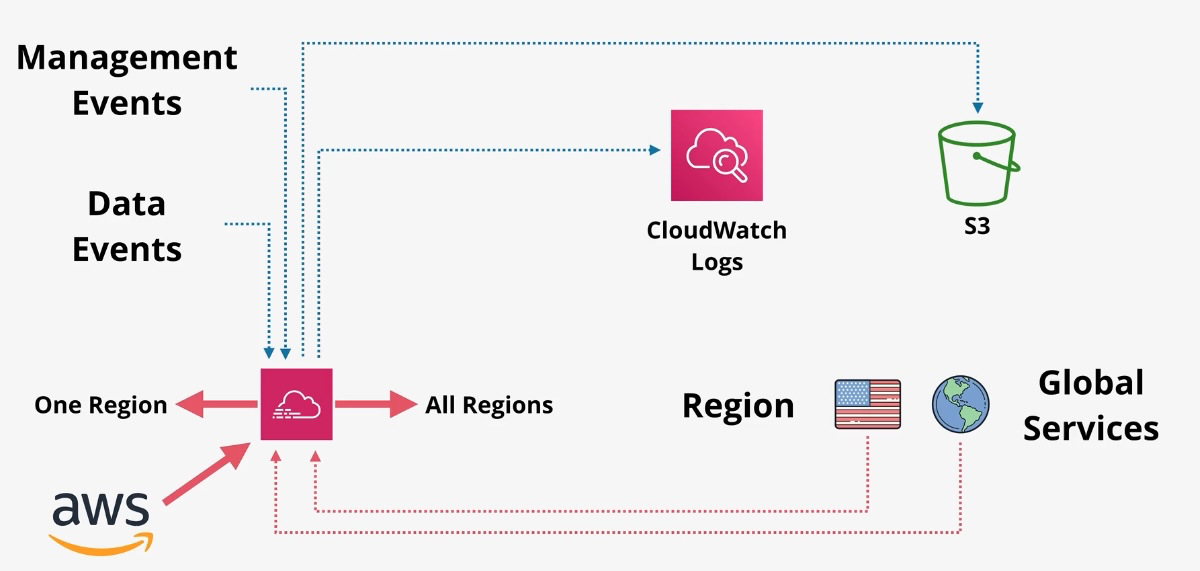

AWS CloudTrail

- Type: Logging Service

- Description: Records API calls and activity made on AWS services.

- Use Cases:

- Security auditing.

- Compliance monitoring.

- Troubleshooting operational issues.

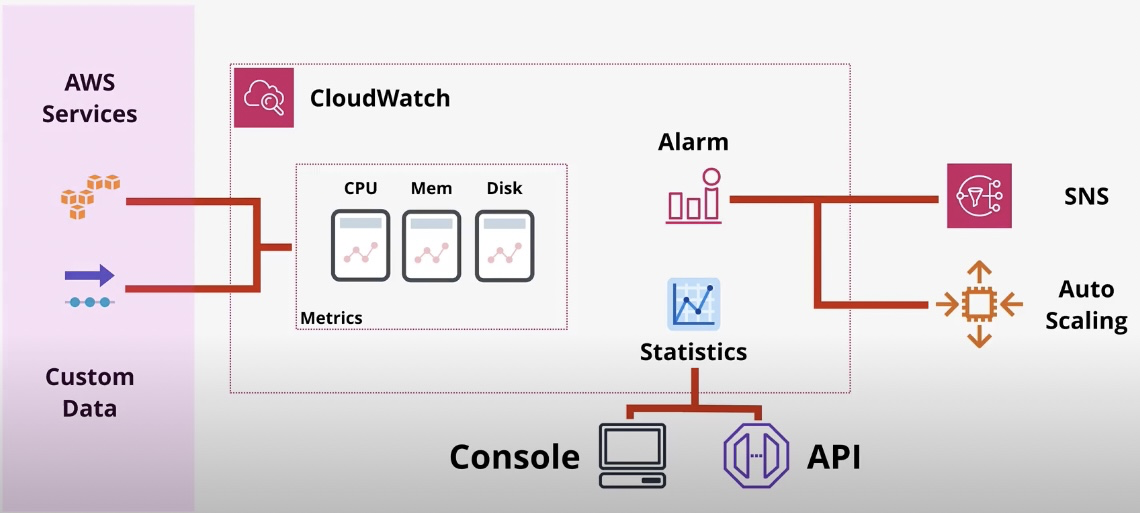

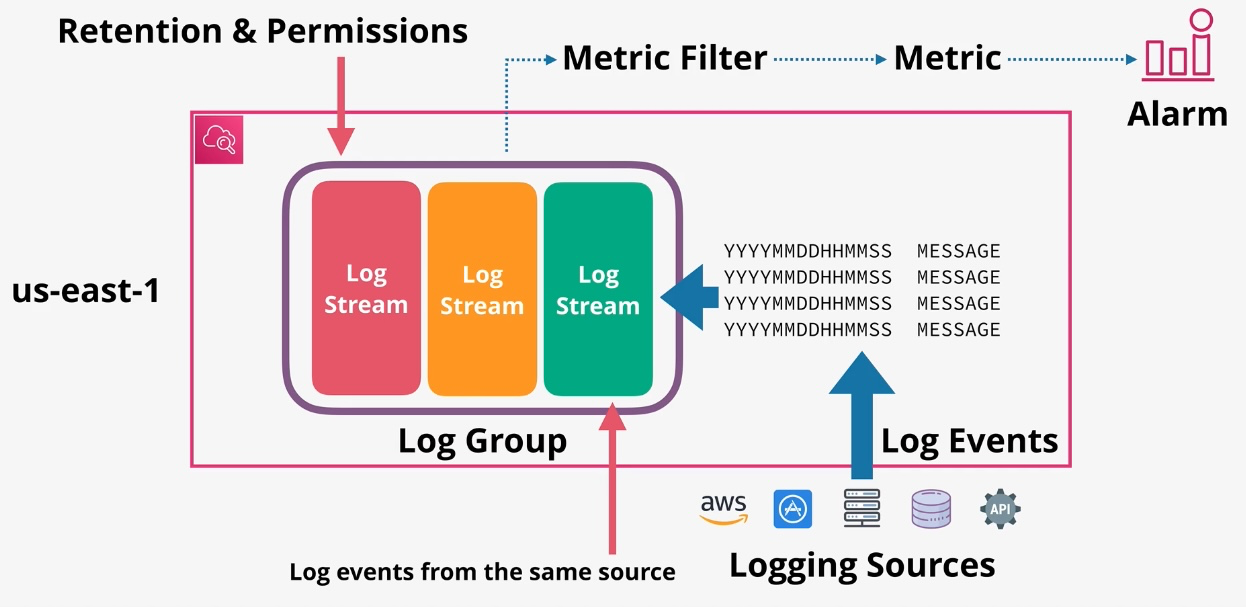

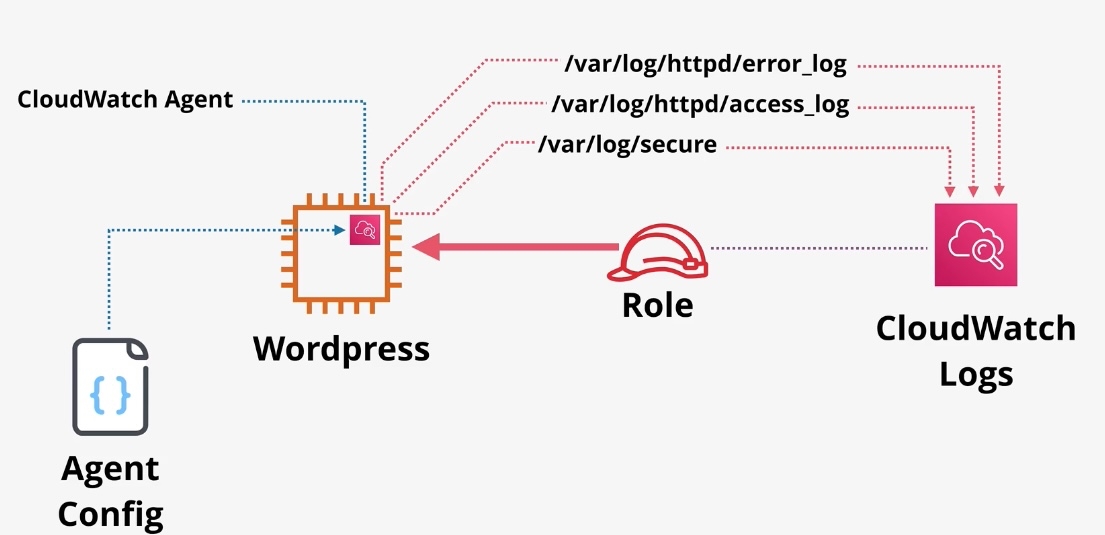

Amazon CloudWatch

- Type: Monitoring and Observability

- Description: Collects and monitors logs, metrics, and events for AWS resources and applications.

- Features:

- Metrics: Monitor resource usage (e.g., CPU, memory).

- Alarms: Trigger actions based on thresholds.

- Logs: Aggregate and analyze application and system logs.

- CloudWatch Events: Automate responses to system changes.

- Use Cases:

- Monitoring EC2 instance performance.

- Setting alerts for resource thresholds.

- Visualizing operational metrics.

AWS Command Line Interface (AWS CLI)

- Type: Command-Line Tool

- Description: Unified tool to manage AWS services from the terminal.

- Use Cases:

- Automating repetitive tasks.

- Scripting deployments and operations.

- Querying AWS resources programmatically.

AWS Compute Optimizer

- Type: Cost and Performance Optimization

- Description: Provides recommendations to optimize AWS resources for cost and performance.

- Use Cases:

- Right-sizing EC2 instances.

- Optimizing Lambda function configurations.

- Balancing cost and performance for resource usage.

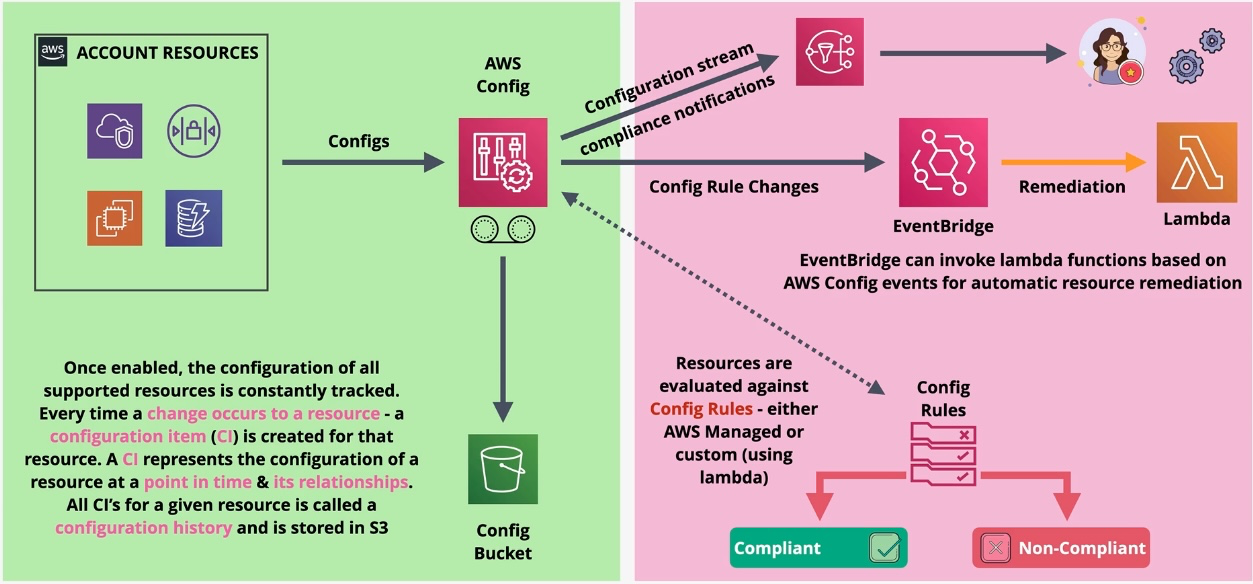

AWS Config

- Type: Configuration Management

- Description: Tracks and evaluates AWS resource configurations for compliance.

- Features:

- Configuration History: Records changes in resource configurations.

- Rules: Define compliance policies.

- Remediation: Automatically fixes non-compliant resources.

- Use Cases:

- Ensuring compliance with organizational policies.

- Auditing resource configurations.

- Detecting and fixing misconfigurations.

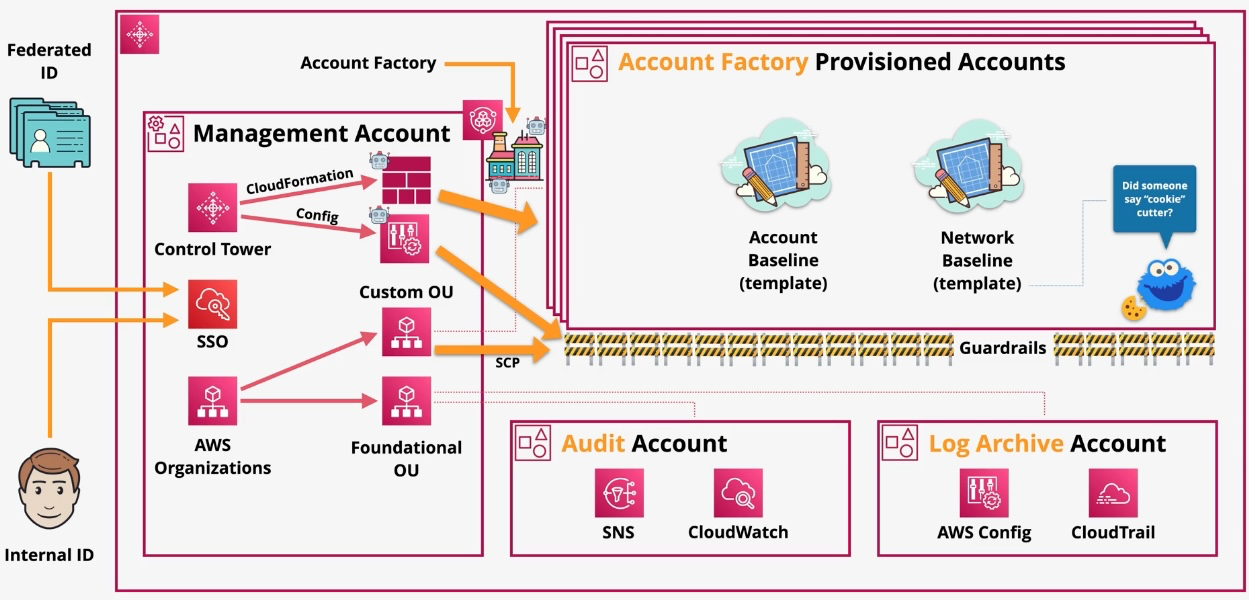

AWS Control Tower

- Type: Multi-Account Governance

- Description: AWS Control Tower simplifies the setup, governance, and management of multi-account AWS environments by implementing AWS best practices. It provides a pre-configured landing zone with governance controls (guardrails) to ensure security and compliance across accounts.

- Use Cases:

- Setting up multi-account environments.

- Enforcing security and compliance policies.

- Centralized account governance. Key Features:

- Landing Zone:

- Pre-configured multi-account environment based on AWS best practices.

- Includes logging, security accounts, and predefined network setups.

- Guardrails:

- Preventive Guardrails: Actively block non-compliant actions, such as creating resources in unauthorized regions.

- Detective Guardrails: Continuously monitor and flag non-compliance, such as unencrypted storage.

- Service Control Policies (SCPs):

- Apply policies across organizational units (OUs) to restrict specific actions.

- Examples: Prevent IAM policy changes, deny access to certain AWS regions.

- Account Factory:

- Automates account creation with predefined templates for networking, security, and compliance.

- Supports customization of VPC, IAM roles, and other baseline settings.

- Audit and Logging:

- Centralized logging through AWS CloudTrail and AWS Config for compliance tracking.

- Includes an audit account for governance and analysis.

- Dashboard:

- Provides a unified view of all accounts, compliance status, and resources.

- Simplifies operational insights and alerts. Governance and Security:

- Integrated Services:

- AWS Organizations: Manages multi-account structure and applies SCPs.

- AWS IAM Identity Center (SSO): Manages secure and centralized user access.

- AWS Config: Tracks resource configurations and ensures compliance with guardrails.

- CloudTrail: Provides detailed logging of account activities.

- Centralized Policy Enforcement:

- Use SCPs to restrict unauthorized actions across accounts.

- Monitor compliance through detective guardrails and Config rules.

- Custom Guardrails:

- Define additional preventive or detective controls using Config or custom Lambda functions.

- Example: Ensure EC2 instances do not use public IP addresses.

- Encryption and Access Control:

- Enforce encryption standards across accounts using SCPs and guardrails.

- Use fine-grained access controls to restrict sensitive actions.

Integrations:

- Security Tools:

- Integrates with GuardDuty, AWS Security Hub, and Amazon Macie for enhanced security monitoring.

- Flags anomalies and potential threats across accounts.

- Cost Management Tools:

- AWS Cost Explorer and AWS Budgets integration for tracking and managing account expenditures.

- Networking:

- Integrates with AWS Transit Gateway to streamline inter-account connectivity.

AWS Health Dashboard

- Type: Service Health Monitoring

- Description: Provides personalized information about AWS service disruptions and planned maintenance.

- Use Cases:

- Monitoring AWS service health.

- Proactive incident management.

- Receiving notifications for service events impacting your resources.

AWS License Manager

- Type: License Management Service

- Description: Simplifies the management of software licenses across AWS and on-premises environments.

- Use Cases:

- Tracking license usage for compliance.

- Managing bring-your-own-license (BYOL) workloads.

- Centralized license administration.

Amazon Managed Grafana

- Type: Visualization and Monitoring

- Description: Fully managed service for Grafana dashboards.

- Use Cases:

- Monitoring infrastructure metrics.

- Visualizing operational data.

- Integrating with CloudWatch, Prometheus, and more.

Amazon Managed Service for Prometheus

- Type: Managed Monitoring Service

- Description: Fully managed service for monitoring and alerting using Prometheus.

- Use Cases:

- Kubernetes metrics monitoring.

- Application performance monitoring.

- Centralizing metrics for large-scale systems.

AWS Management Console

- Type: Web-Based User Interface

- Description: A GUI for accessing and managing AWS services.

- Use Cases:

- Resource creation and management.

- Monitoring and troubleshooting.

- User-friendly interface for non-technical users.

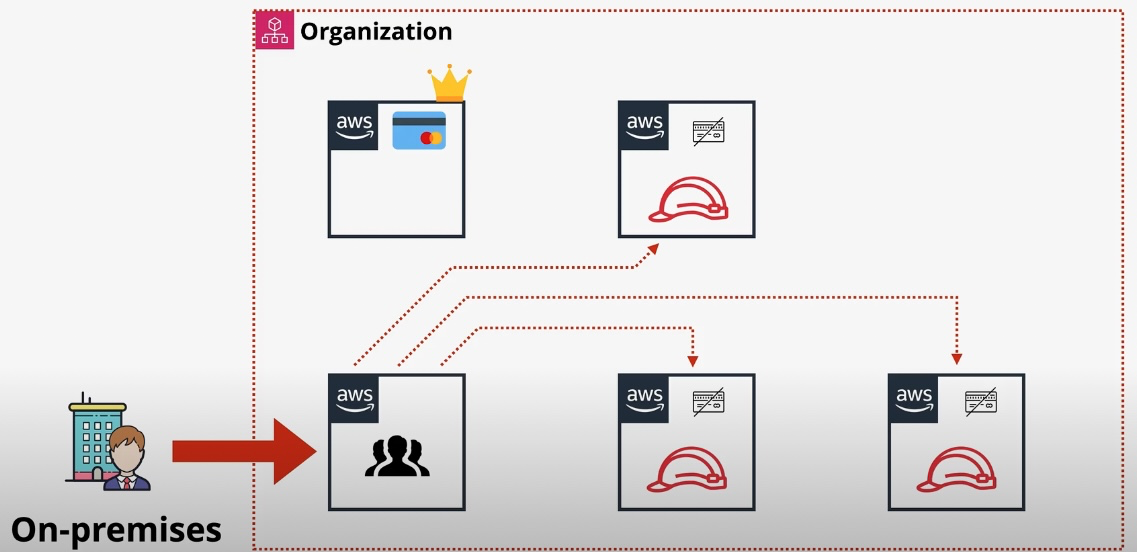

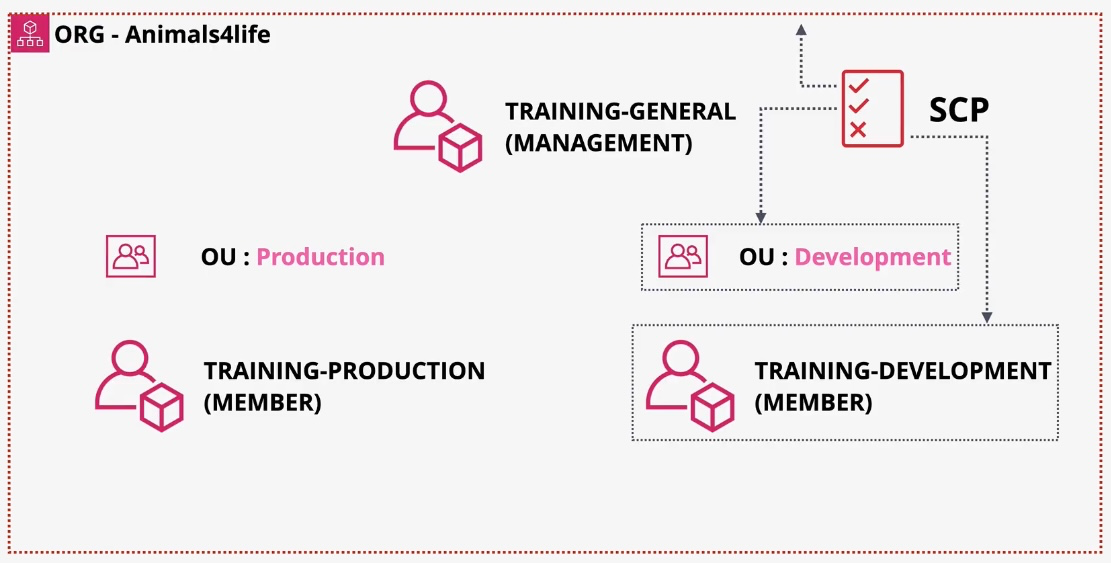

AWS Organizations

- Type: Multi-Account Management

- Description: AWS Organizations simplifies the management of multiple AWS accounts by providing centralized control over policies, billing, and resource sharing. It enables organizations to enforce governance, streamline account creation, and optimize costs. Key Features:

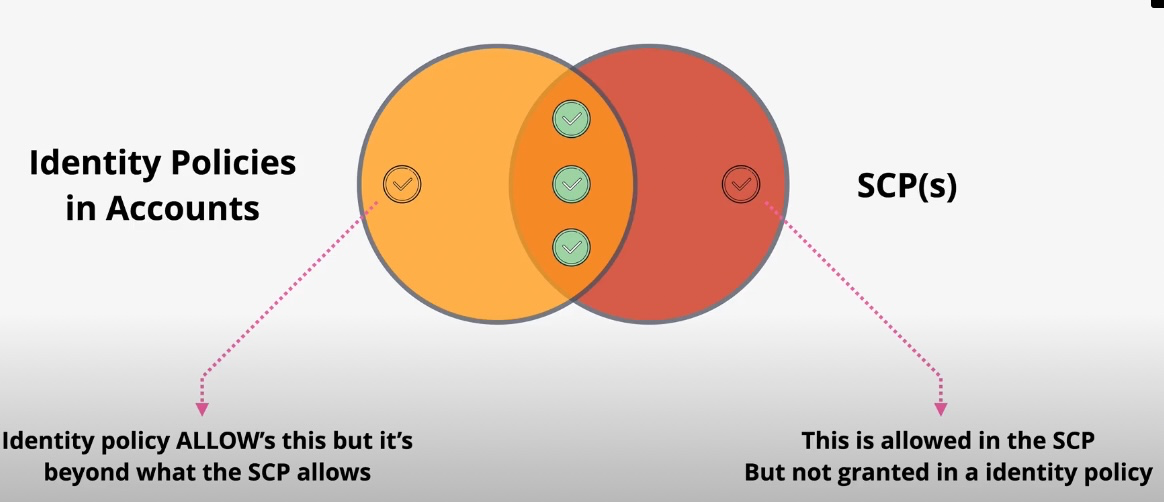

- Service Control Policies (SCPs):

- Enforce fine-grained permissions across accounts or organizational units (OUs).

- Examples: Restrict access to specific regions or services.

- Organizational Units (OUs):

- Group accounts by function, project, or environment for better management.

- Apply SCPs at the OU level for consistent governance.

- Consolidated Billing:

- Combine all accounts under a single payment method for simplified billing and cost tracking.

- Enable cost allocation tags for detailed expense analysis.

- Resource Sharing:

- Share resources like VPCs, Transit Gateways, and license configurations across accounts securely.

- Account Management:

- Simplify the creation and management of AWS accounts with predefined configurations. Governance and Security:

- Enforce least privilege access across accounts with SCPs.

- Monitor account activity and compliance through CloudTrail and AWS Config.

- Secure inter-account communication with AWS PrivateLink and Resource Access Manager (RAM).

Use Cases:

- Centralized Policy Management:

- Enforce compliance by applying SCPs to OUs for consistent governance across accounts.

- Cost Optimization:

- Use consolidated billing to track and reduce costs across the organization.

- Resource Sharing:

- Share VPCs and other resources efficiently across accounts for centralized management.

- Scaling Operations:

- Create and manage new accounts easily while inheriting organizational policies.

Benefits:

- Centralized Governance: Manage accounts and policies from a single location.

- Cost Efficiency: Consolidate billing and streamline cost tracking.

- Simplified Resource Sharing: Securely share resources without duplicating efforts.

- Scalability: Scale cloud operations while maintaining consistent control.

AWS Proton

- Type: Application Delivery Automation

- Description: Automates the deployment and management of container and serverless applications.

- Use Cases:

- Standardizing infrastructure and deployment.

- Managing microservices at scale.

- Simplifying developer workflows.

AWS Service Catalog

- Type: Service Deployment Management

- Description: AWS Service Catalog enables organizations to centrally manage and distribute approved IT services and applications. It simplifies governance by creating a catalog of pre-approved resources, ensuring consistency and compliance across deployments.

Key Features:

- Portfolio Management:

- Organize and manage a collection of approved resources, including EC2 instances, RDS databases, and S3 buckets.

- Define permissions for users and groups to access specific portfolios.

- Product Management:

- Create products using AWS CloudFormation templates to standardize resource provisioning.

- Version control allows easy updates and rollbacks.

- Tagging and Tracking:

- Enforce tagging policies for cost allocation and resource management.

- Track usage of provisioned products across accounts.

- Access Control:

- Granular permissions to restrict who can view, deploy, and manage resources.

- Self-Service Portal:

- Empower users to deploy resources themselves from the catalog while adhering to governance policies.

Governance and Security:

- Compliance: Enforce organizational policies by distributing pre-approved configurations only.

- Audit and Tracking: Monitor deployments with CloudTrail and AWS Config.

- IAM Integration: Manage permissions to portfolios and products securely.

- Cost Control: Prevent over-provisioning by restricting resource specifications in templates.

Use Cases:

- Standardized Resource Deployment:

- Ensure consistent configurations for commonly used resources like VMs or databases.

- Cost Management:

- Restrict access to resource types or sizes that exceed budget constraints.

- Governed Self-Service:

- Allow teams to provision resources independently while adhering to security and compliance standards.

- Multi-Account Resource Sharing:

- Distribute resources across accounts in AWS Organizations. Benefits:

- Streamlined Management: Simplify resource deployment and updates through centralized catalogs.

- Enhanced Compliance: Ensure only approved configurations are deployed.

- Operational Efficiency: Empower teams with self-service capabilities while maintaining governance.

- Cost Optimization: Reduce waste through controlled provisioning.

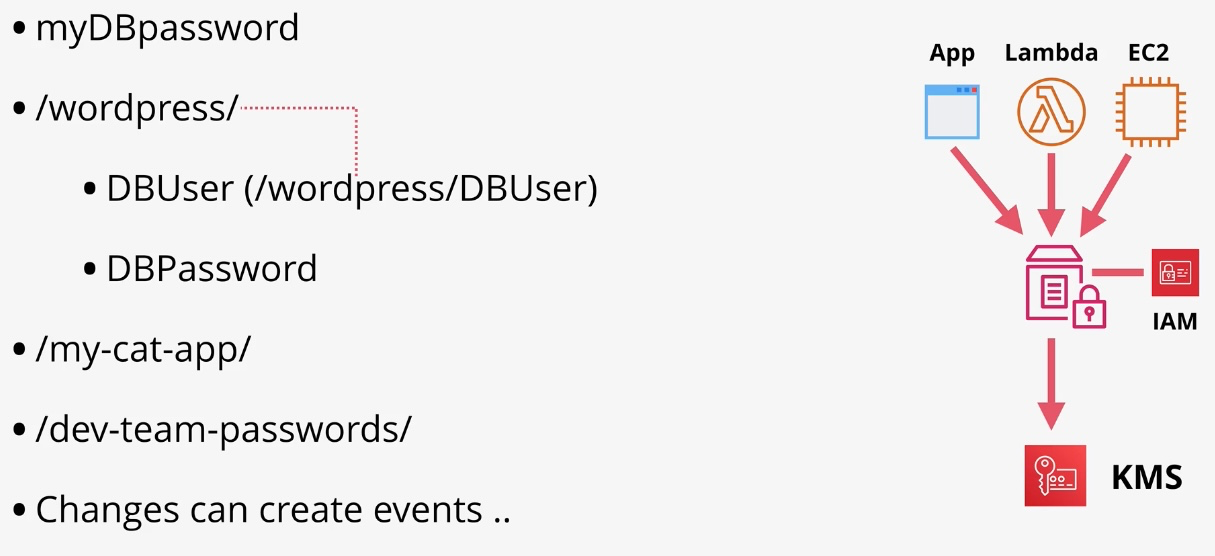

AWS Systems Manager

- Type: Operations Management

- Description: AWS Systems Manager provides a unified interface for managing AWS resources and on-premises infrastructure. It simplifies operational tasks such as automation, patch management, and monitoring, while enhancing security and compliance across environments.

Key Features:

- Session Manager:

- Provides secure shell access to EC2 instances without needing bastion hosts or SSH keys.

- Integrated logging for audit and compliance.

- Automation:

- Automates repetitive tasks like instance provisioning and configuration management using pre-built or custom runbooks.

- Patch Manager:

- Automates patching for operating systems and applications across instances.

- State Manager:

- Ensures desired configurations are applied and maintained on instances.

- Parameter Store:

- Securely store, manage, and retrieve configuration data and secrets for your applications.

- Run Command:

- Execute commands on multiple instances without needing to log in.

- Inventory:

- Collect and view metadata about instances and software installed across environments.

- OpsCenter:

- Centralized dashboard for tracking and managing operational issues.

Governance and Security:

- Access Control: Manage permissions with AWS Identity and Access Management (IAM).

- Audit Trails: Use AWS CloudTrail to log all actions taken via Systems Manager.

- Encryption: Securely store sensitive data in Parameter Store using AWS Key Management Service (KMS).

- Compliance Tracking: Integrates with AWS Config to ensure resources meet compliance requirements.

Use Cases:

- Unified Operations Management:

- Centralize operational tasks across AWS and on-premises infrastructure.

- Patch Automation:

- Ensure all instances remain up-to-date with minimal manual intervention.

- Configuration Management:

- Automatically apply and enforce desired instance configurations.

- Secure Access Management:

- Provide auditable access to instances without requiring open ports or bastion hosts.

- Incident Response:

- Use Automation and OpsCenter for faster diagnosis and resolution of operational issues. Benefits:

- Enhanced Efficiency: Streamline routine operational tasks through automation.

- Improved Security: Secure access to instances and sensitive configurations.

- Cost Savings: Reduce operational overhead with centralized tools and automation.

- Compliance Simplification: Easily monitor and enforce compliance across environments.

AWS Trusted Advisor

- Type: Resource Optimization Tool

- Description: Provides recommendations for cost optimization, security, fault tolerance, performance, and service limits.

- Use Cases:

- Identifying cost-saving opportunities.

- Enhancing security configurations.

- Optimizing AWS resource usage.

AWS Well-Architected Tool

- Type: Architecture Assessment Tool

- Description: Helps assess workloads and implement best practices using the AWS Well-Architected Framework.

- Use Cases:

- Reviewing workloads for compliance with best practices.

- Identifying potential architectural risks.

- Improving operational efficiency.

Comparison of Management and Governance Services Service Best For AWS Auto Scaling Scaling resources dynamically. AWS CloudFormation Automating resource provisioning. AWS CloudTrail Tracking API activity and compliance. Amazon CloudWatch Monitoring resources and setting alerts. AWS Config Ensuring compliance and tracking changes. AWS Control Tower Managing multi-account environments. AWS Organizations Centralizing account management. AWS Systems Manager Unified operational insights and tasks. AWS Trusted Advisor Resource optimization and best practices.

Media Services

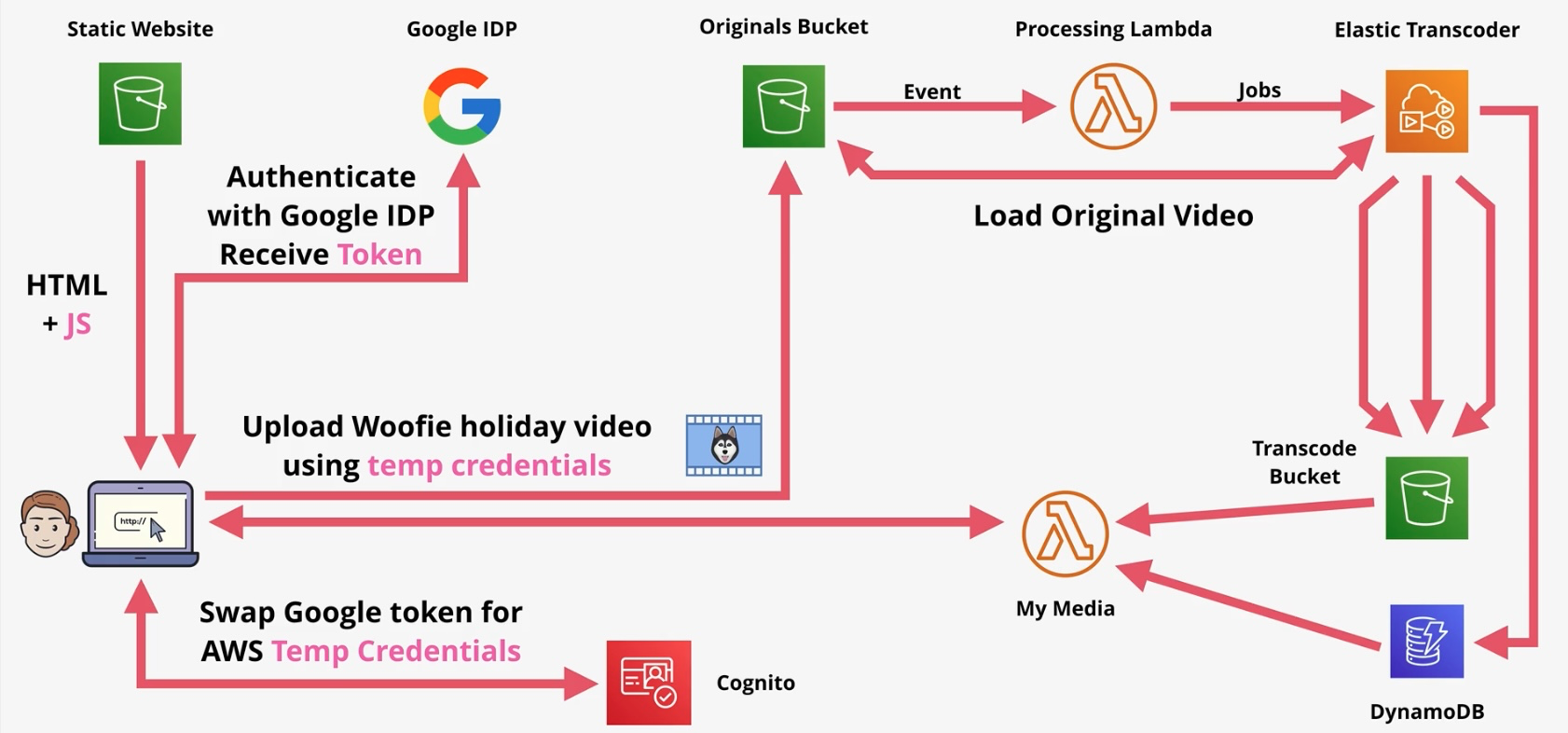

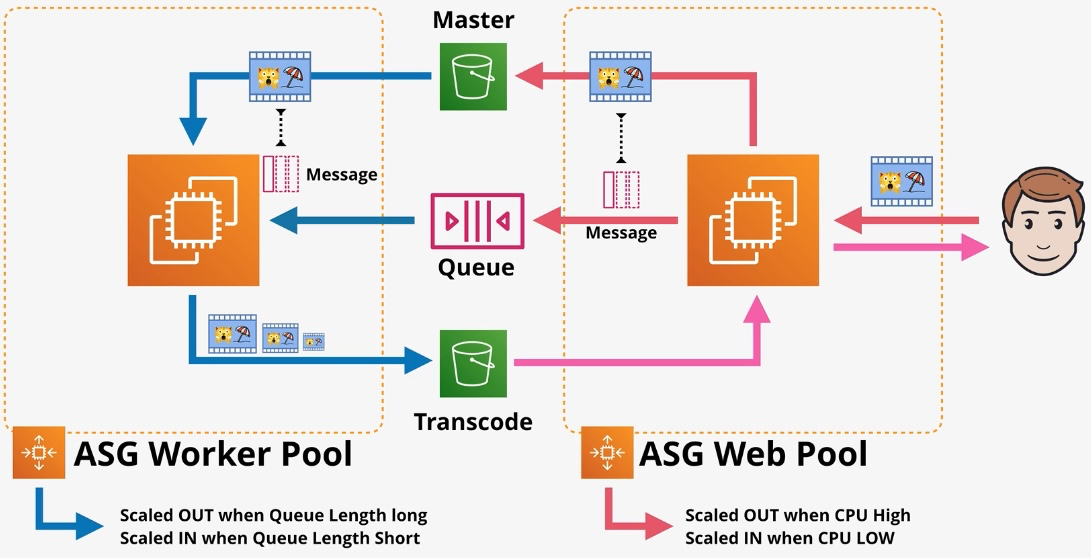

Amazon Elastic Transcoder

- Type: Media Transcoding Service

- Description: Converts media files into formats optimized for playback on devices.

- Use Cases:

- Video format conversion.

- Streaming optimization.

- Content delivery for mobile users.

Amazon Kinesis Video Streams

- Type: Video Streaming

- Description: Processes and analyzes streaming video data in real time.

- Use Cases:

- Real-time video analytics.

- Video archiving and playback.

- Machine learning for video analysis.

Migration and Transfer

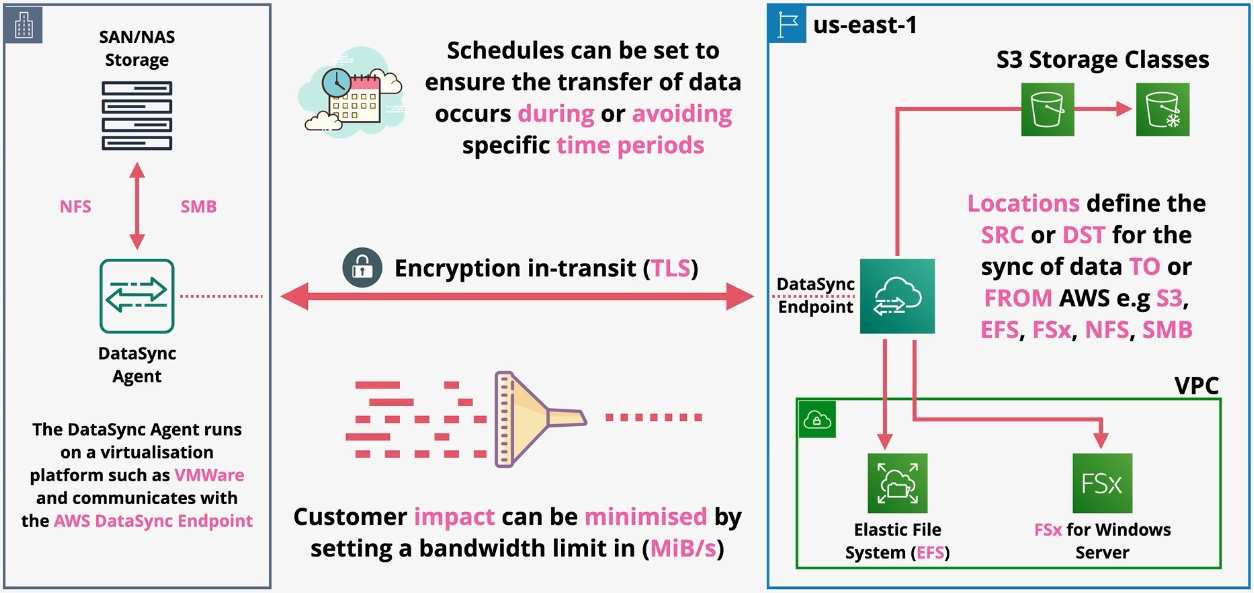

AWS DataSync

Type: Data Transfer Automation Service Description: AWS DataSync simplifies, automates, and accelerates the process of transferring and replicating large volumes of data between on-premises storage systems, edge locations, and AWS storage services over the internet or AWS Direct Connect. It supports file data and associated file system metadata such as ownership, timestamps, and access permissions, ensuring seamless data migration and synchronization.

Key Features:

- High-Performance Data Transfer:

- Transfers large datasets at speeds significantly faster than open-source tools.

- Metadata Preservation:

- Maintains file system metadata such as ownership, timestamps, and permissions during transfers.

- Supported Locations:

- Amazon S3: Transfer data to/from S3 buckets, supporting all storage classes.

- Amazon EFS (Elastic File System): Seamless integration with EFS for NFS-based file systems.

- Amazon FSx (File Systems): Supports FSx for Windows File Server, FSx for Lustre, and FSx for NetApp ONTAP.

- On-Premises Storage: Includes Network File System (NFS) and Server Message Block (SMB) storage systems.

- Protocol Support:

- Uses NFS or SMB protocols for accessing and transferring data from on-premises systems.

- Task Automation:

- Automates data synchronization tasks, including scheduling recurring transfers.

- Data Validation:

- Automatically verifies data integrity during transfers, ensuring accuracy.

- AWS Management Console and API Integration:

- Configure, monitor, and manage transfers easily via the AWS Management Console or programmatically with APIs.

Subtypes and Concepts:

- Locations:

- Define the source and destination endpoints for data transfers, such as S3 buckets, EFS file systems, or FSx systems.

- Example: A location for Amazon FSx for Windows File Server serves as an endpoint for transferring data using the Server Message Block (SMB) protocol.

- Agents:

- Software agents deployed on-premises to facilitate secure communication between local storage systems and AWS.

- Tasks:

- A task specifies the source, destination, filters, and configurations for a data transfer job.

- Filters:

- Allow users to include or exclude specific files or directories during a transfer.

Use Cases:

- Cloud Migration:

- Efficiently move on-premises data to AWS storage services such as S3, EFS, or FSx.

- Hybrid Workloads:

- Synchronize data between on-premises storage and AWS for hybrid application scenarios.

- Backup and Disaster Recovery:

- Automate the replication of critical data to AWS for backup and recovery purposes.

- Data Lake Creation:

- Ingest large datasets into Amazon S3 for analytics and machine learning applications.

- Metadata Preservation:

- Retain access permissions, ownership, and timestamps for compliance and operational needs.

Governance and Security:

- Encryption:

- Encrypts data in transit using Transport Layer Security (TLS).

- Supports encryption at rest using AWS KMS for S3 and FSx destinations.

- Access Control:

- Uses IAM roles and policies to control access to DataSync resources and target AWS services.

- Monitoring and Logging:

- Integrates with CloudWatch to provide visibility into data transfer metrics and task statuses.

Benefits:

- Simplified Management:

- Automates and schedules repetitive data transfer tasks.

- Faster Transfers:

- Moves data at high speeds, significantly reducing transfer times compared to traditional methods.

- Seamless Integration:

- Works natively with AWS storage services for smooth data migration and synchronization.

- Reliable and Secure:

- Ensures data integrity and security during transfers, with automatic verification and encryption.

- Cost Efficiency:

- Pay only for the data transferred, avoiding additional licensing or hardware costs.

AWS Migration Hub

Type: Migration Tracking and Management Service Description: AWS Migration Hub provides a centralized platform to plan, track, and manage the migration of applications and resources to AWS. It integrates with various AWS and partner migration tools, offering a unified view of migration progress and outcomes across multiple projects and services.

Key Features:

- Centralized Migration Tracking:

- Offers a single dashboard to monitor migration progress for applications and servers across multiple AWS services and partner tools.

- Application Grouping:

- Organize servers and resources into logical application groups for better tracking and dependency management.

- Integration with Migration Tools:

- Supports AWS-native tools like AWS Application Migration Service, AWS Database Migration Service (DMS), and third-party tools such as CloudEndure.

- Customizable Metrics:

- Tracks migration status using metrics like server discovery, data replication, and cutover completion.

- Dependency Visualization:

- Automatically identifies application dependencies to ensure complete and efficient migration planning.

- Flexible Reporting:

- Provides detailed migration reports for individual applications or the entire portfolio.

- Multi-Region Support:

- Manage and track migrations across multiple AWS Regions for global projects.

Subtypes and Components:

- Discovery Tools:

- Integrates with tools like AWS Application Discovery Service to collect data about on-premises infrastructure and application dependencies.

- Migration Tools:

- Works seamlessly with AWS services like Application Migration Service, DMS, and partner tools to perform the actual migration.

- Application Portfolio:

- Helps group and manage applications, enabling a structured approach to migration.

- Progress Status:

- Tracks and categorizes migration phases (e.g., data replication, cutover, testing) for each application or server.

Use Cases:

- End-to-End Migration Management:

- Plan, execute, and monitor migrations of applications, servers, and databases from on-premises or other clouds to AWS.

- Application Dependency Mapping:

- Discover and visualize interdependencies between applications to reduce migration risks and ensure smooth transitions.

- Migration Tool Consolidation:

- Use a unified dashboard to manage migrations from multiple tools, reducing complexity and improving visibility.

- Progress Monitoring:

- Track real-time migration progress and completion status for individual applications or portfolios.

- Compliance and Reporting:

- Generate detailed reports for auditing and compliance purposes.

Governance and Security:

- Role-Based Access:

- Use IAM policies to control access to Migration Hub resources and data.

- Integration with CloudTrail:

- Logs all user activities and API calls for monitoring and compliance.

- Encryption:

- Ensures secure data handling by integrating with AWS KMS for encryption.

Benefits:

- Centralized Visibility:

- Consolidates migration progress and metrics into a single view, reducing management overhead.

- Simplified Planning:

- Facilitates detailed planning and execution by identifying dependencies and tracking tools.

- Enhanced Collaboration:

- Teams can work collaboratively with shared insights and consistent progress updates.

- Tool Agnostic:

- Supports a variety of migration tools, making it versatile for different workloads and environments.

- Scalable Management:

- Handles small-scale to enterprise-level migrations seamlessly.

AWS Snow Family

Type: Offline Data Transfer and Edge Computing Services Description: The AWS Snow Family consists of physical devices designed for offline data transfer and edge computing. It enables the movement of large volumes of data between on-premises locations and AWS or facilitates compute capabilities in disconnected or edge environments. The family includes Snowcone, Snowball, and Snowmobile, each tailored for specific data transfer and edge computing needs.

Key Services in AWS Snow Family

-

AWS Snowcone

- Description:

- The smallest member of the Snow Family, ideal for portable edge computing and data transfer.

- Lightweight and rugged, with onboard compute for running applications in remote environments.

- Capacity:

- Storage: 8 TB of usable storage.

- Use Cases:

- Portable data collection in remote or mobile environments.

- Edge computing for IoT devices.

- Data transfer from constrained locations.

-

AWS Snowball

- Description:

- Available in two variants: Snowball Edge Storage Optimized and Snowball Edge Compute Optimized.

- Supports large-scale data transfer and edge computing.

- Capacity:

- Storage Optimized: Up to 80 TB of usable storage.

- Compute Optimized: Supports 42 TB of usable storage with additional compute capabilities.

- Features:

- Edge Computing: Run applications on Snowball devices using AWS IoT Greengrass and EC2 instances.

- Encryption: Data is encrypted with AWS KMS during transfer.

- Use Cases:

- Large-scale data migrations to AWS.

- Processing and analyzing data in disconnected environments.

- Disaster recovery and backup.

-

AWS Snowmobile

- Description:

- A 45-foot shipping container designed for petabyte- and exabyte-scale data migrations.

- Moves massive datasets securely and efficiently.

- Capacity:

- Up to 100 PB per Snowmobile.

- Use Cases:

- Migrating entire data centers to AWS.

- High-volume archival and regulatory data transfers.

- Large-scale digital media migration.

Common Features Across the Snow Family

- Secure Data Handling:

- End-to-end encryption using AWS KMS.

- Tamper-evident devices ensure data security during transit.

- Integration with AWS Services:

- Data is seamlessly ingested into Amazon S3, EBS, or Glacier.

- Data Validation:

- Automatically validates data upon transfer to ensure integrity.

- Durability:

- Designed to withstand harsh environments for remote or edge use cases.

- Edge Computing Capabilities:

- Run applications locally on Snow devices for preprocessing, analytics, or AI/ML inference.

Use Cases

- Data Transfer and Migration:

- Efficiently move large volumes of data from on-premises to AWS without relying on the internet.

- Edge Computing:

- Process and analyze data in disconnected or latency-sensitive environments, such as oil rigs, ships, or military operations.

- Disaster Recovery:

- Rapidly back up or restore large datasets for disaster recovery planning.

- Media and Entertainment:

- Transfer massive video libraries or high-resolution content for processing and archiving.

- IoT and Analytics:

- Collect and preprocess IoT data at the edge before transferring to AWS for further analysis.

Benefits

- Scalability:

- Suitable for small data volumes (Snowcone) to massive datasets (Snowmobile).

- Cost Efficiency:

- Avoids high network transfer costs by utilizing offline transfer methods.

- Portability:

- Compact and rugged devices like Snowcone and Snowball can operate in remote and challenging environments.

- Enhanced Security:

- Hardware encryption and tamper-resistant designs safeguard sensitive data.

- Reliability:

- Provides consistent data transfer and edge compute capabilities even in disconnected environments.

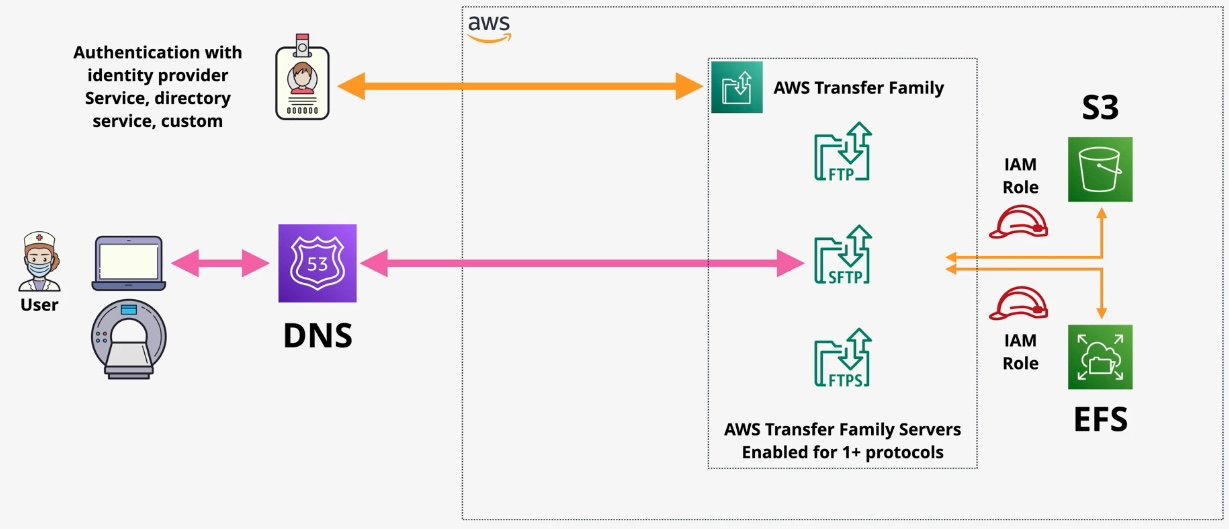

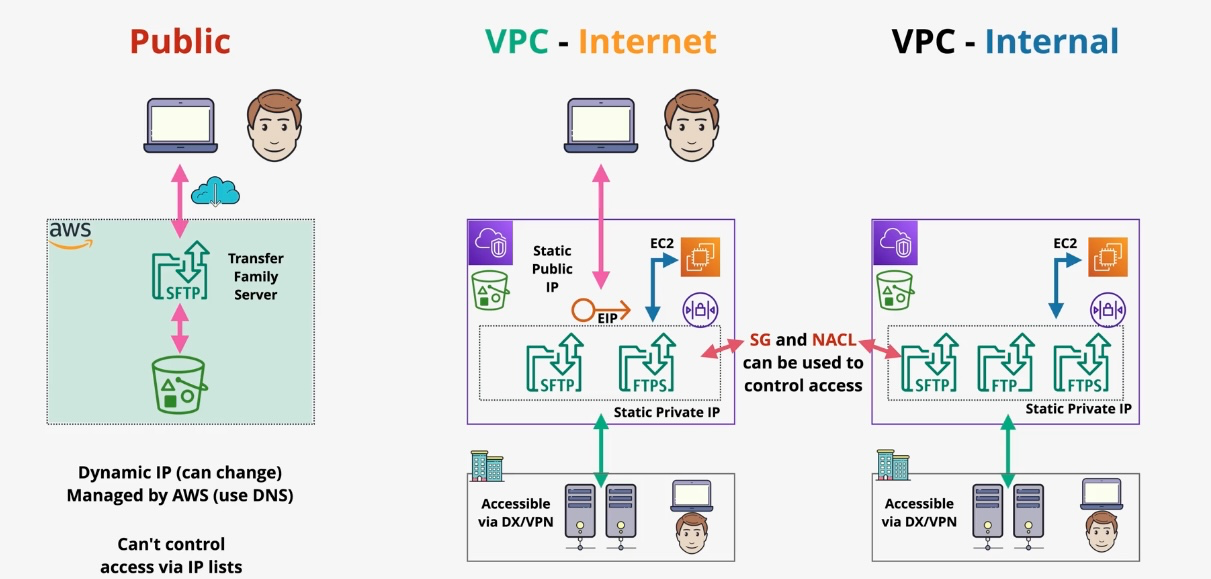

AWS Transfer Family

Type: Managed File Transfer Service Description: The AWS Transfer Family enables secure and reliable file transfers directly into and out of AWS storage services using industry-standard file transfer protocols such as SFTP (Secure File Transfer Protocol), FTPS (File Transfer Protocol Secure), and FTP (File Transfer Protocol). It integrates seamlessly with Amazon S3 and Amazon EFS, making it easy to modernize and migrate legacy file transfer workflows to the cloud without changing client-side configurations.

Key Features

- Protocol Support:

- SFTP (SSH File Transfer Protocol): Securely transfers files using encryption and authentication.

- FTPS (File Transfer Protocol Secure): Transfers files over an encrypted SSL/TLS connection.

- FTP (File Transfer Protocol): For legacy workflows requiring unencrypted file transfers.

- Native AWS Integration:

- Transfers files directly to Amazon S3 or Amazon EFS, enabling further processing or storage in the AWS ecosystem.

- User Management:

- Supports Amazon Cognito, AWS Directory Service, or custom identity providers for user authentication.

- High Availability and Scalability:

- Fully managed service with automatic scaling to handle fluctuating workloads without manual intervention.

- Customizable Workflows:

- Supports custom workflows for post-transfer processing, such as Lambda triggers for file processing or moving files to different S3 buckets.

- Monitoring and Logging:

- Integrated with AWS CloudWatch and AWS CloudTrail for detailed monitoring, auditing, and troubleshooting.

- Security and Compliance:

- Provides fine-grained access control using IAM policies.

- Offers encryption for data at rest (S3, EFS) and in transit.

Subtypes and Components

- Endpoint Types:

- Public endpoints for internet-facing transfers.

- VPC-hosted endpoints for private, secure transfers within a Virtual Private Cloud (VPC).

- Authentication Methods:

- Service-Managed Users: Store user credentials securely in AWS Transfer Family.

- Custom Identity Providers: Use AWS Lambda to authenticate users via external systems.

- AWS Directory Service: Integrate with Microsoft Active Directory for enterprise authentication.

- Custom Processing Workflows:

- Automate actions like virus scanning, data parsing, or moving files across buckets using AWS Lambda. Use Cases

- Legacy File Transfer Modernization:

- Migrate on-premises SFTP/FTPS/FTP workflows to AWS without client-side changes.

- Secure Data Exchange:

- Enable secure data sharing with business partners, vendors, or clients.

- Integration with Analytics Pipelines:

- Automate ingestion of files into data lakes or analytics pipelines using Amazon S3.

- Multi-Region Data Access:

- Transfer data across regions for global applications or disaster recovery.

- IoT and Edge Data Collection:

- Securely transfer files from edge devices to AWS for processing and analysis. Governance and Security

- Encryption:

- Data is encrypted in transit using SFTP or FTPS and at rest in S3 or EFS.

- Access Control:

- Use IAM roles and policies to define granular access permissions for users.

- Auditing and Monitoring:

- Logs all file transfer activities via CloudTrail and provides performance metrics in CloudWatch.

- Compliance:

- Meets industry standards like GDPR, HIPAA, PCI DSS, and FedRAMP, ensuring regulatory compliance for sensitive workloads. Benefits

- Ease of Use: Fully managed service eliminates the need to set up or maintain file transfer servers.

- Scalability: Automatically scales to handle large volumes of file transfers.

- Cost-Effectiveness: Pay only for the resources used, without additional licensing costs.

- Compatibility: Supports legacy protocols for seamless migration without disrupting existing workflows.

- Enhanced Security: Built-in encryption, IAM-based access control, and compliance certifications.

AWS Application Migration Service (AWS MGN)

Type: Lift-and-Shift Migration Service Description: AWS Application Migration Service (AWS MGN) simplifies the migration of applications from physical, virtual, or cloud-based environments to AWS with minimal downtime. It continuously replicates source servers into AWS and converts them into native AWS resources. This approach allows for efficient and reliable migrations without requiring application re-architecture.

Key Features

- Continuous Replication:

- Uses block-level replication to copy data from source servers to AWS, ensuring minimal downtime during migration.

- Automated Conversion:

- Converts replicated servers into Amazon EC2 instances, retaining the original application stack.

- Testing and Validation:

- Launch test instances in AWS to validate the migration before final cutover.

- Support for Multiple Platforms:

- Migrates workloads from physical servers, VMware, Hyper-V, KVM, and other cloud providers.

- Customizable Launch Settings:

- Define EC2 instance types, networking configurations, and security groups for target resources.

- Post-Migration Modernization:

- Integrate migrated applications with AWS services, such as RDS, Lambda, or S3, for optimization.

- Integrated Dashboard:

- Provides a centralized view of replication status, server health, and migration progress.

Subtypes and Components

- Replication Agent:

- A lightweight agent installed on source servers to facilitate data replication to AWS.

- Launch Templates:

- Define instance types, storage configurations, and networking settings for converted resources.

- Testing and Cutover:

- Test migrated workloads in isolation before switching production traffic.

- Orchestration:

- Automated workflows to manage replication, testing, and launch processes.

Use Cases

- Lift-and-Shift Migrations:

- Quickly move existing workloads to AWS without requiring changes to the application stack.

- Disaster Recovery: